TensorFlow - Basics

Some notes on the basics of TensorFlow.

Typical structure of a TensorFlow model using Keras

```python
import numpy as np
from tensorflow import keras

# Make the model using Keras, specifying the input_shape
model = keras.Sequential(...)

# Compile the model and specify the loss function and the optimizer
model.compile(optimizer='sgd', loss='mean_squared_error')

# Build the dataset in the shape the model is expecting
xs = np.array([...], dtype=float)
ys = np.array([...], dtype=float)

# Fit the model, specifying the number of training epochs
model.fit(xs, ys, epochs=500)

# Make predictions
model.predict([...])
```

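- loss function - measures how good or bad the model's estimate is (here, mean squared error)

- optimizer - adjusts the model's parameters to improve the estimate, i.e. to reduce the loss (here, stochastic gradient descent, sgd)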
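To make the loss function and optimizer concrete, here is a minimal NumPy sketch of what compiling with sgd and mean_squared_error and then calling fit does for a single-neuron model y = w * x + b. It is a simplification under stated assumptions: the data (y = 2x - 1), the zero starting parameters and the learning rate of 0.05 are hypothetical, and it uses plain full-batch gradient descent rather than Keras's actual SGD implementation.

```python
import numpy as np

# Hypothetical data following y = 2x - 1
xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0])
ys = 2 * xs - 1

w, b = 0.0, 0.0          # model parameters (Keras would initialise these randomly)
learning_rate = 0.05     # assumed step size

def mse(pred, target):
    # Loss function: mean squared error, i.e. how bad the current estimate is
    return np.mean((pred - target) ** 2)

for epoch in range(500):
    pred = w * xs + b
    error = pred - ys
    # Optimizer: move each parameter against the gradient of the MSE
    w -= learning_rate * 2 * np.mean(error * xs)
    b -= learning_rate * 2 * np.mean(error)

final_loss = mse(w * xs + b, ys)
prediction = w * 10.0 + b   # should be close to 2 * 10 - 1 = 19
```

Each epoch the loss tells us how far off the estimate is, and the optimizer nudges w and b in the direction that reduces it.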

Labels

You can use numeric labels and then map those numbers to text in different languages (i18n). For example, in the Fashion-MNIST dataset an ankle boot is represented by the label 9, which can then be mapped to the word for "ankle boot" in any language.
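A small sketch of that mapping: the English Fashion-MNIST class names are indexed by label, and a per-language lookup falls back to English. The TRANSLATIONS table and label_name helper are hypothetical, and the French entries are illustrative only.

```python
# Fashion-MNIST class names, indexed by the numeric label 0-9
FASHION_MNIST_EN = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
                    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]

# Hypothetical, partial translations keyed by the same numeric labels
TRANSLATIONS = {"fr": {5: "Sandale", 9: "Bottine"}}

def label_name(label, language="en"):
    """Map a numeric label to display text, falling back to English."""
    return TRANSLATIONS.get(language, {}).get(label, FASHION_MNIST_EN[label])
```

The model only ever sees the number 9; the display text is chosen afterwards.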

Neural Network Design

Explore the input and output values first.

For example, say the input is an image of size 28x28 and we want the output to be one of 10 values (like an enum):

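```python
import tensorflow as tf
from tensorflow import keras

model = keras.Sequential([
    # Input image of shape 28x28, flattened into 784 values
    keras.layers.Flatten(input_shape=(28, 28)),
    # Hidden layer of 128 neurons: the f0..f127 functions
    keras.layers.Dense(128, activation=tf.nn.relu),
    # Output enum of 10 values, one per class
    keras.layers.Dense(10, activation=tf.nn.softmax)
])
```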

The outputs of the 128 functions (f0..f127) are combined by the output layer to give us our output.

Training has to vary the parameters of these functions so that, for each input, the output matches the expected label.

Initially, each function is initialised with random parameters.

The loss function measures how good or bad the results are and the optimizer is responsible for changing the parameters of the functions to make the results better.
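The forward pass of the network above can be sketched in NumPy to show how the 128 functions feed the output. The weights are random, standing in for a freshly initialised network; drawing them from a normal distribution is an assumption (Keras Dense layers default to Glorot uniform), and the input image is random data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical random parameters for an untrained network
W1 = rng.normal(scale=0.05, size=(784, 128))   # weights of f0..f127
b1 = np.zeros(128)
W2 = rng.normal(scale=0.05, size=(128, 10))    # weights of the 10 output units
b2 = np.zeros(10)

def forward(image):
    x = image.reshape(-1)                      # Flatten: 28x28 -> 784
    h = np.maximum(0, x @ W1 + b1)             # Dense(128, relu): outputs of f0..f127
    logits = h @ W2 + b2                       # Dense(10): weighted sums of f0..f127
    exps = np.exp(logits - logits.max())       # softmax, shifted for numerical stability
    return exps / exps.sum()

probs = forward(rng.random((28, 28)))          # a random "image" with pixels in [0, 1)
```

With random weights the 10 probabilities are essentially arbitrary; training is what adjusts W1, b1, W2 and b2 until the largest probability lands on the correct class.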

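ReLU

ReLU (rectified linear unit) returns its input if it is positive and 0 otherwise:

```python
def relu(x):
    return x if x > 0 else 0
```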
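In practice ReLU is applied element-wise to a whole layer's output at once. A NumPy sketch of that vectorised form, with a hypothetical layer output:

```python
import numpy as np

def relu(x):
    # Element-wise max(x, 0): negatives become 0, positives pass through unchanged
    return np.maximum(x, 0)

layer_output = np.array([-2.0, -0.5, 0.0, 1.5, 3.0])
activated = relu(layer_output)
```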

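Softmax

Softmax turns the output layer's raw values into probabilities that sum to 1, with the largest value getting the largest probability. The predicted class is then the index of the maximum probability (an argmax):

```python
import numpy as np
def softmax(vals):
    exps = np.exp(vals - np.max(vals))  # subtract the max for numerical stability
    return exps / exps.sum()            # probabilities that sum to 1
```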
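Because softmax preserves the ordering of its inputs, taking the index of the maximum probability gives the same class as taking the index of the maximum raw value; softmax itself returns probabilities, not an index. A quick check with hypothetical raw outputs:

```python
import numpy as np

def softmax(z):
    exps = np.exp(z - np.max(z))
    return exps / exps.sum()

logits = np.array([2.0, 1.0, 0.1, -1.0])   # hypothetical raw outputs of the last layer
probs = softmax(logits)
predicted_class = int(np.argmax(probs))     # same index as np.argmax(logits)
```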

Evaluating Models

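We split our data into a training set and a test set, train on the training set, and then pass the test set to the model's evaluate function. It returns the loss followed by any metrics given to compile, so test_acc assumes the model was compiled with metrics=['accuracy']:

```python
test_loss, test_acc = model.evaluate(test_set, test_labels)
```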
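A minimal NumPy sketch of the split and of what an accuracy metric measures. The split_train_test and accuracy helpers, the 80/20 ratio, the seed and the dataset are all hypothetical, not part of the Keras API.

```python
import numpy as np

def split_train_test(features, labels, test_fraction=0.2, seed=0):
    # Shuffle the row indices, then hold out the first test_fraction of them
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(features))
    n_test = int(len(features) * test_fraction)
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return features[train_idx], labels[train_idx], features[test_idx], labels[test_idx]

def accuracy(predicted_labels, true_labels):
    # Fraction of examples whose predicted label matches the true label
    return np.mean(predicted_labels == true_labels)

# Hypothetical dataset: 100 samples with 4 features each
features = np.arange(400, dtype=float).reshape(100, 4)
labels = np.arange(100) % 10
train_x, train_y, test_x, test_y = split_train_test(features, labels)
```

Keeping the test rows out of training is what makes the test loss and accuracy a fair estimate of performance on unseen data.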

Predictions

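We can call predict on the model we've made. With a softmax output layer, each prediction is a list of 10 probabilities, one per class:

```python
model.predict(...)
```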
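Turning those probabilities into labels is an argmax over each row. A small sketch with made-up probabilities (not real model output) for a batch of two images:

```python
import numpy as np

# Hypothetical output of predict for 2 images, 10 class probabilities each
predictions = np.array([
    [0.01, 0.01, 0.02, 0.01, 0.01, 0.05, 0.01, 0.08, 0.02, 0.78],
    [0.70, 0.05, 0.05, 0.05, 0.05, 0.02, 0.04, 0.01, 0.02, 0.01],
])
predicted_labels = np.argmax(predictions, axis=1)   # most likely class per image
confidence = np.max(predictions, axis=1)            # probability assigned to that class
```

For Fashion-MNIST, label 9 in the first row would be mapped to "Ankle boot" for display.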

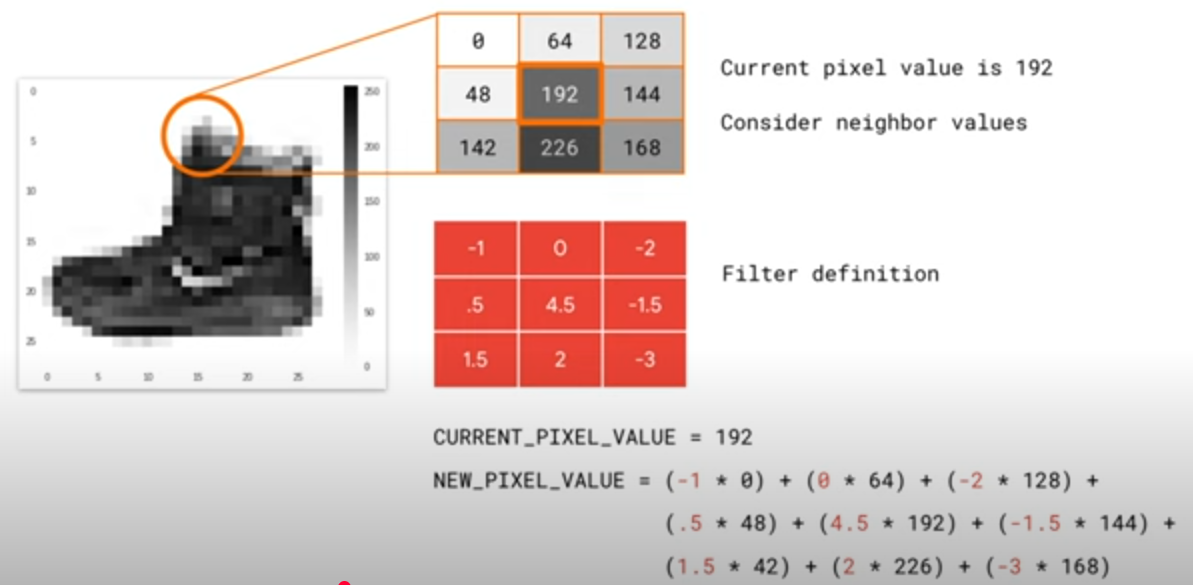

Convolutional Neural Networks

Used for extracting features from the input.

Filters are applied to the input images before they reach the dense layers of the network.
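A minimal NumPy sketch of applying one 3x3 filter to an image with no padding and stride 1. Like CNN libraries, it slides the filter without flipping it (technically cross-correlation). The edge-detecting filter and the tiny image are hypothetical; in a CNN the filter values are learnt.

```python
import numpy as np

def conv2d(image, kernel):
    """Slide kernel over image (no padding, stride 1) and sum the element-wise products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Hypothetical 5x5 image: dark on the left, bright on the right
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)
# Vertical edge filter: responds where brightness increases from left to right
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)
edges = conv2d(image, kernel)
```

Every row of the result is [3, 3, 0]: the filter fires where its window spans the dark-to-bright edge and gives 0 over the uniform region. A 28x28 input with a 3x3 filter gives a 26x26 output.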

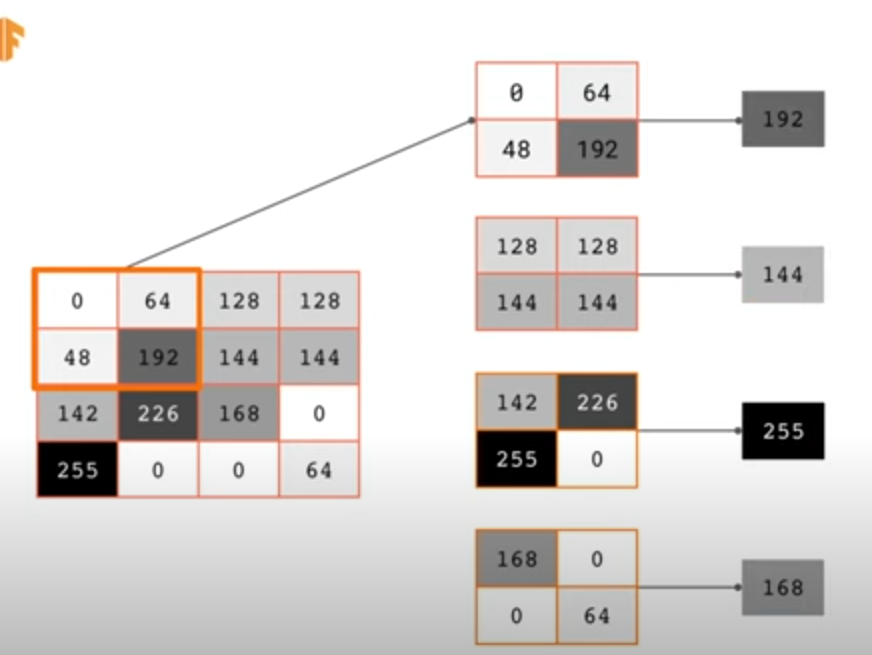

Pooling

Groups neighbouring elements of the input, e.g. 2x2 pooling splits the input into 2x2 blocks and reduces each block to a single value.

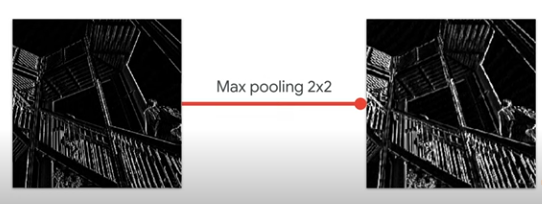

Max Pooling

Group elements of the input and select the max.

With 2x2 max pooling the input is reduced to 25% of its original size (half the width and half the height), while the strongest feature responses are kept.
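A NumPy sketch of non-overlapping 2x2 max pooling. It drops any leftover odd row or column, which is an assumption matching the no-padding behaviour of MaxPooling2D(2, 2).

```python
import numpy as np

def max_pool_2d(x, pool=2):
    """Non-overlapping max pooling; leftover rows/columns are dropped."""
    h, w = x.shape[0] // pool, x.shape[1] // pool
    trimmed = x[:h * pool, :w * pool]
    # Give each pool x pool block its own pair of axes, then take the max over them
    return trimmed.reshape(h, pool, w, pool).max(axis=(1, 3))

x = np.arange(16).reshape(4, 4)
pooled = max_pool_2d(x)
```

Here the 16 values become 4: each 2x2 block is replaced by its maximum, giving [[5, 7], [13, 15]].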

The convolution filters themselves are learnt as part of training the CNN.

Convolutional Layer

Input is fed into the convolutional layer. Randomly initialised filters are applied, and the results are passed to the next layer in the network.

Over time, training adjusts the filters so that the network's output best matches the labels, as measured by the loss function.

Conceptually, different filters are learning different features of your data.

```python
import tensorflow as tf
from tensorflow import keras

model = tf.keras.Sequential([
    # A convolutional layer with 64 3x3 filters that takes a 28x28 grayscale (1-channel) input
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu', input_shape=(28, 28, 1)),
    # A max pooling layer
    tf.keras.layers.MaxPooling2D(2, 2),
    # Flatten no longer specifies the input shape because the conv layer already does
    keras.layers.Flatten(),
    # f0..f127 functions
    keras.layers.Dense(128, activation=tf.nn.relu),
    # Output enum of 10 values
    keras.layers.Dense(10, activation=tf.nn.softmax)
])
```

Max pooling is used to compress the image while enhancing the features. It is included here as an example: given the kind of data we have, we expect max pooling to keep the features the model will find useful.

Stacking Convolutional Layers

We can stack convolutional layers to break features down further. For example, a very abstract feature like a circle can be broken down into sub-features, like the elements of a clock, a car wheel or a pizza.

```python
import tensorflow as tf
from tensorflow import keras

model = tf.keras.Sequential([
    # A convolutional layer with 64 3x3 filters that takes a 28x28 grayscale (1-channel) input
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu', input_shape=(28, 28, 1)),
    # A max pooling layer for feature enhancement
    tf.keras.layers.MaxPooling2D(2, 2),
    # Stacking a second convolutional layer for additional feature
    # extraction/breakdown into subfeatures
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    # Adding a second max pooling layer for enhancement of subfeatures
    tf.keras.layers.MaxPooling2D(2, 2),
    # Flatten no longer specifies the input shape because the first conv layer does
    keras.layers.Flatten(),
    # f0..f127 functions
    keras.layers.Dense(128, activation=tf.nn.relu),
    # Output enum of 10 values
    keras.layers.Dense(10, activation=tf.nn.softmax)
])
```

The dense layers now learn from the extracted features rather than from the raw pixel values.
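The effect of stacking on what reaches the dense layers can be worked out by hand. Assuming the Conv2D defaults (no padding, stride 1) and 2x2 pooling as in the models above, this sketch tracks the image size through each layer and counts the values that reach Flatten and the weights in the Dense(128) layer.

```python
def conv_out(size, kernel=3):
    # No padding, stride 1: the filter fits (size - kernel + 1) times
    return size - kernel + 1

def pool_out(size, pool=2):
    # Non-overlapping pooling drops any leftover row/column
    return size // pool

filters = 64

# One Conv2D + MaxPooling2D pair: 28 -> 26 -> 13
one = pool_out(conv_out(28))
flat_one = one * one * filters
dense_one = flat_one * 128 + 128          # weights + biases of Dense(128)

# Two stacked pairs: 28 -> 26 -> 13 -> 11 -> 5
two = pool_out(conv_out(pool_out(conv_out(28))))
flat_two = two * two * filters
dense_two = flat_two * 128 + 128
```

With one pair, Flatten outputs 10,816 values; with two stacked pairs it outputs 1,600, so the Dense(128) layer shrinks from 1,384,576 to 204,928 parameters.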

Process to Build an Image Classifier

- Create a training generator

- Create a validation generator

- Create the neural network

- Compile the model

- Fit the model by passing the training and validation generators to the fit function
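Conceptually, a generator hands the model shuffled batches of images and labels rather than the whole dataset at once. The sketch below is a hypothetical stand-in, not Keras's ImageDataGenerator: it assumes images already loaded into a NumPy array, rescales pixels from 0-255 to 0-1, and yields a single pass over the data. A validation generator would be the same thing over the validation images.

```python
import numpy as np

def batch_generator(images, labels, batch_size=32, seed=0):
    """Yield shuffled (images, labels) batches with pixels rescaled to 0-1."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(images))
    for start in range(0, len(images), batch_size):
        idx = order[start:start + batch_size]
        yield images[idx] / 255.0, labels[idx]

# Hypothetical data: 100 grayscale 28x28 images with integer labels 0-9
images = np.random.default_rng(1).integers(0, 256, size=(100, 28, 28))
labels = np.arange(100) % 10
batches = list(batch_generator(images, labels))
```

With 100 images and a batch size of 32 this yields four batches, the last one holding the remaining 4 images.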

Dropout

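Randomly throw away (set to 0) some of the neurons' outputs at each training step. The network may not need all the neurons to come up with the same result, and because it cannot rely on any particular neuron, dropout helps reduce overfitting. Dropout is only active during training; all neurons are used when making predictions.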

Regression with TF Workflow

- Clean data - remove unknown values

- Encode enums/categorical columns as numeric columns, e.g. using one-hot encoding

- Split the data into train and test sets

- Look at the empirical distribution of the input features/columns

- Normalize the columns using z-scores - you can use a normalization layer

- Build the model

- Specify any early stopping criteria to prevent overtraining
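The one-hot encoding step can be sketched in NumPy: each category becomes its own 0/1 column, so the model does not read a false ordering into category codes. The colour column and one_hot helper are hypothetical; pandas offers the same idea through pd.get_dummies.

```python
import numpy as np

def one_hot(column, categories):
    """Encode a categorical column as one 0/1 column per category."""
    index = {category: i for i, category in enumerate(categories)}
    encoded = np.zeros((len(column), len(categories)))
    for row, value in enumerate(column):
        encoded[row, index[value]] = 1.0
    return encoded

# Hypothetical categorical column with three possible values
colour = ["red", "green", "blue", "green"]
encoded = one_hot(colour, categories=["red", "green", "blue"])
```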

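Normalization layer (call adapt on the training features before use, so the layer learns each column's mean and variance):

```python
import tensorflow as tf
normalizer = tf.keras.layers.Normalization(axis=-1)
```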
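The z-score idea behind that layer, sketched in NumPy: compute each column's mean and standard deviation from the training data only, then rescale every column to mean 0 and standard deviation 1. The data and helper names are hypothetical, and unlike a library implementation this sketch does not guard against constant columns (zero standard deviation).

```python
import numpy as np

def fit_zscore(train_features):
    # Per-column statistics, computed from the training set only
    return train_features.mean(axis=0), train_features.std(axis=0)

def apply_zscore(features, mean, std):
    return (features - mean) / std

# Hypothetical training data: two columns on very different scales
train = np.array([[1.0, 100.0], [2.0, 200.0], [3.0, 300.0]])
mean, std = fit_zscore(train)
normalized = apply_zscore(train, mean, std)
```

After normalization both columns hold the same values, so neither dominates the gradients just because of its units. The same mean and std should be reused on the test set.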

Model Overfitting and Underfitting

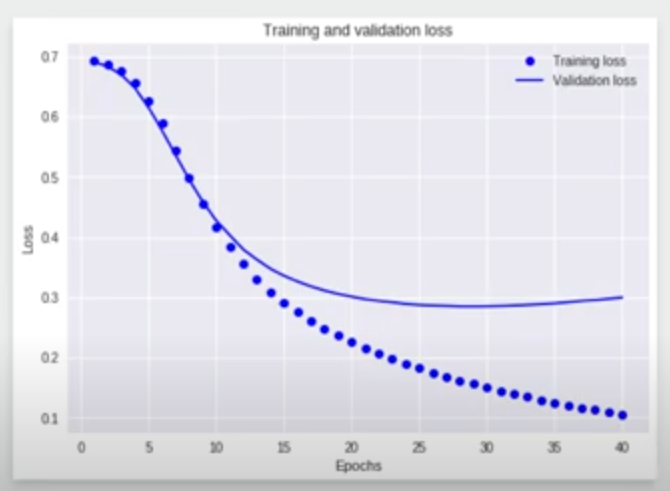

Early in training, the loss decreases on both the training and validation data.

When training loss continues to decrease whilst the validation loss stops decreasing, or starts to increase, then we are overfitting.

The model is becoming too specialized to the training data and does not generalize well to the test data set.

Underfitting is when the model isn't expressive enough to fit even the training data set, let alone the test data.
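Early stopping acts on exactly the overfitting signal above: stop once the validation loss has stopped improving. A plain-Python sketch of a patience-based rule on a hypothetical validation loss curve. Keras provides a callback for this, tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=...), which can also restore the best weights via restore_best_weights=True; the function below is only an illustration of the rule.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a patience-based early stopping rule.

    Training stops once the validation loss has failed to improve by more than
    min_delta for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Hypothetical validation losses: improving, then rising as the model overfits
val_losses = [1.0, 0.8, 0.6, 0.55, 0.56, 0.58, 0.60, 0.63]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)
```

Here the best validation loss is at epoch 3, and training stops at epoch 5 after two epochs without improvement.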

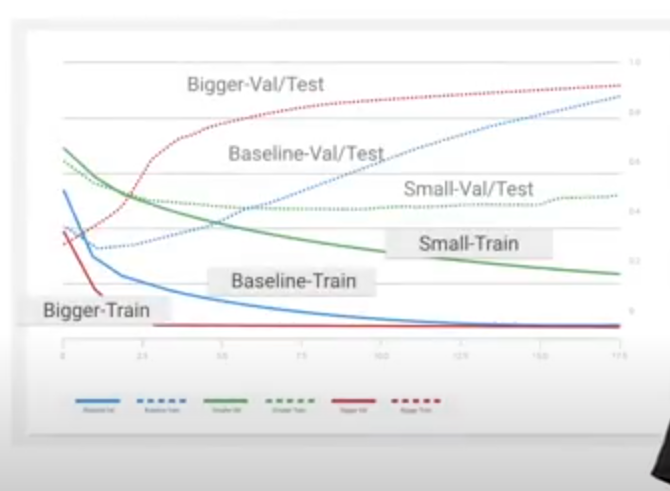

Creating model variants

- Small model - 2 dense layers with 4 neurons

- Baseline model - 2 dense layers with 16 neurons

- Bigger model - 2 dense layers with 512 neurons

If we compare the loss on the test data set, we see that the bigger and baseline models quickly show increasing loss, whilst the small model's loss keeps decreasing. Loss that rises as the model gets bigger is a sign of overfitting.

The more neurons, the greater the risk of overfitting (memorization): the model has enough expressive complexity to memorize the training data rather than learn patterns that generalize.

Too few neurons results in a model that is not expressive enough - underfitting.
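The difference in capacity between the variants is easy to quantify by counting trainable parameters (weights plus biases). This sketch assumes a hypothetical input of 28 features and a single output unit; neither number comes from the setup described above.

```python
def dense_params(n_inputs, n_units):
    # Each unit has one weight per input plus one bias
    return n_inputs * n_units + n_units

def variant_params(n_features, units, n_outputs=1):
    """Trainable parameters for Dense(units) -> Dense(units) -> Dense(n_outputs)."""
    return (dense_params(n_features, units)
            + dense_params(units, units)
            + dense_params(units, n_outputs))

# Hypothetical input with 28 features
sizes = {name: variant_params(28, units)
         for name, units in [("small", 4), ("baseline", 16), ("bigger", 512)]}
```

Under these assumptions the small model has 141 parameters, the baseline 753 and the bigger model 278,017, mostly from the 512x512 connection between its two hidden layers.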

Regularization

Discourage learning an overly complex model by adding a penalty to the loss that shrinks the coefficient estimates (weights) towards 0.

Regularization in Machine Learning, Prashant Gupta, Towards Data Science

You apply a regularizer to a layer using the kernel_regularizer param, e.g. one of keras.regularizers.l1, l2 or l1_l2:

```python
from tensorflow import keras
keras.layers.Dense(..., kernel_regularizer=keras.regularizers.l2(...))
```
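A NumPy sketch of an L2 penalty of the form l2 * sum(w ** 2), added to the ordinary loss. The weights, the data loss of 1.0 and the learning rate are hypothetical. Because the penalty's gradient is 2 * l2 * w, each gradient step on it pulls every weight proportionally towards 0.

```python
import numpy as np

def l2_penalty(weights, l2=0.01):
    # Penalty grows with the squared size of the weights
    return l2 * np.sum(np.square(weights))

def total_loss(data_loss, weights, l2=0.01):
    # The penalty is added to the ordinary loss, so large weights cost more
    return data_loss + l2_penalty(weights, l2)

weights = np.array([1.0, -2.0, 3.0])
penalty = l2_penalty(weights)            # 0.01 * (1 + 4 + 9)

# One gradient step on the penalty alone: w -= lr * 2 * l2 * w
learning_rate = 0.1
shrunk = weights - learning_rate * 2 * 0.01 * weights
```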

Dropout

Each unit's output in the layer has a given probability of being set to 0 during training.

```python
from tensorflow import keras

# 50% probability of dropout
keras.layers.Dropout(0.5)
```
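A NumPy sketch of inverted dropout, the variant Keras's Dropout layer documents: during training each unit is zeroed with probability rate and the survivors are scaled by 1 / (1 - rate) so the expected sum is unchanged; at inference the input passes through untouched. The layer output and seed are hypothetical.

```python
import numpy as np

def dropout(activations, rate, training, rng):
    """Inverted dropout: zero units with probability `rate` during training only."""
    if not training or rate == 0.0:
        return activations
    keep_mask = rng.random(activations.shape) >= rate
    # Scale the kept units up so the expected total activation stays the same
    return activations * keep_mask / (1.0 - rate)

rng = np.random.default_rng(42)
layer_output = np.ones(1000)
train_out = dropout(layer_output, rate=0.5, training=True, rng=rng)
infer_out = dropout(layer_output, rate=0.5, training=False, rng=rng)
```

With rate 0.5, roughly half of the training outputs are 0 and the rest are 2.0; at inference every output is unchanged.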

Links and References

ML Zero to Hero Playlist, YouTube

Regression Using Keras, YouTube

Getting Started with Tensorflow for Regression, YouTube

Solve your model's overfitting and underfitting problem, YouTube