GKE/K8s Foundations

Some notes I took whilst doing the "Architecting with Google Kubernetes Engine: Foundations" course.

Architecting with GKE

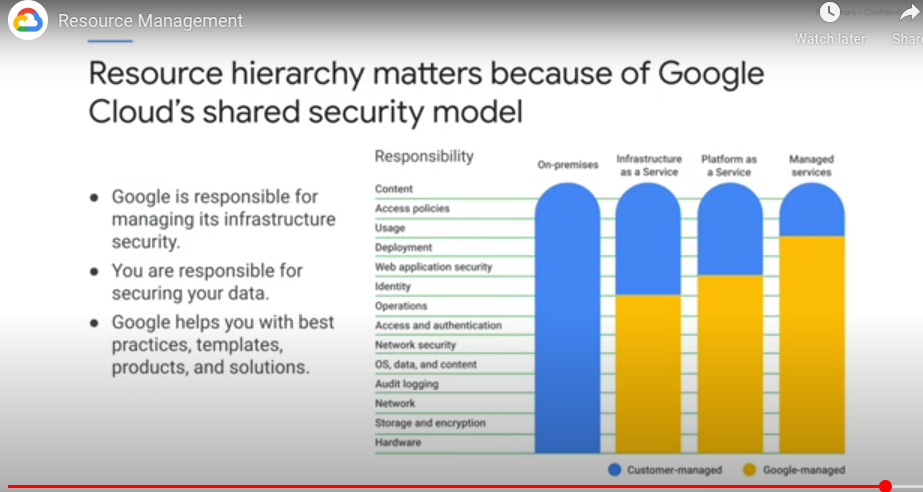

Resource Management

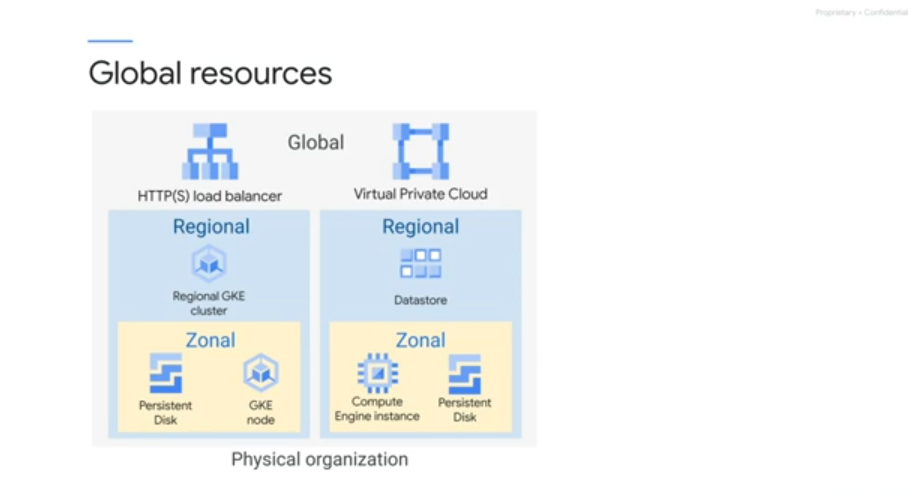

Regional resources - span multiple zones

Global resources - span multiple regions, e.g. HTTPS load balancers

Zones and regions - physical organisation of resources

Projects - logical organisation of resources

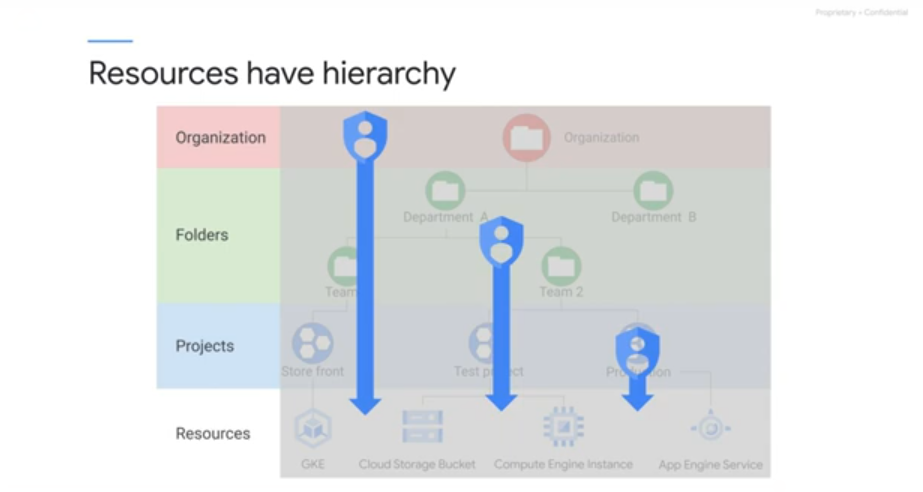

A project's ID and number are fixed, but its labels can be changed. Projects can be grouped into folders.

Folders should reflect the enterprise hierarchy.

IAM and access policies are inherited downwards through the hierarchy: organisation, folders, projects, resources.

Billing accumulates at the project level.

Billing

A billing account is linked to one or more projects.

Intro to Containers and K8s

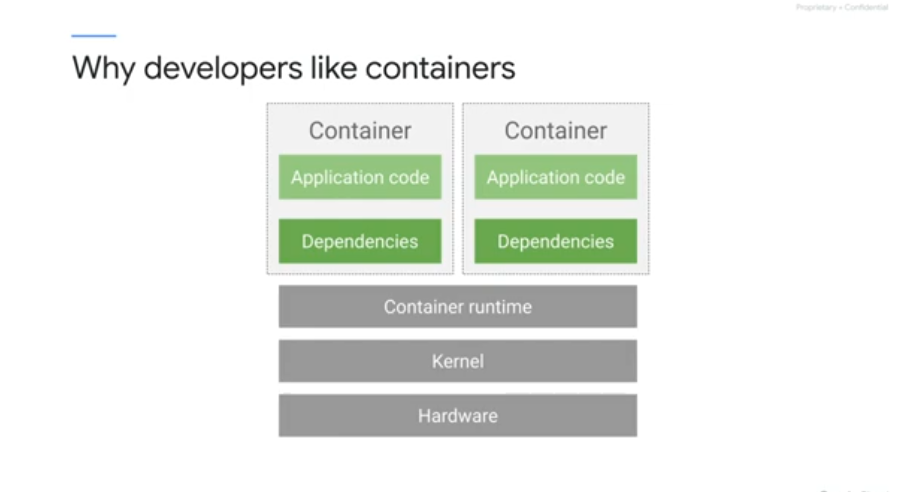

Each Linux process has its own virtual memory space. Containers build on three Linux features:

- Namespaces control what an application can see, including PIDs, IP addresses, etc.

- cgroups control what an application can use: maximum CPU consumption, memory, I/O bandwidth, etc.

- Union file systems encapsulate applications and their dependencies into a set of minimal layers.

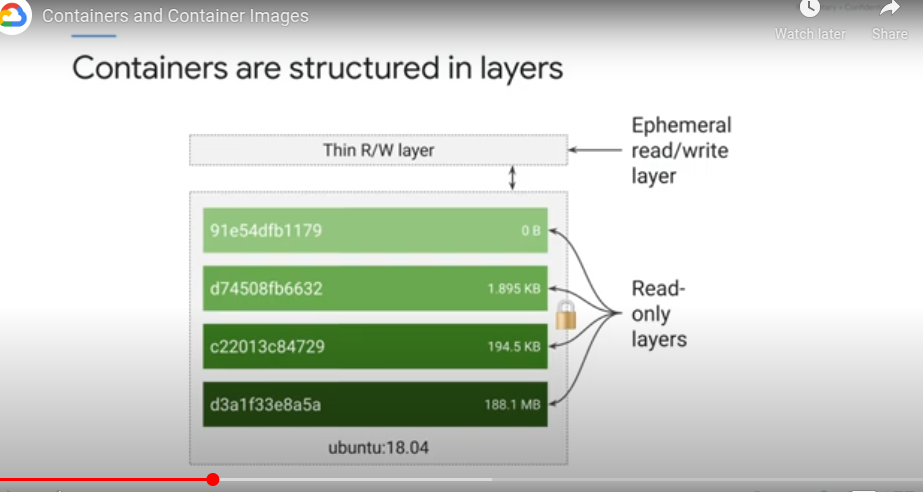

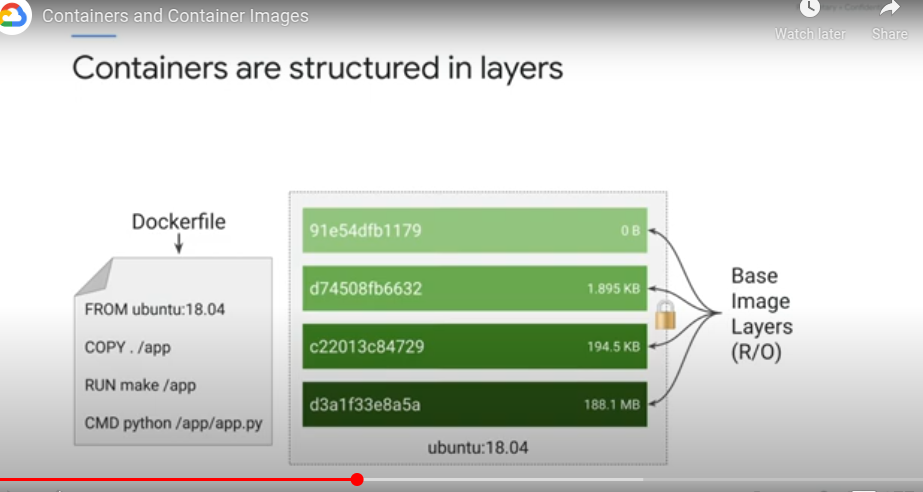

A container image is structured in layers.

The image build tool reads its instructions from the container manifest; for Docker-formatted images this is the Dockerfile. Each instruction in the Dockerfile specifies a layer in the container image. Each layer is read-only; a container running from the image gets a writable, ephemeral topmost layer.

FROM ubuntu - the base image layer from a public repo

COPY - adds a new layer containing files copied in from your build directory

RUN - builds the application, puts the result of the build into a third layer

CMD - the final instruction: the command to run within the container when it starts (it records image metadata rather than adding files)

Each layer is a set of differences from the layer before it.

Multi-stage build process - one stage (container) builds the application, and a separate final image receives only what is needed to run it, keeping the runtime image small.

Container layer - the ephemeral, writable layer each running container gets on top of the read-only image layers

Multiple containers can share an image, but have their own state in their own container layer.

Container updates and changes are stored as diffs, which saves space and improves efficiency.

Artifact Registry - where to store container images

Cloud Build - managed container-building service. Each build step runs in a Docker container; Cloud Build can then deliver the built image to execution environments such as GKE.

K8s Architecture

K8S Object Model

Everything Kubernetes manages is represented by an object (resources, policies, etc.). You can view and change the state of these objects.

Declarative management - you declare the state you want the managed objects to be in, and Kubernetes works to make the current state match it.

It does this via a watch loop.

A Kubernetes object is a persistent entity that represents the state of something running in the cluster: both its desired state and its current state.

Object spec - the desired state of the object

Object status - the current state of the object, provided by the Kubernetes control plane

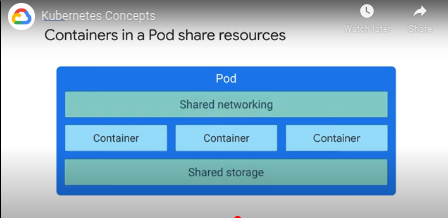

Pods - the basic building block of the Kubernetes model and the smallest deployable object.

Most commonly there is one container per pod. A pod embodies the environment the container lives in and can accommodate one or more containers; putting multiple containers in one pod implies they are tightly coupled and share resources (storage and network).

Each pod has its own IP address, and every container in that pod shares the pod's network namespace (IP address and network ports).

Containers in the same pod can communicate through localhost.

You can specify storage volumes to be shared across the containers in the pod.
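A minimal sketch of a two-container pod sharing a volume, assuming hypothetical names (`shared-data-pod`, `web`, `writer`, `shared-html`) and the public `nginx` and `busybox` images. Both containers mount the same `emptyDir` volume; because they also share the pod's network namespace, `writer` could equally reach `web` on `localhost:80`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: shared-data-pod            # hypothetical name
spec:
  volumes:
    - name: shared-html            # scratch volume that lives as long as the pod
      emptyDir: {}
  containers:
    - name: web
      image: nginx
      ports:
        - containerPort: 80
      volumeMounts:
        - name: shared-html
          mountPath: /usr/share/nginx/html   # nginx serves what "writer" produces
    - name: writer
      image: busybox
      # Overwrites index.html in the shared volume every 10 seconds.
      command: ["sh", "-c", "while true; do date > /data/index.html; sleep 10; done"]
      volumeMounts:
        - name: shared-html
          mountPath: /data
```

An `emptyDir` volume is deleted together with the pod, which fits the point that pods are ephemeral.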

Pods are not self-healing.

The control plane monitors current state vs desired state, remedying the delta as needed.

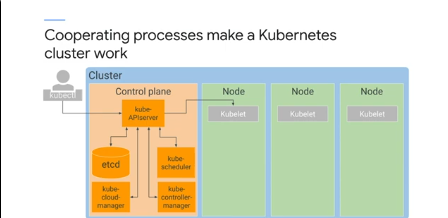

K8S Control Plane

The computers that compose your cluster are usually VMs (cloud compute).

One machine is the control plane; the rest are nodes.

The control plane coordinates the cluster.

kube-apiserver - accepts commands to view or change the state of the cluster, including launching pods

kubectl - the command-line client that connects to the API server

kube-apiserver also handles authentication, authorization and admission control.

Any query or change to the cluster's state must be addressed to kube-apiserver.

etcd - the cluster's database. It stores configuration data and dynamic data, such as which nodes are in the cluster and which pods should be running, and where.

kube-scheduler - schedules pods onto nodes by evaluating the requirements of each pod and selecting the best node. When it finds a pod that hasn't been assigned a node, it chooses one and writes that node's name into the pod object, meaning the pod should run on that node.

It knows the state of all nodes and obeys the constraints defined on where a pod can run, based on hardware, software and policy, e.g. a certain pod can only run on nodes with enough memory.

You can also give affinity specs (where you want pods to run) and anti-affinity specs (where you don't want them to run).
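A minimal sketch of the scheduling constraints above, assuming a hypothetical node label `disktype=ssd` and a hypothetical app name `memory-hungry-app`. The memory request limits which nodes qualify, node affinity is a hard rule, and pod anti-affinity is a soft preference to spread replicas across nodes.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: memory-hungry-app          # hypothetical
  labels:
    app: memory-hungry-app
spec:
  affinity:
    nodeAffinity:
      # Hard rule: only schedule onto nodes labeled disktype=ssd (hypothetical label).
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: disktype
                operator: In
                values: ["ssd"]
    podAntiAffinity:
      # Soft rule: prefer not to share a node with another pod of the same app.
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 100
          podAffinityTerm:
            labelSelector:
              matchLabels:
                app: memory-hungry-app
            topologyKey: kubernetes.io/hostname
  containers:
    - name: app
      image: nginx
      resources:
        requests:
          # The scheduler only considers nodes with at least this much
          # allocatable memory not already requested by other pods.
          memory: "2Gi"
          cpu: "500m"
```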

Kube-controller-manager

Monitors the state of the cluster via the API server - detects diff between desired and current state, takes action to remedy.

Controllers manage workloads through watch loops.

A deployment is a controller object.

Other controllers include node controllers, which monitor and respond when nodes go offline. kube-cloud-manager manages controllers that interact with the underlying cloud provider.

Each node runs a small set of control plane components, including a kubelet. This is an agent: when the API server wants to start a pod on a node, it does so via that node's kubelet.

Kubelets also monitor the pod lifecycle, including readiness and liveness probes, and report back to the API server.
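A minimal sketch of the readiness and liveness probes the kubelet runs, assuming a hypothetical pod `probed-web` running `nginx` on port 80; the timing values are illustrative.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probed-web                 # hypothetical
spec:
  containers:
    - name: web
      image: nginx
      ports:
        - containerPort: 80
      # Readiness: until this succeeds, the pod receives no Service traffic.
      readinessProbe:
        httpGet:
          path: /
          port: 80
        initialDelaySeconds: 5
        periodSeconds: 10
      # Liveness: if this keeps failing, the kubelet restarts the container.
      livenessProbe:
        tcpSocket:
          port: 80
        initialDelaySeconds: 15
        periodSeconds: 20
```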

Container runtime - the software that actually runs the containers, e.g. containerd (the runtime Docker itself is built on)

Kube-proxy

Maintains network connectivity among the pods in the cluster. In open-source Kubernetes, it typically does this by programming iptables rules in the kernel.

GKE Concepts

Initial cluster setup can be handled by kubeadm in an automated manner.

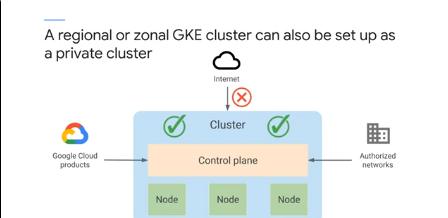

GKE has two modes: Autopilot and Standard. Autopilot manages the entire infrastructure, including the control plane, nodes and node pools. It is a managed service: all operational aspects of the cluster are monitored, including the control plane, worker nodes and core Kubernetes components, giving you a Kubernetes cluster managed and optimized by Google SRE.

GKE provisions and manages all the control plane infrastructure, so there is no separate control plane for you to run.

Node configuration and management depend on the GKE mode. In Standard mode, you manage and configure the individual nodes.

Nodes are created externally by cluster administrators, not by Kubernetes itself. GKE automates this by launching Compute Engine VMs and registering them as nodes, i.e. GKE integrates with Compute Engine. You choose the machine type when you create the cluster, and you pay for the lifetime of your nodes.

If you specify a minimum CPU platform, GKE may pick a newer platform than the one you specified; if it does, the cost won't change.

Node pool - a subset of nodes that share a configuration, e.g. memory and CPU. Node pools are an easy way to make sure workloads run on the right hardware, by targeting the desired node pool. This is a GKE feature, not a core Kubernetes feature.
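A minimal sketch of pinning a pod to a node pool. GKE labels each node with the name of its node pool under `cloud.google.com/gke-nodepool`; the pool name `high-mem-pool` and the pod itself are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: high-mem-job               # hypothetical
spec:
  # Only nodes in the (hypothetical) high-mem-pool node pool are eligible.
  nodeSelector:
    cloud.google.com/gke-nodepool: high-mem-pool
  containers:
    - name: app
      image: busybox
      command: ["sh", "-c", "echo running on high-mem-pool; sleep 3600"]
```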

You can also enable auto updates, auto repair and cluster autoscaling at the node pool level.

Some of each node's resources are reserved to run GKE and Kubernetes components, so not all of a node's capacity is available to your workloads.

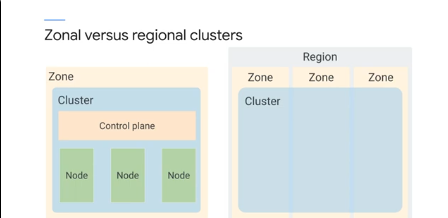

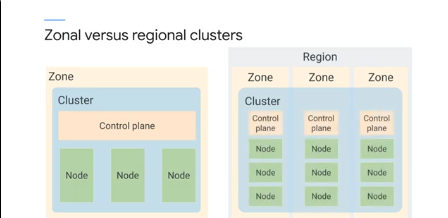

Regional clusters have a single API endpoint, but their control planes and nodes are spread across multiple zones within a region. This maintains high availability within the region: the control plane is replicated across the zones, so the cluster tolerates the failure of a zone.

By default, a regional cluster spans 3 zones, each containing 1 control plane and 3 nodes (3 control planes and 9 nodes in total).

Kubernetes Object Management

Every Kubernetes object has a name and a unique ID.

You define objects in manifest files, usually written in YAML. In the manifest you describe the object's desired state, e.g. for a pod, its name and container image.

apiVersion - which Kubernetes API version is used to create the object

kind - the kind of object, e.g. a Pod

metadata - helps identify the object using its name, UID and namespace

You can define several related objects in the same YAML file.
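A minimal sketch of a manifest file holding two related objects, separated by `---`. The names `hello-pod` and `hello-svc` and the label `app: hello` are hypothetical; the Service finds the pod through that label.

```yaml
# First object: a Pod ("v1" is the core API group).
apiVersion: v1
kind: Pod
metadata:
  name: hello-pod                  # must be unique among Pods in its namespace
  labels:
    app: hello
spec:                              # desired state
  containers:
    - name: hello
      image: nginx
---
# Second, related object in the same file: a Service that targets the Pod by label.
apiVersion: v1
kind: Service
metadata:
  name: hello-svc
spec:
  selector:
    app: hello
  ports:
    - port: 80
      targetPort: 80
```

Applying the file once (e.g. `kubectl apply -f hello.yaml`) creates both objects.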

Kubernetes objects are named, and a name must be unique among objects of the same kind within a namespace. Names can be up to 253 characters long.

Kubernetes also generates its own unique ID (UID) for every object; no two objects have the same UID during the lifetime of a cluster.

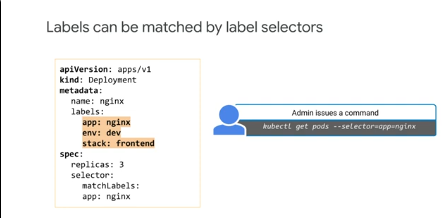

Labels - key value pairs with which you tag objects during or after creation, used to organize objects and group them into subsets.

e.g. create a label called app whose value is the application the object is part of.

A Deployment object can be labeled with its application, its environment and the stack it is part of.

Various contexts offer ways to select a subset of resources by their labels.

You use label selectors to find resources.
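A minimal sketch of labels and the selectors that match them, assuming a hypothetical pod `nginx-dev` labeled with its application, environment and stack. The trailing comments show equality-based, set-based and existence selectors with `kubectl -l`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-dev                  # hypothetical
  labels:
    app: nginx                     # which application the object belongs to
    environment: dev               # which environment
    stack: frontend                # which stack
spec:
  containers:
    - name: nginx
      image: nginx
# Selectors that would match this pod:
#   equality-based: kubectl get pods -l app=nginx,environment=dev
#   set-based:      kubectl get pods -l 'environment in (dev,test)'
#   existence:      kubectl get pods -l stack
```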

The default scheduling algorithm prefers to spread workloads evenly across the nodes available to it.

Pods don't heal or repair themselves; they are designed to be ephemeral and disposable. There are better ways to run them than specifying individual pods.

Instead of declaring individual pods, you can use a controller object that manages the state of the pods.

Deployments are for long-lived components, e.g. web servers, managed as a group.

When the kube-scheduler schedules pods for a Deployment, it notifies the kube-apiserver. Controllers, in particular the Deployment controller, watch for these changes. The Deployment controller detects any difference between the desired and actual state and tries to fix it, e.g. by launching another pod.

You use a single Deployment YAML file to launch replicas of the same container. Within its spec you specify how many replicas to run, how they should run, and which containers and volumes they use.

When you specify a pod, you can specify its resource usage, typically CPU and memory (RAM).
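A minimal sketch of a Deployment that runs three replicas and declares CPU and memory requests and limits. The name `web-deployment`, the label `app: web` and the resource values are hypothetical.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-deployment             # hypothetical
spec:
  replicas: 3                      # desired number of identical pods
  selector:
    matchLabels:
      app: web                     # must match the pod template's labels
  template:                        # the pod spec every replica is created from
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx
          ports:
            - containerPort: 80
          resources:
            requests:              # what the scheduler reserves on a node
              cpu: "250m"
              memory: "128Mi"
            limits:                # hard ceiling enforced at runtime
              cpu: "500m"
              memory: "256Mi"
```

If one of the three pods is deleted, the Deployment's controllers notice the gap between desired and actual state and create a replacement.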

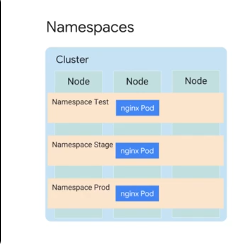

Namespaces let you abstract a single physical cluster into multiple virtual clusters; they provide a scope for naming resources such as pods, deployments and controllers.

You cannot duplicate object names within the same namespace. Namespaces also let you implement resource quotas, i.e. limits on resource consumption within a namespace.

You can spin up a new deployment as a test in a new namespace.

- default namespace - objects with no namespace defined

- kube-system namespace - objects created by Kubernetes itself

- kube-public namespace - objects that are publicly readable, for disseminating information across the cluster

You specify the namespace either in the YAML or on the command line when creating the resource. Doing it on the command line is better, as it keeps your YAML config more flexible and reusable.
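A minimal sketch of a test namespace with a resource quota, assuming a hypothetical namespace `test` and illustrative limits. The workload YAML itself carries no namespace; it is chosen at apply time, as the closing comment shows.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: test                       # hypothetical namespace for trying out a deployment
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: test-quota
  namespace: test                  # the quota applies only inside this namespace
spec:
  hard:
    pods: "10"
    requests.cpu: "2"
    requests.memory: 4Gi
    limits.cpu: "4"
    limits.memory: 8Gi
# Keeping the namespace out of the workload's own YAML lets the same file be
# applied anywhere, e.g.:
#   kubectl apply -f deployment.yaml --namespace=test
```

Once a quota covers CPU and memory, pods in that namespace that don't set requests and limits are rejected unless a LimitRange supplies defaults.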

Replica sets

You will work with Deployment objects directly much more often than ReplicaSet objects, but it's still helpful to know about ReplicaSets so that you can better understand how Deployments work. For example, one capability of a Deployment is to allow a rolling upgrade of the Pods it manages. To perform the upgrade, the Deployment object creates a second ReplicaSet object, then increases the number of (upgraded) Pods in the second ReplicaSet while it decreases the number in the first ReplicaSet.
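A minimal sketch of the part of a Deployment spec that controls how a rolling upgrade swaps pods between the old and new ReplicaSets. This is a fragment that sits alongside `selector` and `template`; the values are illustrative.

```yaml
# Fragment of a Deployment spec (goes alongside selector and template).
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1          # at most 1 pod above the desired count during the upgrade
      maxUnavailable: 0    # never drop below the desired count of available pods
```

For a hypothetical Deployment `web-deployment` with a container `web`, changing the image (e.g. `kubectl set image deployment/web-deployment web=nginx:1.25`) creates the second ReplicaSet, and `kubectl rollout undo deployment/web-deployment` scales the old one back up.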

Services

Services provide a stable, load-balanced endpoint for a specified set of pods.

- ClusterIP - exposes the Service on an internal IP address only

- NodePort - exposes the Service on the IP of each node in the cluster at a specified port

- LoadBalancer - exposes the Service externally via a cloud provider's load balancer

In GKE, a LoadBalancer Service gives you a regional network load balancer. For a global HTTP(S) load balancer, you need to use an Ingress.
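A minimal sketch of a Service of type LoadBalancer, assuming hypothetical backing pods labeled `app: web` whose container listens on port 8080. Switching `type` to `ClusterIP` (the default) or `NodePort` gives the other two exposure modes.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web-lb                     # hypothetical
spec:
  type: LoadBalancer               # or ClusterIP (default) / NodePort
  selector:
    app: web                       # traffic goes to pods carrying this label
  ports:
    - protocol: TCP
      port: 80                     # port exposed by the Service / load balancer
      targetPort: 8080             # port the container listens on (hypothetical)
```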

Controller Objects to Know

- ReplicaSet controller - ensures a set of identical pods is running. Deployments allow for updates to ReplicaSets and pods.

- Deployment controller - creates, updates, rolls back and scales pods. Uses ReplicaSets behind the scenes to do things like rolling upgrades.

- StatefulSet - for deploying apps that maintain local state. Pods created by a StatefulSet have unique persistent identities, stable network identities and persistent disk storage.

- DaemonSet - runs certain pods on all nodes or on a subset of nodes, automatically setting up pods with the desired spec on newly added nodes, e.g. to make sure a logging agent is running on every node in the cluster.

- Job controller - creates pods for the lifetime of a task, then terminates them. CronJob is a related controller that runs Jobs on a schedule.
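A minimal sketch of two of the controllers above: a DaemonSet that runs a log agent on every node, and a CronJob that runs a Job nightly. All names are hypothetical, and `fluent/fluent-bit` is an assumed choice of logging agent image.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: log-agent                  # hypothetical
spec:
  selector:
    matchLabels:
      app: log-agent
  template:
    metadata:
      labels:
        app: log-agent
    spec:
      containers:
        - name: agent
          image: fluent/fluent-bit # assumed logging agent image
          volumeMounts:
            - name: varlog
              mountPath: /var/log
              readOnly: true
      volumes:
        - name: varlog
          hostPath:
            path: /var/log         # each node's own log directory
---
apiVersion: batch/v1
kind: CronJob
metadata:
  name: nightly-report             # hypothetical
spec:
  schedule: "0 2 * * *"            # 02:00 every day (cron syntax)
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure # Job pods may not use the default "Always"
          containers:
            - name: report
              image: busybox
              command: ["sh", "-c", "echo generating report"]
```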

Migrate for Anthos

Tool for containerising existing workloads and moving them into the cloud. The process is automated.

Migrate for Compute Engine - creates a pipeline for moving data from on-premises into the cloud.

Migrate for Anthos is installed on a GKE cluster set up specifically for processing the migration. Migrate for Anthos then generates deployment artifacts, e.g. Kubernetes configs and Dockerfiles. You apply the generated config to the target cluster.

You can migrate from VMware, AWS and Azure.

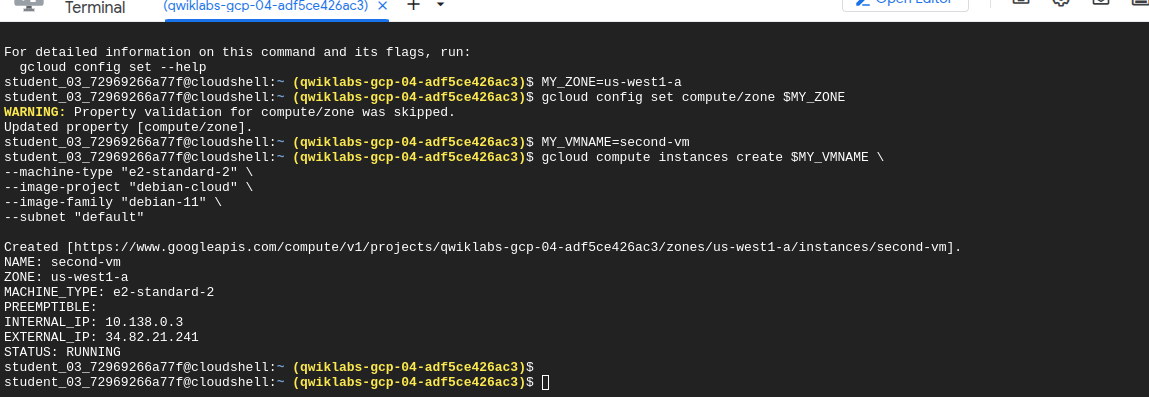

Lab Notes

List zones in your region

```shell
gcloud compute zones list | grep $MY_REGION
```

Set the default zone

```shell
gcloud config set compute/zone $MY_ZONE
```

Create a VM from the command line

```shell
gcloud compute instances create $MY_VMNAME \
  --machine-type "e2-standard-2" \
  --image-project "debian-cloud" \
  --image-family "debian-11" \
  --subnet "default"
```

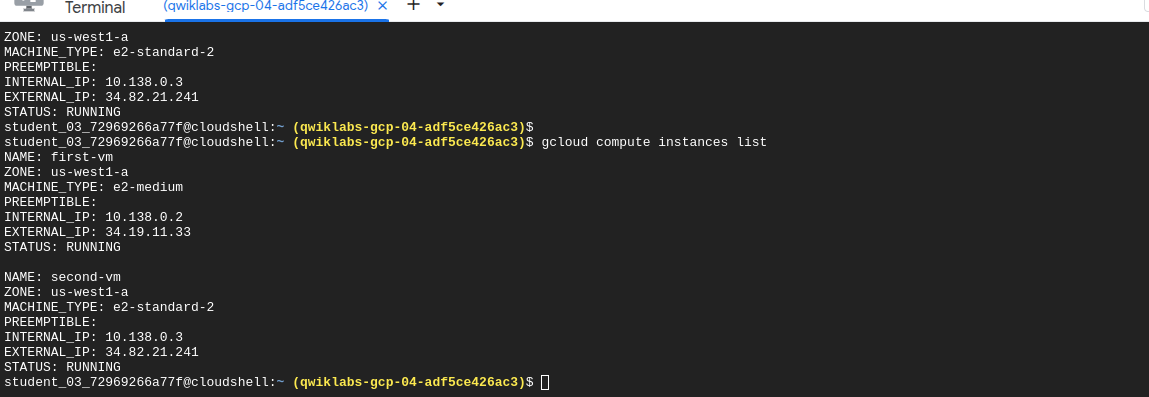

List compute instances

```shell
gcloud compute instances list
```

Create a service account

```shell
gcloud iam service-accounts create test-service-account2 --display-name "test-service-account2"
```

Grant the service account the Viewer role

```shell
gcloud projects add-iam-policy-binding $GOOGLE_CLOUD_PROJECT \
  --member serviceAccount:test-service-account2@${GOOGLE_CLOUD_PROJECT}.iam.gserviceaccount.com \
  --role roles/viewer
```

Get the default access control list (ACL) assigned to a file and save it to acl.txt

```shell
gsutil acl get gs://$MY_BUCKET_NAME_1/cat.jpg > acl.txt
```

Make a file private

```shell
gsutil acl set private gs://$MY_BUCKET_NAME_1/cat.jpg
```

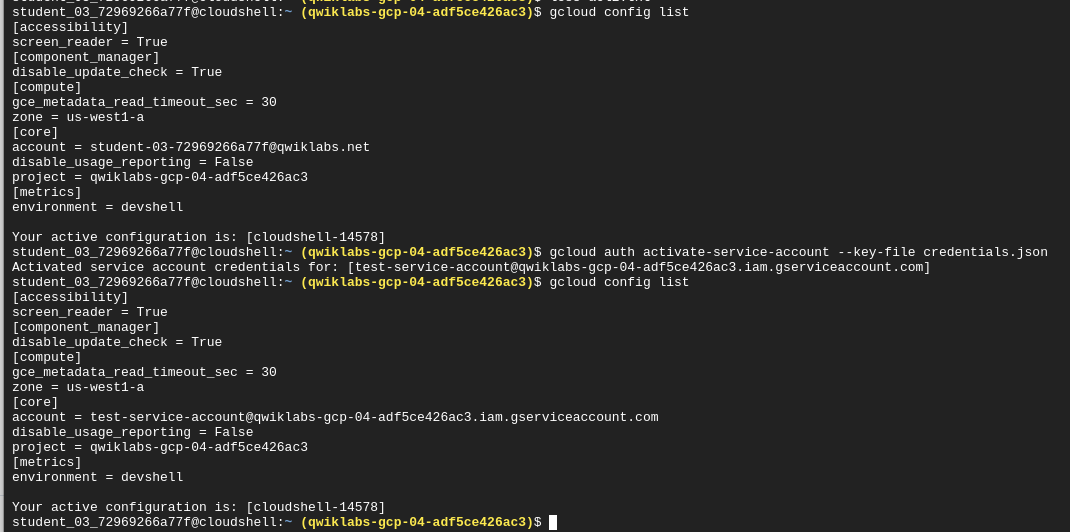

View the current config

```shell
gcloud config list
```

Authenticate as a service account using its key file

```shell
gcloud auth activate-service-account --key-file credentials.json
```

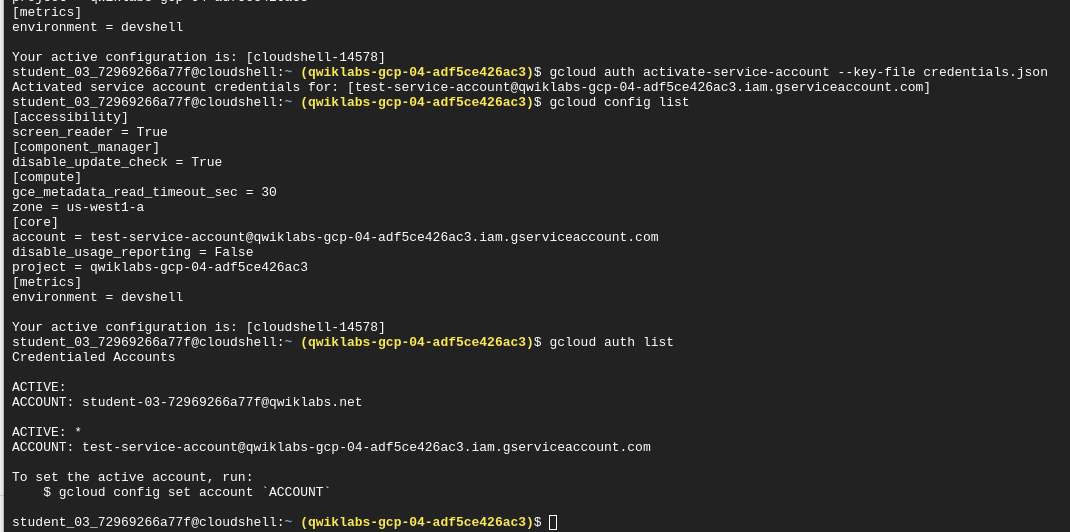

Check which account is active

```shell
gcloud auth list
```

Set the account

```shell
gcloud config set account student-03-72969266a77f@qwiklabs.net
```

Make a bucket readable by everyone, including unauthenticated users

```shell
gsutil iam ch allUsers:objectViewer gs://$MY_BUCKET_NAME_1
```

Copy a file from Cloud Shell to a VM with SCP

```shell
gcloud compute scp index.html first-vm:index.nginx-debian.html --zone=us-west1-a
```

Create a Docker repository in Artifact Registry

```shell
gcloud artifacts repositories create quickstart-docker-repo \
  --repository-format=docker \
  --location=us-east1 \
  --description="Docker repository"
```

Build and tag an image, pushing it to the registry

```shell
gcloud builds submit --tag us-east1-docker.pkg.dev/${DEVSHELL_PROJECT_ID}/quickstart-docker-repo/quickstart-image:tag1
```

A custom build config file that builds an image and pushes it to the registry, cloudbuild.yaml:

```yaml
# Replace YourRegionHere with your repository's region, e.g. us-east1.
steps:
  - name: 'gcr.io/cloud-builders/docker'
    args: [ 'build', '-t', 'YourRegionHere-docker.pkg.dev/$PROJECT_ID/quickstart-docker-repo/quickstart-image:tag1', '.' ]
images:
  - 'YourRegionHere-docker.pkg.dev/$PROJECT_ID/quickstart-docker-repo/quickstart-image:tag1'
```

Submit the custom build config to Cloud Build

```shell
gcloud builds submit --config cloudbuild.yaml
```

The true power of custom build configuration files is their ability to perform other actions, in parallel or in sequence, in addition to simply building containers: running tests on your newly built containers, pushing them to various destinations, and even deploying them to Kubernetes Engine.
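A minimal sketch of such a multi-step config: build, test by running the new image, push, then deploy to GKE. The cluster name `my-cluster`, zone `us-east1-b` and manifest path `k8s/deployment.yaml` are hypothetical; it also assumes the image exits non-zero when its check fails and that the Cloud Build service account is allowed to deploy to the cluster.

```yaml
steps:
  # 1. Build the image.
  - name: 'gcr.io/cloud-builders/docker'
    args: ['build', '-t', 'us-east1-docker.pkg.dev/$PROJECT_ID/quickstart-docker-repo/quickstart-image:tag1', '.']
  # 2. Test: run the freshly built image as a step; a non-zero exit fails the build.
  - name: 'us-east1-docker.pkg.dev/$PROJECT_ID/quickstart-docker-repo/quickstart-image:tag1'
  # 3. Push explicitly, because images listed under "images:" are only pushed
  #    after all steps have finished.
  - name: 'gcr.io/cloud-builders/docker'
    args: ['push', 'us-east1-docker.pkg.dev/$PROJECT_ID/quickstart-docker-repo/quickstart-image:tag1']
  # 4. Deploy to GKE (hypothetical cluster, zone and manifest path).
  - name: 'gcr.io/cloud-builders/kubectl'
    args: ['apply', '-f', 'k8s/deployment.yaml']
    env:
      - 'CLOUDSDK_COMPUTE_ZONE=us-east1-b'
      - 'CLOUDSDK_CONTAINER_CLUSTER=my-cluster'
```

Steps run in sequence by default, so a failing test in step 2 stops the build before anything is pushed or deployed.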

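A custom build config that builds the image and then runs it as a build step

```yaml
steps:
  - name: 'gcr.io/cloud-builders/docker'
    args: [ 'build', '-t', 'us-east1-docker.pkg.dev/$PROJECT_ID/quickstart-docker-repo/quickstart-image:tag1', '.' ]
  # Run the image built in the previous step, passing it the argument 'fail'.
  # The step name must match the tag built above.
  - name: 'us-east1-docker.pkg.dev/$PROJECT_ID/quickstart-docker-repo/quickstart-image:tag1'
    args: ['fail']
images:
  - 'us-east1-docker.pkg.dev/$PROJECT_ID/quickstart-docker-repo/quickstart-image:tag1'
```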