GKE/K8s Workloads

Notes I took whilst doing the "Architecting with Google Kubernetes Engine: Workloads" course. Covers deploying, networking and storage.

Architecting with GKE Workloads

Deployments and Jobs

Workloads - applications running on the cluster, packaged as containers in pods. The workload concept streamlines management of both long-running apps and batch jobs.

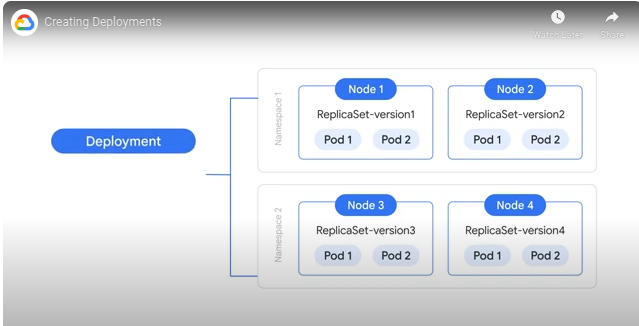

Creating Deployments

Pods are the smallest deployable unit - one or more tightly coupled containers, together with shared resources like storage and networking.

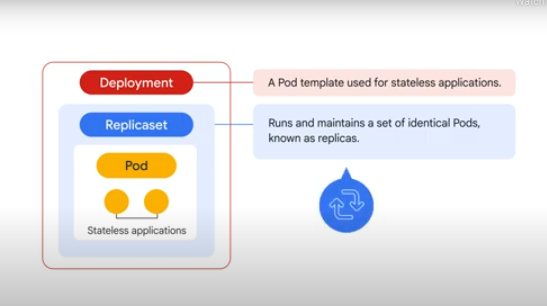

A deployment is a k8s resource that describes the desired state of pods.

Deployments are managed by a controller, which monitors the actual state and corrects any delta from the desired state.

Desired state is described in the deployment specification file - this gets submitted to the control plane, where the deployment controller picks it up.

During this process the deployment creates and configures a ReplicaSet. The ReplicaSet controller creates and maintains the pod replicas as specified by the deployment.

Info in a deployment file

Written in YAML. Includes the API version, the kind (Deployment is a kind), the number of pod replicas, a pod template (metadata and spec of the pods in the ReplicaSet), the container image, and the port to expose and accept traffic on.

Deployment Lifecycle

- Progressing - a task is being performed e.g. creating a new ReplicaSet

- Complete - all new replicas are available and on the latest version, and no old replicas are running

- Failed - creation of the ReplicaSet couldn't be completed

Deployments are used for updating, rolling back, scaling and autoscaling.

Designed for stateless applications.

Creating a deployment

- Take a declarative approach and define a YAML file with the config - apply this using the "kubectl apply" command

- Take an imperative approach and use the "kubectl create deployment" command with inline params for the deployment

- Use the GKE Workloads menu in the Google Cloud console

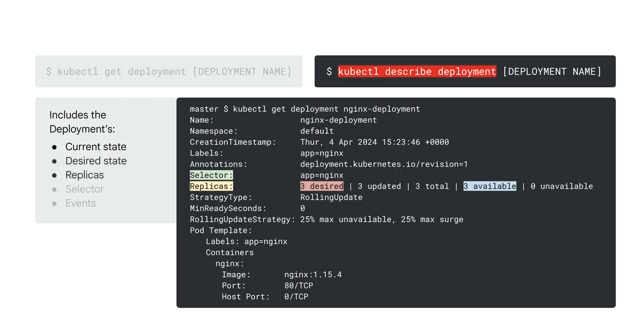

Inspecting Deployments

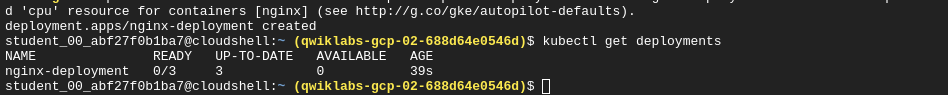

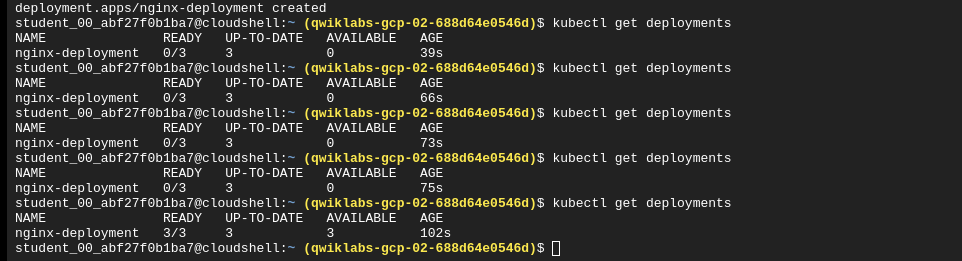

kubectl get deployment

- READY - how many replicas are ready out of the desired number

- UP-TO-DATE - number of replicas that are up to date based on the deploy config

- AVAILABLE - number of replicas available to users

- AGE - how long the deployment has been running

Can also output the yaml config from kubectl.

kubectl describe

You can also use the google cloud console.

Updating Deployments

When you update your container you will need to deploy again.

- kubectl apply -f [DEPLOYMENT FILE]

You apply an updated deployment YAML - this also lets you update deployment specifications outside the pod template, like the number of replicas.

- "kubectl set" command

e.g. kubectl set image deployment

This lets you make changes to the pod template specs like the image, resources or selector values.

- "kubectl edit"

Make changes directly to the specification file.

Ramped strategy - a rolling update using 2 ReplicaSets. A new ReplicaSet is created with the new container version; its pods are brought up gradually while the pods in the old ReplicaSet are brought down.

maxSurge - max number of pods that can be created over the desired replica count during the update

maxUnavailable - max number of pods that can be unavailable at the same time during the update

e.g. maxSurge 1, maxUnavailable 0 - update one pod at a time, with no old pod removed until its replacement is ready
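As a rough sketch, the maxSurge 1 / maxUnavailable 0 example could be written into a Deployment spec like this. The replica count is an illustrative assumption, and only the relevant fragment of the manifest is shown.

```yaml
# Fragment of a Deployment manifest (not a complete file).
spec:
  replicas: 3              # illustrative value
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1          # at most 1 pod above the desired replica count
      maxUnavailable: 0    # never drop below the desired replica count
```

Both fields also accept percentages (e.g. 25%) instead of absolute pod counts.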

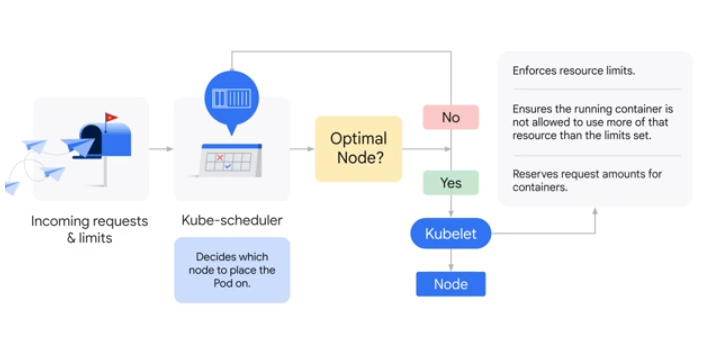

Requests and Limits

As rolling updates occur, resource usage starts to vary - to control this, you can set requests and limits manually or have defaults applied for you.

Requests - minimum CPU and mem a container will be allocated on a node

The scheduler can only assign a container to a node if the node has enough resources for that container. Specifying more than is available will result in the pod not being scheduled.

Limits - upper boundary on CPU and mem

Requests and limits are set at the individual container level within a pod. Pods are scheduled as a unit, so a pod's total requests and limits are the sum across the containers within it.

kube-scheduler decides which node to place a pod on. If no suitable node is found, the pod stays pending and the scheduler retries. Once a node is chosen, the kubelet on that node enforces the resource limits and also reserves the requested resources.

millicores - unit of CPU resource (1000m = 1 CPU)
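A minimal sketch of per-container requests and limits, assuming a hypothetical two-container pod. The names, images and values are illustrative only; the closing comment shows how the pod-level totals are derived.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: resources-demo          # hypothetical name
spec:
  containers:
  - name: app
    image: nginx
    resources:
      requests:
        cpu: 250m               # a quarter of one CPU
        memory: 128Mi
      limits:
        cpu: 500m
        memory: 256Mi
  - name: sidecar
    image: busybox:1.36
    command: ["sleep", "3600"]
    resources:
      requests:
        cpu: 100m
        memory: 64Mi
      limits:
        cpu: 200m
        memory: 128Mi
# Pod totals used for scheduling: requests 350m CPU / 192Mi memory,
# limits 700m CPU / 384Mi memory.
```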

Other Deployment Strategies

Recreate

Delete old pods before creating new ones - all users get the update at the same time, but experience disruption whilst creating new ones

Blue-Green

Create a new deployment - blue is the old version, green is the new one. Once the green pods are ready, the traffic is switched. Newer versions can be tested in parallel, but resource usage is doubled during the deployment.

Canary

Gradually move traffic to the new version - minimises excess resource usage compared to blue-green. Can be slower, and may require a service mesh to move traffic accurately.

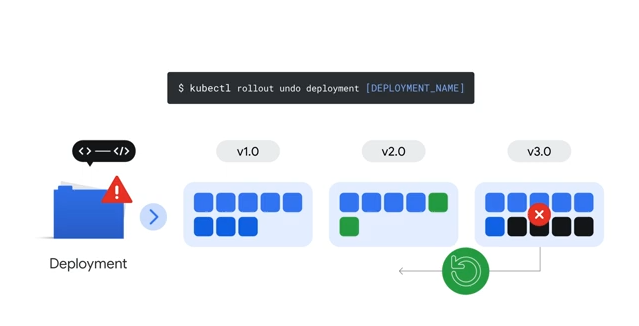

Rollout Undo

You can inspect the rollout history using kubectl rollout history

It retains the history of the last 10 ReplicaSets by default.

kubectl rollout undo - undo the last rollout

You can pause rollouts with kubectl rollout pause e.g. when multiple rollouts are happening at once on different versions

kubectl rollout status - monitor the status of a rollout

kubectl delete - delete a deployment (also possible from the cloud console). This will delete all resources managed by the deployment, including running pods.

Jobs and Cronjobs

A job is a k8s resource.

Jobs create pods to execute a task and then terminate them.

A job manages a task to completion rather than to a desired state like with a controller.

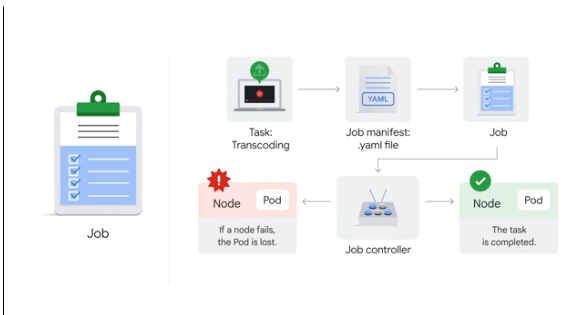

Example transcoding task

We create a job manifest in yaml for the task, then a job is created based on that yaml in the cluster.

The job controller then schedules a pod to complete the task and monitors it, handling rescheduling on failure. Once the job is complete, the finished job and its pods can be removed; by default k8s keeps them around for inspection until they are deleted.

- Non-parallel jobs - a single task, run once until completion. One pod is created to execute it, and the job is successful when that pod terminates successfully.

- Parallel jobs - concurrent pods are scheduled, each working independently. The job is finished when the specified number of tasks have completed successfully e.g. bulk image resizing

kind: Job

Job spec - how the job should perform its task

restartPolicy - Never or OnFailure, refers to the restart policy of the containers in the job's pods

- Never - a failing container fails the pod, and the job controller starts a replacement pod

- OnFailure - the container restarts in place, and the pod remains on the node

backoffLimit field - how many failed retries the job controller should tolerate before considering the job itself failed

spec.parallelism=2 - signals to the job controller that this is a parallel job, and tells it how many pods to run concurrently.

It then keeps on launching new pods to replace any that finish until the number of successful completions hits the target completion count (spec.completions).
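Putting those fields together, a parallel Job manifest might look roughly like the sketch below. The job name, image, command and numeric values are assumptions made for illustration, not taken from the course.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: image-resize            # hypothetical name
spec:
  completions: 6                # target number of successful pod completions
  parallelism: 2                # run at most 2 pods at the same time
  backoffLimit: 4               # tolerate 4 failed retries before failing the job
  template:
    spec:
      restartPolicy: OnFailure  # restart the failed container in place
      containers:
      - name: resize
        image: busybox:1.36
        # Placeholder for the real task.
        command: ["sh", "-c", "echo resizing one batch of images; sleep 5"]
```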

`kubectl describe job` - to describe jobs

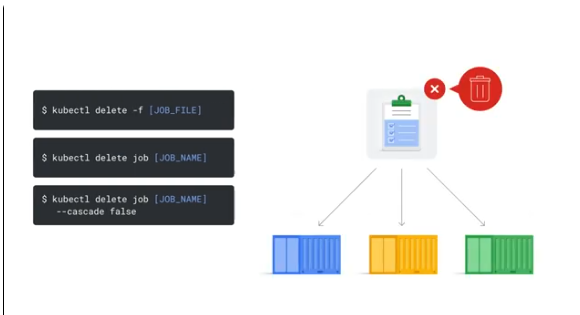

Deleting jobs

Cluster Scaling

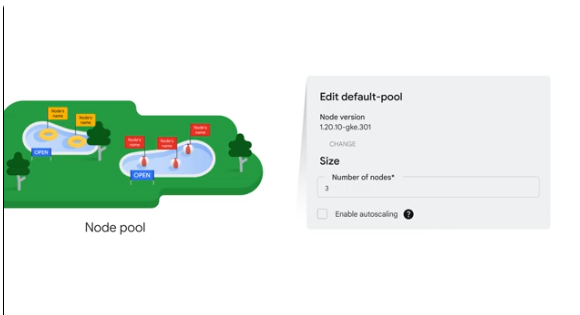

Standard mode - manual scaling

Cluster contains 1 or more node pools - groups of nodes with the same configuration type. Can specify max and min size of the pool. Uses a NodeConfig specification that allows for labelling.

After the cluster is created, you can add additional custom node pools of different sizes and types to the cluster. You can scale down the node pool to have 0 nodes, but the cluster itself won't be shut down.

Resize

Can use the resize command, which removes instances at random and terminates their pods gracefully.

Autoscaler disabled by default.

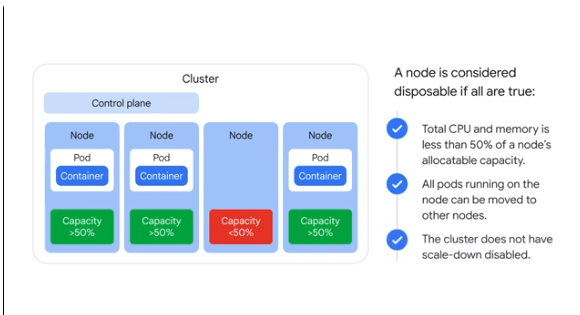

GKE can delete underutilised nodes.

Pod enters holding pattern whilst awaiting capacity. The scheduler marks the pod as unschedulable.

Disposable nodes: if a node is un-needed for 10 minutes, the autoscaler will delete it.

Cluster wide limit of 200k pods

gcloud container {clusters|node-pools} create {POOL_NAME} --enable-autoscaling --min-nodes 15 --max-nodes 50

Pod Placement

Use labels, affinity rules, taints and tolerations to help the scheduler place pods.

Nodes get zone-specific labels from the kubelet at startup.

nodeSelector - specifies the node labels a pod requires; the pod is only scheduled onto nodes carrying all of them

Pod preference vs node capabilities - a system of matching between these 2.

cloud.google.com/compute-class - a label used in a nodeSelector rule to specify a compute class for the pods
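A small sketch of a nodeSelector using the compute-class label. The pod name and the "Balanced" value are assumptions for illustration; check which compute classes your cluster actually offers.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: selector-demo           # hypothetical name
spec:
  nodeSelector:
    # Assumed compute class value, shown only as an example.
    cloud.google.com/compute-class: Balanced
  containers:
  - name: app
    image: nginx
```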

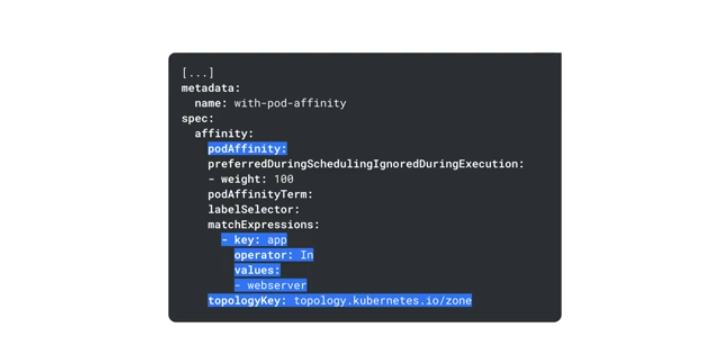

Affinity rules - influence pod placement using labels. Node affinity attracts pods to nodes with matching labels; pod affinity ensures 2 or more pods land on the same node, and pod anti-affinity spreads them apart.

Unlike nodeSelector, affinity rules can be expressed as preferences as well as requirements.

requiredDuringSchedulingIgnoredDuringExecution - a strict requirement when scheduling pods

preferredDuringSchedulingIgnoredDuringExecution - a flexible preference that's not mandatory

A weight can be set for a preference - the higher the weight, the stronger the preference.

Node pools should be named after the compute instance type used to create the nodes. GKE automatically assigns labels to nodes based on their node pool names.

topologyKey - defines preferences based on topology domains like zones and regions e.g. don't schedule a pod to a zone if other pods are already running in that zone.
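The sketch below combines a weighted node affinity preference with a required pod anti-affinity rule keyed on the zone. The pod name, labels and node pool name are hypothetical; cloud.google.com/gke-nodepool is the label GKE derives from the node pool name.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web                     # hypothetical name
  labels:
    app: web
spec:
  affinity:
    nodeAffinity:
      # Soft preference: favour nodes from one pool, but allow others.
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 80
        preference:
          matchExpressions:
          - key: cloud.google.com/gke-nodepool
            operator: In
            values: ["n2-standard-pool"]   # hypothetical pool name
    podAntiAffinity:
      # Hard rule: no two app=web pods in the same zone.
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchLabels:
            app: web
        topologyKey: topology.kubernetes.io/zone
  containers:
  - name: web
    image: nginx
```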

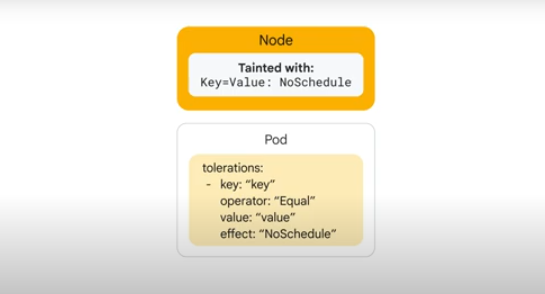

Taints and Tolerations

Taint - a label acting as a restriction, indicates that a node is not suitable for certain kinds of pods unless those pods tolerate the taint.

Toleration - a specification allowing the pod to be scheduled onto a node with a matching taint (an over-ride to the taint).

Taints are configured on nodes, not pods. Tolerations are at the pod level.

Taint specifications

Key - descriptive name representing the taint's purpose

Value - optional info to clarify the taint

Effect - to prevent or discourage pods from being scheduled on tainted nodes

Toleration specification

In addition to key, value and effect, it also has an operator.

Pods can override a taint if the toleration matches the taint's key, value and effect, as judged by the operator.

The operator is the check which the toleration must pass.

'Equal' - the toleration's key, value and effect must all match the taint's.

'Exists' - the toleration's key and effect must match the taint's, regardless of value.

Effect Settings

NoSchedule - prevent new pods from being scheduled on tainted nodes

PreferNoSchedule - encourages but doesn't prohibit pod scheduling

NoExecute - evict existing pods, prevent new ones from landing on the tainted node (without a toleration of NoExecute)

NodeSelectors are often used with node pools for tainting and tolerating.
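As an illustration, a pod that tolerates a taint and also selects the tainted nodes could look like this. It assumes the nodes carry a hypothetical taint dedicated=batch with effect NoSchedule and a matching dedicated=batch label; none of these names come from the course.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: batch-worker            # hypothetical name
spec:
  tolerations:
  - key: dedicated              # must match the taint's key
    operator: Equal             # so the value must match too
    value: batch
    effect: NoSchedule
  nodeSelector:
    dedicated: batch            # assumes the nodes are labelled as well as tainted
  containers:
  - name: worker
    image: busybox:1.36
    command: ["sleep", "3600"]
```

The toleration only permits scheduling onto the tainted nodes; the nodeSelector is what actually steers the pod there.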

Helm

Software install and management functionalities. Organize k8s resources in charts.

Helm and k8s API work together to install, upgrade and query k8s resources in an automated manner.

GKE Networking

ip-per-pod model : single ip address on a pod, all containers share the same network namespace

namespace - mechanism for isolating groups of resources in a cluster

On a node, pods are connected via the node's root network namespace, which is connected to the node's primary NIC.

IP addresses on pods must be routable.

Nodes get their pod ip addresses from address ranges assigned to the VPC being used.

When you deploy a GKE cluster you select a region or zone. By default, the VPC has an IP subnet for each region in the world.

alias ip - additional secondary ip addresses or ranges

A VPC-native GKE cluster automatically creates an alias IP range to reserve IP addresses for cluster-wide services. A separate alias IP range is also created for your pods, which is a /14 block (CIDR) allowing for around 262k addresses (2^18).

Each node is allocated a /24 block, i.e. 256 IP addresses.

This lets you configure the number of nodes you expect to use and a max number of pods per node. The pods' IP addresses are taken from the alias IP range, and GKE configures the VPC to recognize this range as an authorized secondary subnet.

Each node maintains a separate IP address space for pods. Pods can connect directly to one another via IP addresses.

Pod IP addresses are natively routable within the cluster's VPC and also in other VPCs connected via VPC peering.

Traffic is NAT-ed to the node IP address when exiting the Google VPC.

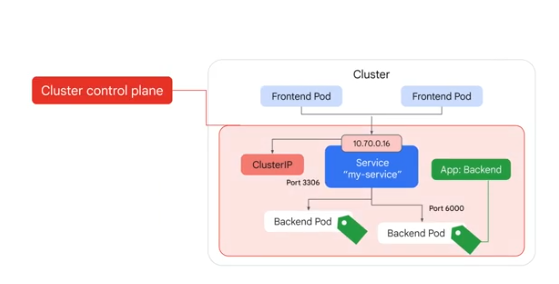

Services

A set of pods with a single IP address for accessing them. How the external world accesses the cluster.

Provides a stable IP and name for a set of pods. These remain the same through updates and upgrades.

Easier for apps to connect to one another or be found by clients.

VMs are designed to be durable - pods are deleted when updated and replaced with a new pod, so pod IP addresses are ephemeral.

Changing IP addresses can lead to service disruptions.

Endpoints - the collection of pod IP addresses behind a k8s service

The cluster reserves a pool of IP addresses for services - when a service is created, it gets issued one of these reserved ip addresses. This virtual ip is durable and published to all nodes in the cluster. Each cluster can handle 4k services (4k addresses reserved for services in a cluster).

Searching for and locating a service

- Env vars - the kubelet adds a set of env vars for each service in the same namespace, so the pod can access the service via env vars. Changes made after a pod starts won't be visible to pods already running.

- DNS Server - the DNS server is part of GKE and watches the API server to know when new services are created. kube-dns creates a set of DNS records, allowing pods to resolve k8s service names automatically.

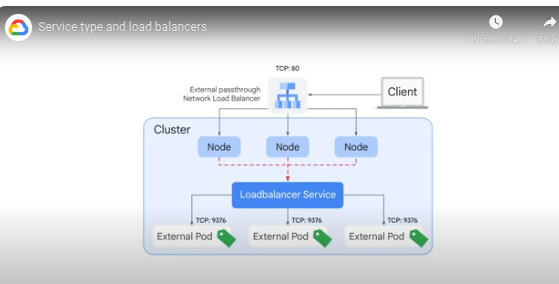

Service types and LBs

You can do service discovery with service types:

- ClusterIP - static IP, distributes traffic within the cluster, not accessible from outside the cluster

Cluster control plane selects pods to use and assigns an IP

- NodePort - built on top of ClusterIP. Exposes the service on a port on each node's IP, allowing external access. Can be used to expose the service via an external LB.

- LoadBalancer - built on top of ClusterIP. Uses Google's passthrough network LB, which sits outside the cluster. The LB forwards traffic to the nodes, which forward it on to one of the pods.

Specify the type as 'LoadBalancer'

Internal LB:

networking.gke.io/load-balancer-type: "Internal"
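For context, the internal LB annotation sits in the Service metadata. The sketch below assumes a hypothetical service in front of pods labelled app: nginx; the name and ports are illustrative.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: internal-nginx          # hypothetical name
  annotations:
    # Requests an internal rather than external passthrough LB on GKE.
    networking.gke.io/load-balancer-type: "Internal"
spec:
  type: LoadBalancer
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```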

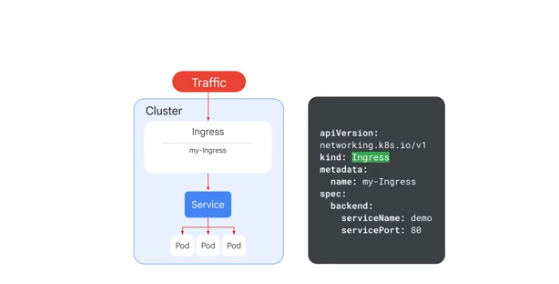

Ingress

Operates above services to direct traffic into your cluster.

Collection of rules to direct external traffic to internal services.

Implemented using cloud lb in GKE.

When you create an ingress, an app lb is created by GKE and configured to route traffic to the service - which can be a NodePort or a LoadBalancer service.

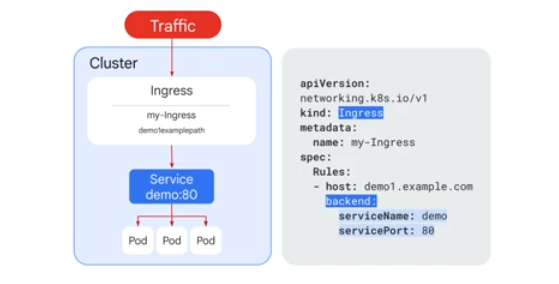

GKE supports HTTP rules - each rule is keyed by a host name. Traffic for a host can be further filtered by path, and each path has a service backend defining the service name and port.

Can have multiple host names on the same IP address.

The app LB redirects traffic based on the hostnames to the correct backend service specified in the manifest.

Using the path, the traffic is directed to the correct service.

Unmatching traffic gets sent to the default backend which is specified in the ingress manifest. GKE can supply one which returns 404.
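A rough sketch of an Ingress manifest with two hosts, path rules and a default backend. All host names, service names and ports here are hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-ingress            # hypothetical name
spec:
  defaultBackend:               # receives traffic that matches no rule
    service:
      name: default-svc
      port:
        number: 80
  rules:
  - host: shop.example.com
    http:
      paths:
      - path: /cart
        pathType: Prefix
        backend:
          service:
            name: cart-svc
            port:
              number: 8080
  - host: blog.example.com      # second host served from the same LB IP
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: blog-svc
            port:
              number: 8080
```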

kubectl edit ingress [] for editing the manifest

kubectl replace [] for replacing the manifest entirely

Google also provides identity-aware proxy for granular access control at the application level.

Google Cloud Armor - protection against DDoS attacks, with allow/deny lists of IP addresses and ranges, plus geolocation restrictions.

Cloud CDN - content closer to users.

BackendConfig - custom resource used by ingress to define config for all services.

SSL certs are managed in one place, as ingress supports TLS termination at the LB on the network edge. Traffic within the cluster can then use faster, unencrypted communication between microservices, while external access is still protected by TLS.

Ingress can also be used to lb traffic globally to multiple clusters and multiple regions, geo-balancing across multiple regions.

Container Native LB

- Double hop dilemma - when traffic makes an un-needed second hop between VMs in a cluster.

Without Container Native Load Balancing

Regular app lb distributes traffic to all nodes in an instance group, regardless of whether the traffic was meant for the pods in a particular node.

The LB can route traffic to any node within an instance group.

When a client sends traffic, it comes in via a network LB. The network LB chooses a random node in the cluster and forwards the traffic to it. To keep pod use even, kube-proxy selects a pod at random for the incoming traffic - the randomly chosen pod may be on the same node or on another node in the cluster.

If the randomly chosen pod is on a different node, the traffic must be sent on to the node where that pod resides. On the return path, the traffic goes from the pod's node back to the initial node, which then forwards it to the network LB, which sends it to the client.

There are 2 levels of LB going on here - one by the network LB (to nodes) and the other via kube-proxy (to pods).

This keeps pod use even, but at the expense of latency, extra network traffic.

For latency-sensitive workloads, the LB service can be configured to force kube-proxy to choose a pod local to the node that received the client traffic. This is done by setting the externalTrafficPolicy field to Local in the manifest.
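In a Service manifest this is a single field on the spec. The sketch assumes a hypothetical LoadBalancer service in front of pods labelled app: nginx.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-local             # hypothetical name
spec:
  type: LoadBalancer
  externalTrafficPolicy: Local  # only route to pods on the node that received the traffic
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80
```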

For even cluster load, Google's app LB can direct traffic straight to the pods instead of to the nodes. This requires the cluster to operate in VPC-native mode, relying on a data model called network endpoint groups.

Network endpoint groups - IP to port pairs, so that pods are just another endpoint in the group like a VM would be. This allows for connections to be made directly between the LB and the intended pod.

This also allows for other LB features like traffic shaping. The LB has direct access/connection to the pod, more visibility to pod health.
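On GKE, container-native load balancing through Ingress is requested with a NEG annotation on the Service. A minimal sketch, assuming a hypothetical service for pods labelled app: nginx:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-neg               # hypothetical name
  annotations:
    # Asks GKE to create network endpoint groups for this service's pods.
    cloud.google.com/neg: '{"ingress": true}'
spec:
  type: ClusterIP
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80
```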

Issues with Local external traffic policy

Constrains the mechanism by which traffic is balanced across pods.

The app LB forwards traffic via nodes which may not be aware of the state of the pods themselves.

Network Policies

All pods can communicate with one another by default, but access can be restricted using a network policy.

This is a set of firewall rules applied at the pod level to restrict access to other pods and services.

e.g. a web layer can only be accessed from a certain service

Prevent giving attackers a foothold from which to pivot, reduces the attack surface.

Dataplane v2

This is used to enforce network policies and is enabled automatically on Autopilot clusters.

Uses eBPF for efficient packet processing, uses k8s specific metadata in the packets for processing, adds info for network annotated logs to provide greater visibility.

Enabling network policy enforcement consumes cluster resources at the node level.

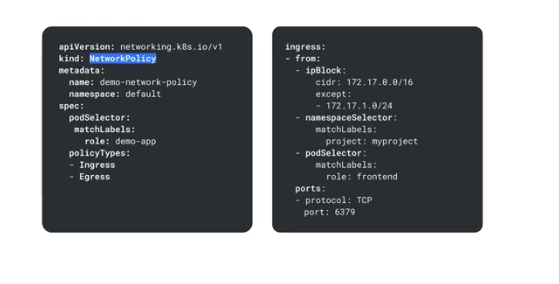

Policy Config

Policies are written in yaml

podSelector - selects the pods the policy applies to, based on labels

kind = NetworkPolicy

policyTypes - Ingress, Egress or both

no default egress policy

from section of ingress - specifies the source, which can be an ipBlock, namespaceSelector or podSelector

egress - main sections are 'to' and 'ports'

e.g. a 'to' section containing an ipBlock with cidr 10.0.0.0/24 allows egress to 10.0.0.0/24
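An egress policy of that shape might look like the sketch below. The policy name, pod label and port are assumptions for illustration; only the 10.0.0.0/24 range comes from the notes.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-egress-to-subnet  # hypothetical name
spec:
  podSelector:
    matchLabels:
      app: web                  # hypothetical label
  policyTypes:
  - Egress
  egress:
  - to:
    - ipBlock:
        cidr: 10.0.0.0/24       # the only destination range allowed
    ports:
    - protocol: TCP
      port: 5432                # illustrative port
```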

You have to enable network policy on the cluster, otherwise applying a network policy does nothing.

gcloud container clusters update - to disable a network policy

In cloud console, you first disable the policy for nodes and then for the control plane.

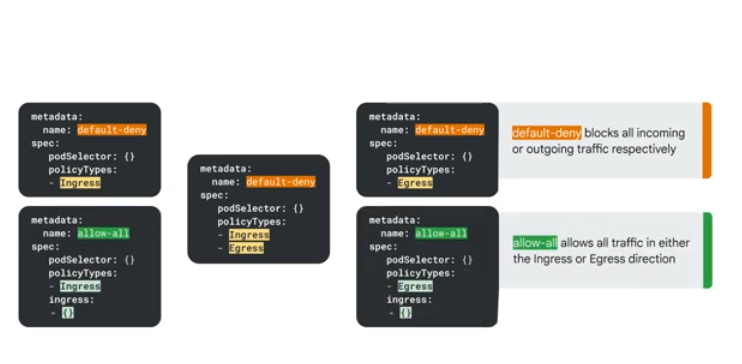

No network policy defined - all ingress and egress is allowed between pods, including across namespaces

default-deny - a policy for ingress or egress that blocks all incoming or outgoing traffic

allow-all policy - allow traffic in both directions (ingress and egress)
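A default-deny policy for ingress is typically written with an empty podSelector, which selects every pod in the namespace, and no ingress rules. A minimal sketch (the policy name is arbitrary):

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
spec:
  podSelector: {}               # empty selector = all pods in the namespace
  policyTypes:
  - Ingress                     # no ingress rules listed, so all ingress is denied
```

Swapping Ingress for Egress (or listing both) gives the equivalent default-deny for outgoing traffic.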

Persistent Data and Storage

Need to ensure app data is present after updates

Stateful sets - controller for managing pod lifecycles with stateful storage

config maps - store config data

k8s secrets - keep sensitive data

Volumes

Storage abstraction used to allow multiple providers.

Helps simplify provisioning and managing storage with a consistent interface.

Volumes - a directory accessible to all containers in a pod, can be ephemeral or persisted. Attached to pods, not containers.

PersistentVolumes - cluster resources, independent of pods, used to manage durable storage. Backed by disk. Can be provisioned dynamically through PersistentVolumeClaims or manually by an admin.

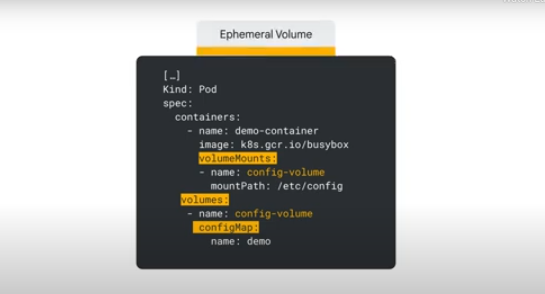

Ephemeral Volumes

emptyDir - basic ephemeral volume: an empty read-write directory on a pod. Used for storing temp files and data that doesn't need to be persisted. Pod removal causes the data to be deleted. A container crash doesn't remove the pod from its node, so the emptyDir volume's data survives it.

DownwardAPI Volumes

Expose pod labels, annotations, resource requests and limits, and other pod and node info - for configuring apps based on their deployment context. This is how containers learn about their pod environment.

ConfigMap Volumes

Key-value pairs that can be shared across multiple pods.

Can be referenced in a volume like a directory in a tree structure.

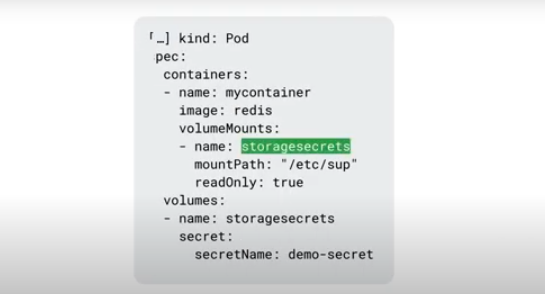

Secret Volume

Designed for sensitive data. k8s itself only base64-encodes secret values rather than encrypting them, but GKE encrypts them at rest.

Ensures secure access within a pod.

Secret volumes are backed by tmpfs (an in-memory filesystem) on the node, so the data is never written to non-volatile storage there.

ConfigMaps are used for non-sensitive data, whereas secret volumes are used for sensitive data.

Secret and ConfigMap volumes are coupled to the pod lifecycle and are deleted when the pod goes down. The volumes are ephemeral, though the backing data (via the abstraction) is not.

Durable Volumes

These are persistent volumes. Two components - the persistent volume (PV) and the persistent volume claim (PVC).

PVC - a request for persistent storage, specify characteristics of the storage like size, access mode and storage class.

The k8s controller matches the PVC to an available PV that matches the requirements. Once matched, the pod mounts the PV and has access to the storage.

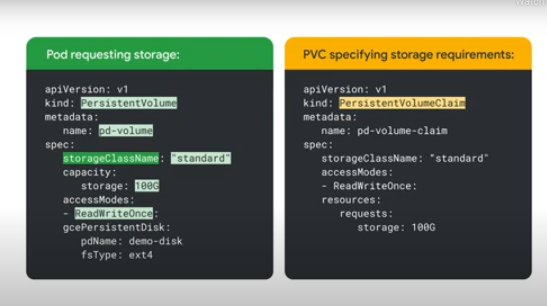

Persistent Volume Manifest

storageClassName - referenced by PVCs in the pod.

A value of 'standard' means you will use the compute engine standard persistent disk type. This is the default if you don't specify a storage class.

Volume capacity - the storage capacity of the PV.

For an SSD, use a StorageClass whose type parameter is pd-ssd and reference that class from the PVC.

Note: k8s StorageClass is not the same as Google Cloud Storage class. Google Cloud Storage class is for providing object storage for the web, whereas k8s storageclasses are for specifying how PersistentVolumes are backed.
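As a sketch, an SSD-backed StorageClass and a PVC that uses it could be declared as follows. The class and claim names are hypothetical, and the provisioner shown is the Compute Engine persistent disk CSI driver; older clusters may use a different provisioner name.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast-ssd                # hypothetical name
provisioner: pd.csi.storage.gke.io
parameters:
  type: pd-ssd                  # SSD persistent disk instead of standard
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: fast-claim              # hypothetical name
spec:
  storageClassName: fast-ssd    # ties the claim to the class above
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 30Gi
```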

Access Modes

The level of access, number of pods that can mount the PV, how pods can mount and access the storage provided by the PV.

- ReadWriteOnce - the PV can be mounted as read-write by only a single node in the cluster, so any pod on that node can access the PV for reading and writing.

- ReadOnlyMany - can be mounted as read-only by multiple nodes simultaneously.

- ReadWriteMany - can be mounted as read-write by many nodes.

Google cloud persistent disks do not support ReadWriteMany.

YAML config:

PVs can't be added to a pod spec directly. You instead need to use a PVC, and add that PVC to the pod.

On startup of the pod, GKE looks for a suitable PV based on the claim. If there is no matching PV, k8s will try to provision one dynamically as long as the storage class is defined. If it's not defined, dynamic provisioning will only work if it's enabled at the cluster level, and it will use the "standard" StorageClass as the default.

Deleting a PVC also deletes the provisioned PV.

To retain the PV, set its persistentVolumeReclaimPolicy to Retain in your config.

Stateful Sets

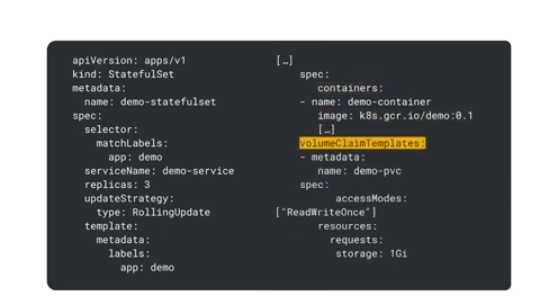

With a Deployment, replicas need to attach PersistentVolumes dynamically

This can lead to a deadlock. StatefulSets resolve this - when an app needs to maintain state in a PersistentVolume, it should be done via a StatefulSet

StatefulSets run and maintain a set of pods: you define a desired state and its controller achieves it. This allows for a persistent identity to be maintained for each pod. Each pod in the StatefulSet has an index, pod name, stable hostname and some persistent storage which is also stably identifiable and is linked to the pod's index in the set.

Deployment, scaling and updates are ordered by the ordinal index of the pod within the StatefulSet. This means if demo-1 doesn't come up, demo-2 won't be launched.

If demo-0 fails after the creation of demo-1 but before demo-2 comes up, then demo-0 needs to be restarted and come back up before we try to bring demo-2 up.

Scale-down and rolling updates happen in reverse ordinal order e.g. demo-2 will be updated before demo-1

Setting podManagementPolicy to Parallel launches pods in parallel without waiting for each one to reach the Running and Ready state.

For each pod to maintain its own individual state, it must have durable storage to which no other pod writes - the StatefulSet uses a unique PVC for each pod using ReadWriteOnce as the access mode.

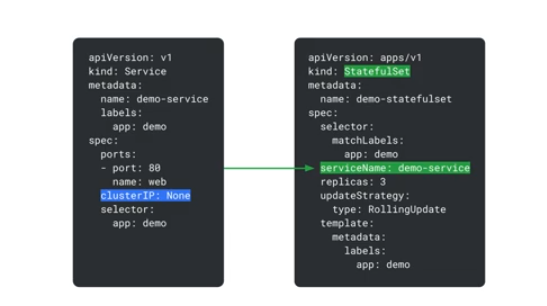

To control networking, StatefulSets require a service.

Headless service - no LB and no single service IP, created by specifying 'None' for the cluster IP in the service definition

Add a specific service to a StatefulSet by adding that service to the serviceName field.

The service should have a label selector that matches the template's labels as defined in the template section of the StatefulSet
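A headless service for a StatefulSet might be sketched like this. It reuses the statefulset-demo-service name and app: MyApp label from the lab notes further down purely for illustration; the port is an assumption.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: statefulset-demo-service  # the name the StatefulSet puts in serviceName
spec:
  clusterIP: None                 # headless: no LB and no single service IP
  selector:
    app: MyApp                    # must match the StatefulSet's pod template labels
  ports:
  - port: 80
    name: http
```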

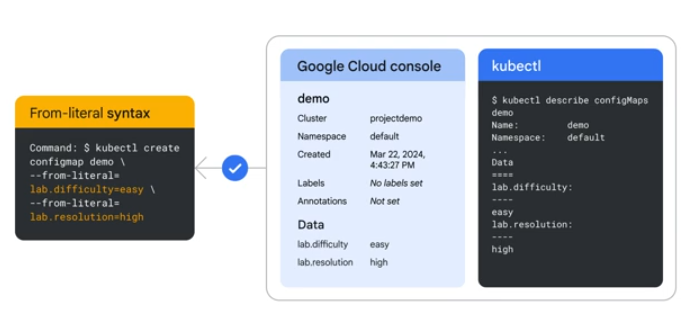

ConfigMaps

Store config as key-value pairs, used for storing config files, command line args, env vars, ports etc and make them available within containers.

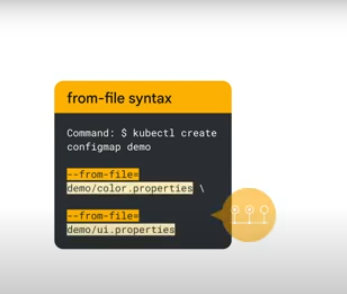

You can also add config maps from files using the from-file syntax:

You can add multiple files to a config map.

From a manifest:

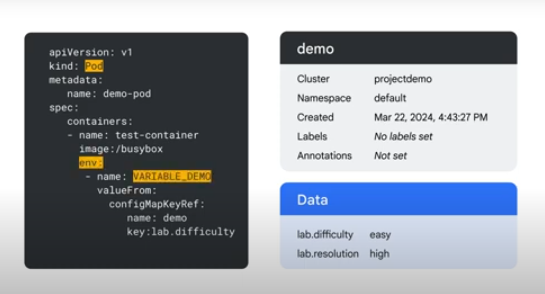

Pods referencing config maps

- As a container env var

You can see that it's using valueFrom as configMapKeyRef

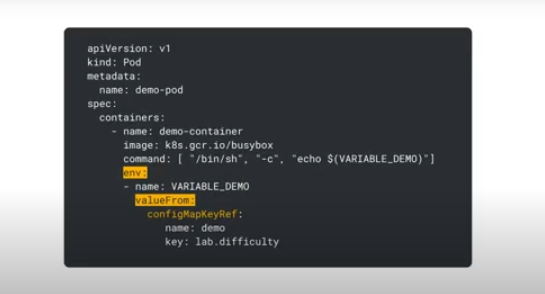

- In pod commands

You can see how the env var gets referenced in the pod command

- By creating a volume

A config volume is created for the pod and all the data from the map stored as files which are mounted on the container. If you change the keys of the map, the volume is eventually updated.
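The three ways of referencing a ConfigMap can be sketched in one pod. Every name and value below is hypothetical; the container simply prints the env var and lists the mounted files.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: demo-config             # hypothetical name
data:
  LOG_LEVEL: debug
---
apiVersion: v1
kind: Pod
metadata:
  name: config-demo             # hypothetical name
spec:
  containers:
  - name: app
    image: busybox:1.36
    # $(LOG_LEVEL) is expanded by k8s from the env var defined below.
    command: ["sh", "-c", "echo level=$(LOG_LEVEL); ls /etc/config; sleep 3600"]
    env:
    - name: LOG_LEVEL
      valueFrom:
        configMapKeyRef:        # read a single key as an env var
          name: demo-config
          key: LOG_LEVEL
    volumeMounts:
    - name: config-volume
      mountPath: /etc/config    # each key appears as a file in this directory
  volumes:
  - name: config-volume
    configMap:
      name: demo-config
```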

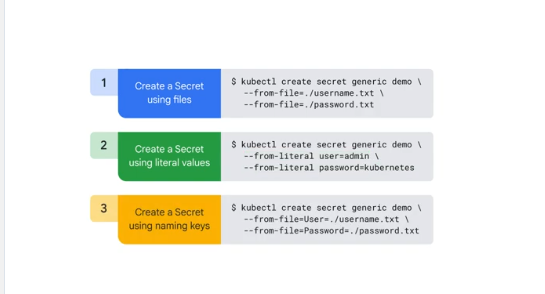

Secrets

Sensitive info within the user's control plane. Ensure k8s doesnt output sensitive data to logs.

- generic secrets - created from files, literals or directories. Stored as key-value pairs with base64-encoded values.

- tls secrets - store TLS certs and private keys

- docker registry secrets - creds for private docker registries

How do you create a secret?

kubectl create secret

from file or literals:

Separate control planes for configmaps and secrets. Provide a mechanism to create secure storage for storing and protecting secrets.

Kubelet periodically syncs with Secrets (the service) to keep a secret volume updated - eventual update.
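A generic secret can also be declared in a manifest and mounted as a volume. The sketch below uses made-up names and placeholder credentials; stringData lets you write plain values that k8s base64-encodes on creation.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: db-credentials          # hypothetical name
type: Opaque                    # the generic secret type
stringData:
  username: demo-user           # placeholder values only
  password: not-a-real-password
---
apiVersion: v1
kind: Pod
metadata:
  name: secret-demo             # hypothetical name
spec:
  containers:
  - name: app
    image: busybox:1.36
    command: ["sh", "-c", "ls /etc/secret; sleep 3600"]
    volumeMounts:
    - name: secret-volume
      mountPath: /etc/secret    # each key appears as a file in this directory
      readOnly: true
  volumes:
  - name: secret-volume
    secret:
      secretName: db-credentials
```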

Lab Notes

Set the region and cluster env vars

export my_region=us-central1

export my_cluster=autopilot-cluster-1

Kubectl tab completion

source <(kubectl completion bash)

Let the kubectl command access the cluster

gcloud container clusters get-credentials $my_cluster --region $my_region

nginx deployment file

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80

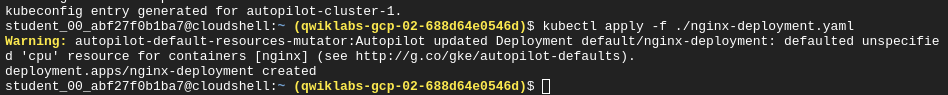

Deploying the Manifest

kubectl apply -f ./nginx-deployment.yaml

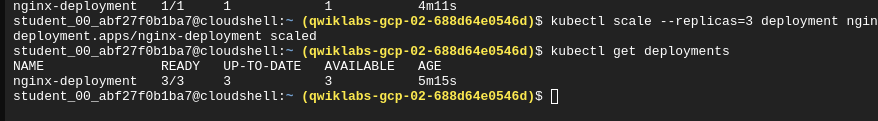

Scaling pods for a deployment

kubectl scale --replicas=3 deployment nginx-deployment

Trigger a deployment rollout

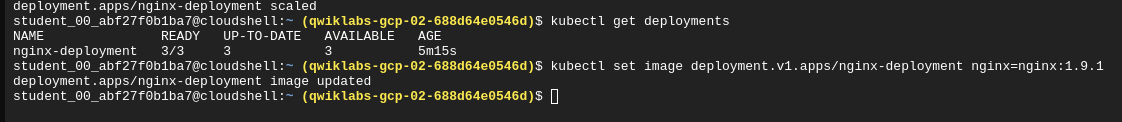

A deployment rollout gets triggered iff the deployment's pod template is changed e.g. the labels or the image is changed. Scaling doesn't trigger a rollout.

Update an image in the deployment:

kubectl set image deployment.v1.apps/nginx-deployment nginx=nginx:1.9.1

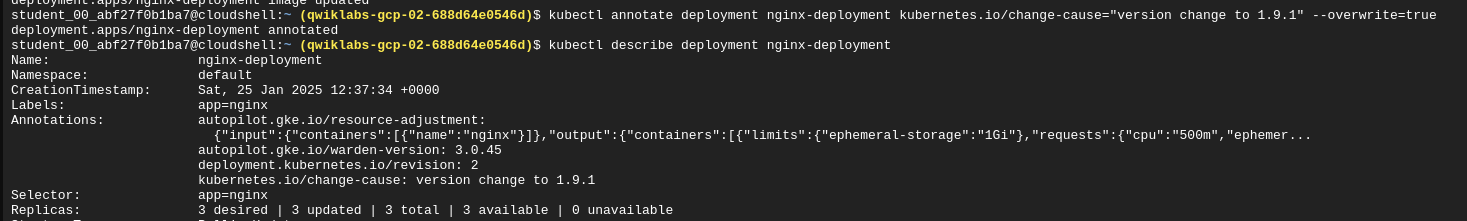

Annotate a rollout with change details

kubectl annotate deployment nginx-deployment kubernetes.io/change-cause="version change to 1.9.1" --overwrite=true

Viewing the change details annotation

kubectl describe deployment nginx-deployment

View rollout status

kubectl rollout status deployment.v1.apps/nginx-deployment

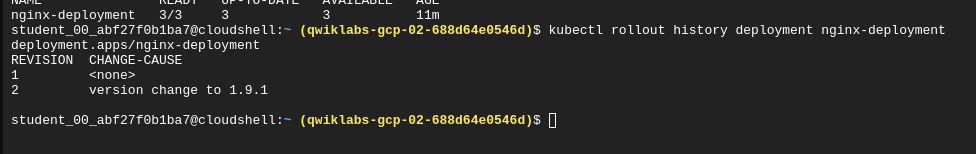

View rollout history

kubectl rollout history deployment nginx-deployment

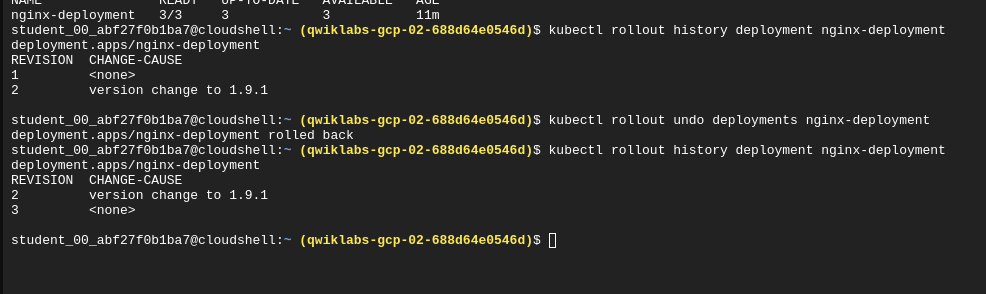

Triggering a rollback

kubectl rollout undo deployments nginx-deployment

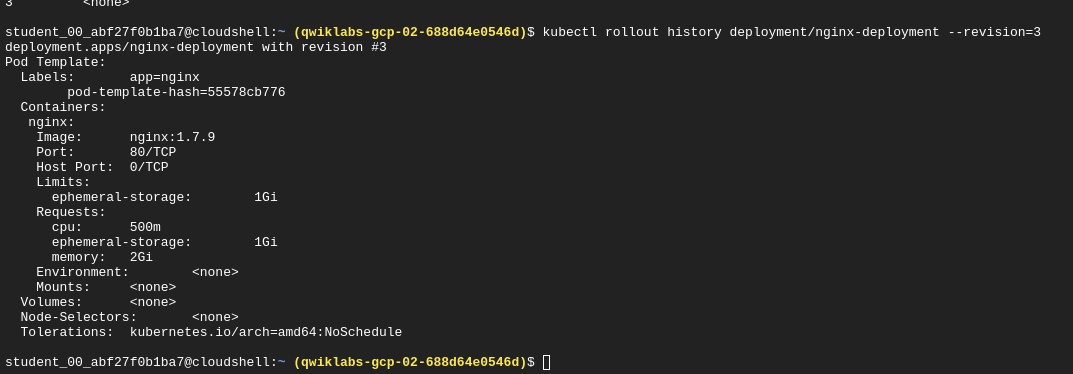

View rollout history of a specific revision

kubectl rollout history deployment/nginx-deployment --revision=3

Creating a Service

The service will control inbound traffic to the application. These can be ClusterIP, NodePort or LoadBalancer types.

Service manifest

apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: LoadBalancer
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 60000
    targetPort: 80

Apply the service

kubectl apply -f service-nginx.yaml

This service also applies to any other Pods with the app: nginx label, including any that are created after the service.

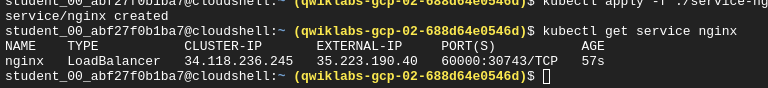

Check service creation

kubectl get service nginx

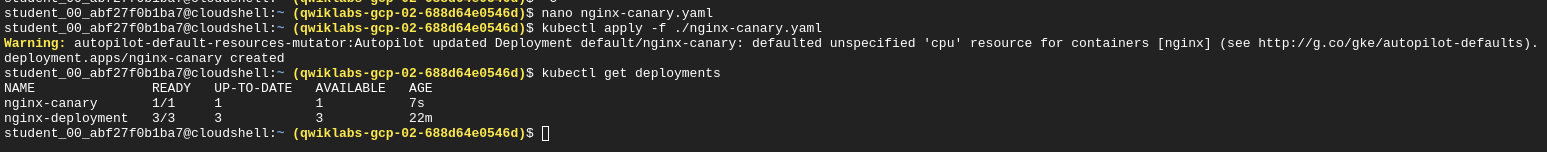

Canary Deployments

A single service targets both the canary and the normal deployment, directing a subset of traffic to the canary deployment.

A separate deployment needs to be created for the canary deployment.

Canary manifest

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-canary
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
        track: canary
        Version: 1.9.1
    spec:
      containers:
      - name: nginx
        image: nginx:1.9.1
        ports:
        - containerPort: 80

Apply the canary deployment

kubectl apply -f nginx-canary.yaml

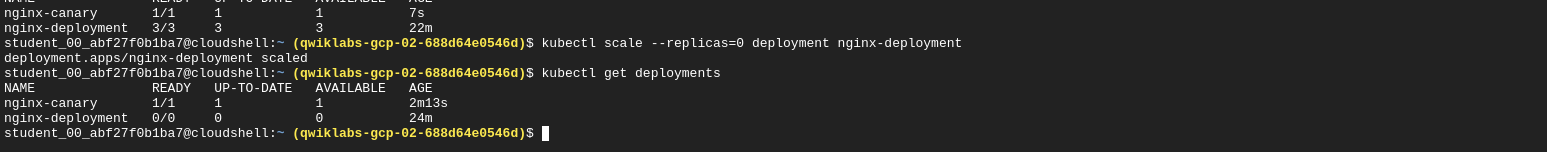

Scale down the primary deployment

kubectl scale --replicas=0 deployment nginx-deployment

Session Affinity

The service config doesn't pin client sessions to the same Pod. You can do this by setting the sessionAffinity to ClientIP in the service:

apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: LoadBalancer
  sessionAffinity: ClientIP
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 60000
    targetPort: 80

Cluster Creation Private Endpoint Subnet

This setting lets you define the range of addresses that can access the cluster externally. When this checkbox is not selected, you can access the cluster with kubectl only from within the Google Cloud network.

You create a private cluster so you can provide internal APIs or services accessible only by resources inside your network.

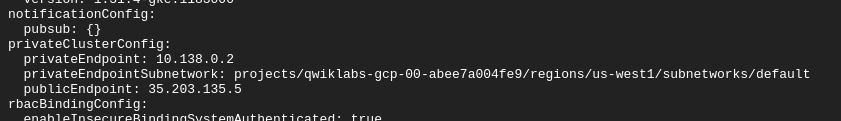

Describe Cluster

gcloud container clusters describe private-cluster --zone us-west1-a

- privateEndpoint - internal IP, nodes use this to communicate with the cluster control plane

- publicEndpoint - external IP, used by external services and by admins to communicate with the cluster control plane

Cluster lockdown options

- The whole cluster can have external access.

- The whole cluster can be private.

- The nodes can be private while the cluster control plane is public, and you can limit which external networks are authorized to access the cluster control plane.

Cluster Network Policy

To lock down communication between Pods to prevent lateral attacks.

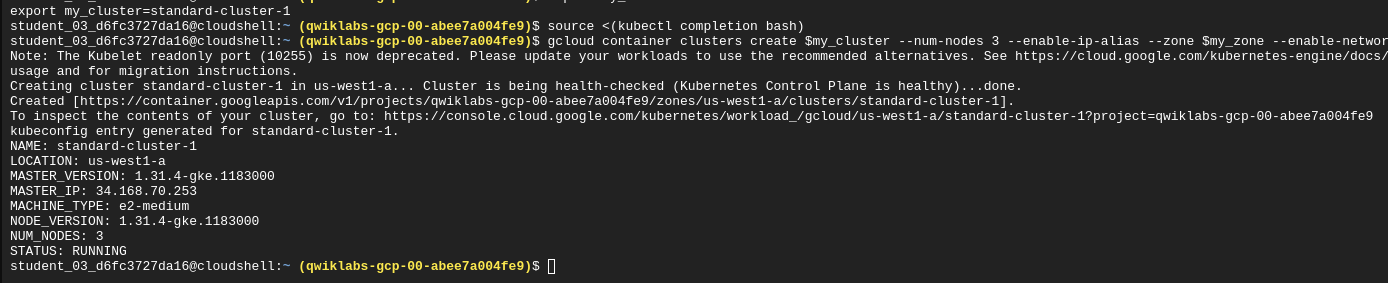

Create a new cluster

gcloud container clusters create $my_cluster --num-nodes 3 --enable-ip-alias --zone $my_zone --enable-network-policy

Configure access to the cluster for kubectl

gcloud container clusters get-credentials $my_cluster --zone $my_zone

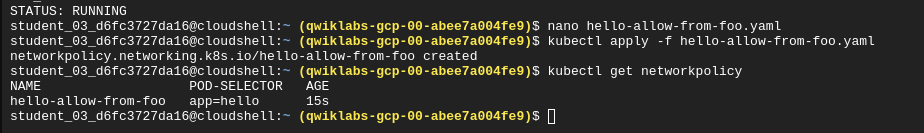

Restricting Incoming Traffic to Pods

We will create a NetworkPolicy manifest that defines an ingress policy that allows access to pods labeled `app: targetApp` from pods labeled `app: sourceApp`

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  # Resource names must be lowercase, so the original mixed-case name is lowercased here.
  name: hello-allow-from-targetapp
spec:
  policyTypes:
  - Ingress
  podSelector:
    matchLabels:
      app: targetApp
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: sourceApp

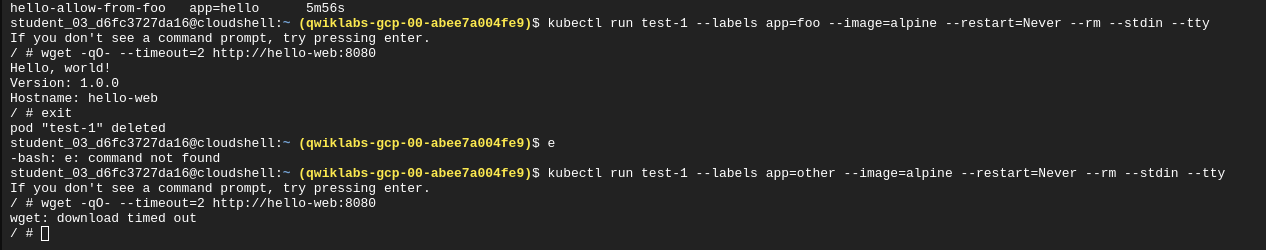

Run a temporary pod called test-1 and get a shell in that pod

kubectl run test-1 --labels app=foo --image=alpine --restart=Never --rm --stdin --tty

- --stdin keeps stdin open so the session is interactive

- --tty allocates a TTY for each container in the pod

- --rm means treat this as a temporary pod that is removed after its startup task. Because the startup task is an interactive session, dropping that session ends the task and deletes the pod.

- --labels adds a label to the pod

- --restart defines the restart policy of the temporary pod

Compare access to the hello app from the foo app:

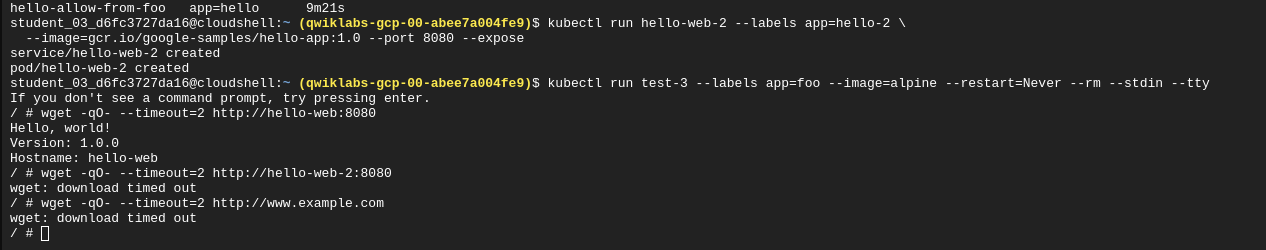

Restricting Outgoing Traffic and enabling DNS on port 53

You can restrict outgoing (egress) traffic as you do incoming traffic. However, in order to query internal hostnames (such as hello-web) or external hostnames (such as www.example.com), you must allow DNS resolution in your egress network policies. DNS traffic occurs on port 53, using TCP and UDP protocols.

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: foo-allow-to-hello
spec:
  policyTypes:
  - Egress
  podSelector:
    matchLabels:
      app: foo
  egress:
  - to:
    - podSelector:
        matchLabels:
          app: hello
  - to:
    ports:
    - protocol: UDP
      port: 53

Verify egress:

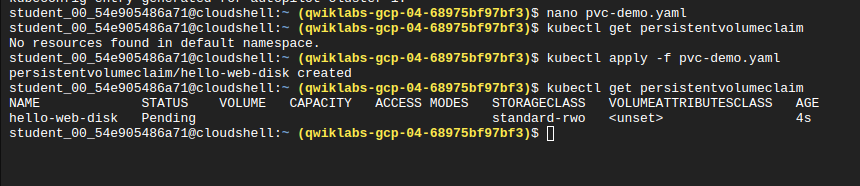

A manifest with a persistent volume claim

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: hello-web-disk
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 30Gi

Check for existing persistent volume claims

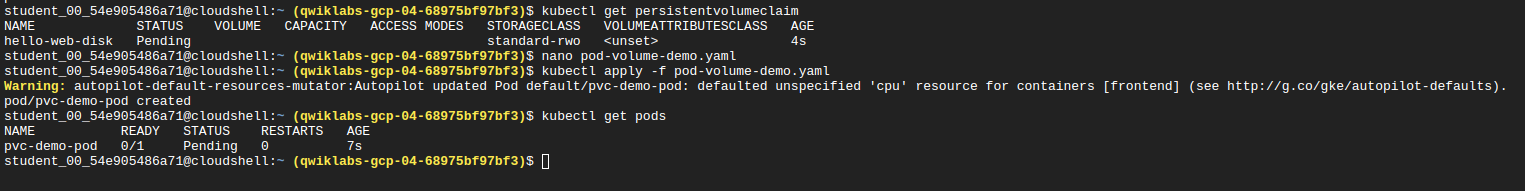

kubectl get persistentvolumeclaim

Mounting the persistent volume claim inside a pod

You mount the PVC as a volume within the pod manifest:

kind: Pod
apiVersion: v1
metadata:
  name: pvc-demo-pod
spec:
  containers:
  - name: frontend
    image: nginx
    volumeMounts:
    - mountPath: "/var/www/html"
      name: pvc-demo-volume
  volumes:
  - name: pvc-demo-volume
    persistentVolumeClaim:
      claimName: hello-web-disk

Shell access to a running pod

kubectl exec -it pvc-demo-pod -- sh

Create a statefulset service with LB, 3 replicas and a persistent volume claim

kind: Service
apiVersion: v1
metadata:
  name: statefulset-demo-service
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376
  type: LoadBalancer
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: statefulset-demo
spec:
  selector:
    matchLabels:
      app: MyApp
  serviceName: statefulset-demo-service
  replicas: 3
  updateStrategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: MyApp
    spec:
      containers:
      - name: stateful-set-container
        image: nginx
        ports:
        - containerPort: 80
          name: http
        volumeMounts:
        - name: hello-web-disk
          mountPath: "/var/www/html"
  volumeClaimTemplates:
  - metadata:
      name: hello-web-disk
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 30Gi

Describe the statefulset resource you created

kubectl describe statefulset statefulset-demo

Describe a PVC within a stateful set

kubectl describe pvc hello-web-disk-statefulset-demo-0