Hybrid Cloud Multi-Cluster

Notes I took whilst studying the "Hybrid Cloud Multi-Cluster with Anthos" course.

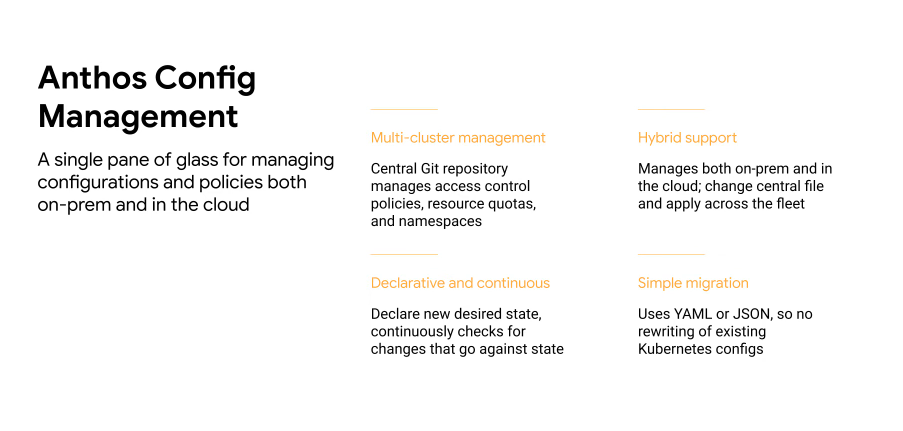

Anthos Config Management (ACM)

Apply software development practices to K8s config: code reviews, version control in Git, etc.

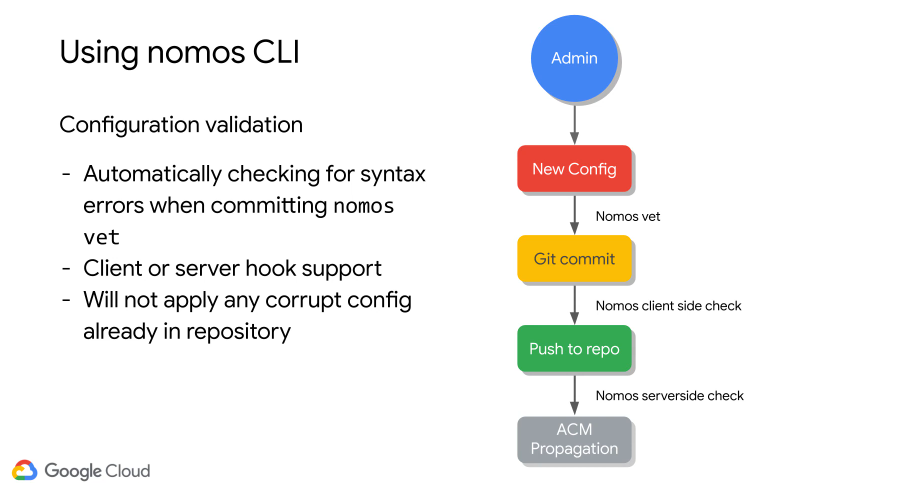

Use validators in Git hooks to check configs before they are committed.

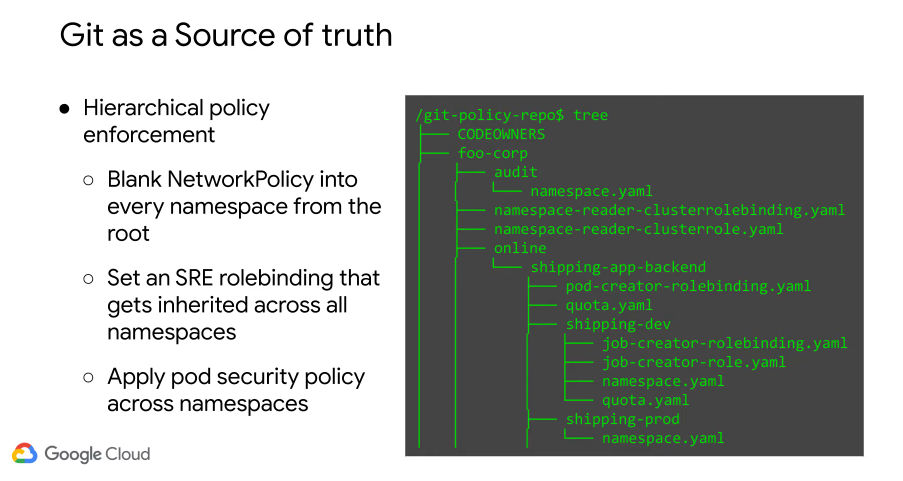
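
As a rough illustration of what a validator hook might check, here is a minimal Python sketch. The function `validate_manifest` is hypothetical, it assumes the YAML files have already been parsed into dicts, and it only checks a few basics (required fields and the DNS-1123 subdomain rule Kubernetes applies to most object names). Real validators check far more.

```python
import re
from typing import Any

# DNS-1123 subdomain: the naming rule Kubernetes applies to most object names.
_DNS1123_SUBDOMAIN = re.compile(
    r"^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$"
)


def validate_manifest(obj: dict[str, Any]) -> list[str]:
    """Hypothetical helper: return the problems found in one parsed manifest."""
    errors: list[str] = []
    for field in ("apiVersion", "kind"):
        if not obj.get(field):
            errors.append(f"missing {field}")
    metadata = obj.get("metadata") or {}
    name = metadata.get("name", "")
    if not name:
        errors.append("missing metadata.name")
    elif len(name) > 253 or not _DNS1123_SUBDOMAIN.match(name):
        errors.append(f"invalid metadata.name {name!r}")
    return errors
```

A hook would run this over every changed manifest and exit non-zero if any list is non-empty.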

The hierarchy of your Git repo drives propagation: put something higher in the hierarchy and every namespace below it gets that policy/config, e.g. a pod security policy applied across namespaces.

For example, to run a DaemonSet everywhere, add it to the repo and ACM enforces that config on all of your clusters.

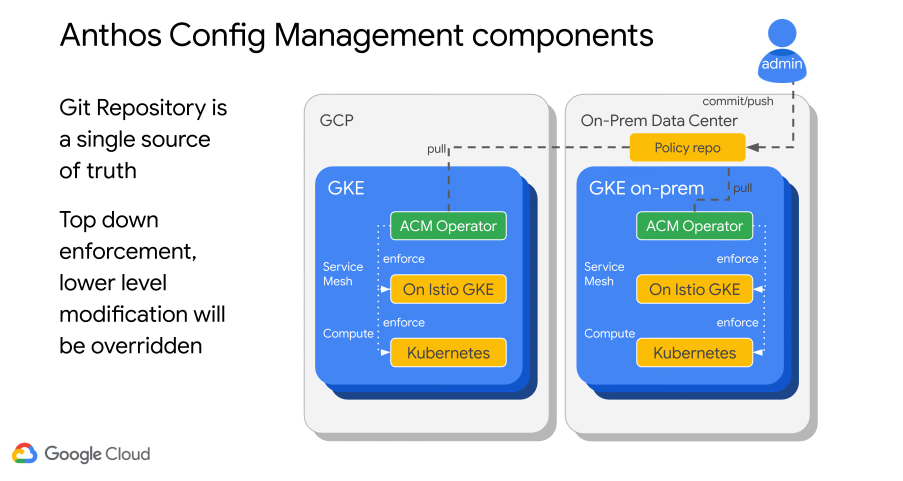
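
The inheritance can be modelled in a few lines of Python. This is a simplified sketch, not ACM code: it assumes a layout like ACM's hierarchical repo format, where directories under `namespaces/` that sit above a namespace (abstract namespaces) pass their configs down to every namespace beneath them. The function name, directory names and config names are made up.

```python
from pathlib import PurePosixPath


def effective_configs(repo: dict[str, list[str]], namespace_dirs: list[str]) -> dict[str, list[str]]:
    """Hypothetical model: map each namespace to the configs it ends up with.

    repo maps a directory path to the configs declared directly in it.
    A namespace inherits everything declared in its ancestor directories.
    """
    result: dict[str, list[str]] = {}
    for ns_dir in namespace_dirs:
        path = PurePosixPath(ns_dir)
        # parents runs from the nearest ancestor up to "."; reverse it and drop "."
        chain = list(reversed(path.parents))[1:] + [path]
        configs: list[str] = []
        for directory in chain:
            configs.extend(repo.get(str(directory), []))
        # The namespace is named after its directory.
        result[path.name] = configs
    return result


# Hypothetical repo: a config at the top of namespaces/ reaches every namespace.
repo = {
    "namespaces": ["rolebinding-auditors"],
    "namespaces/prod": ["networkpolicy-deny-all"],
    "namespaces/prod/web": ["deployment-web"],
    "namespaces/dev/sandbox": ["resourcequota-sandbox"],
}
print(effective_configs(repo, ["namespaces/prod/web", "namespaces/dev/sandbox"]))
```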

ACM Components

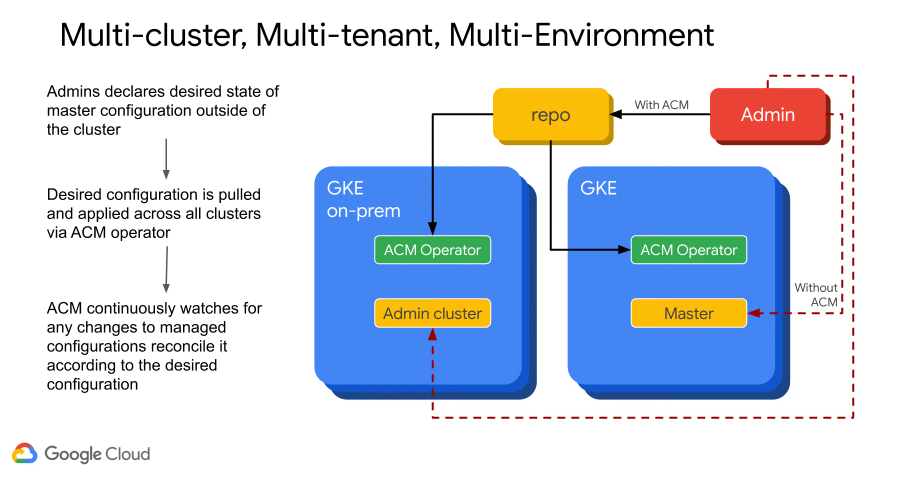

Push config to a Git repo, which can be on-prem or off-prem. ACM pulls from it and enforces the config on both the service mesh and the compute layer.

You also control Istio policies via ACM.

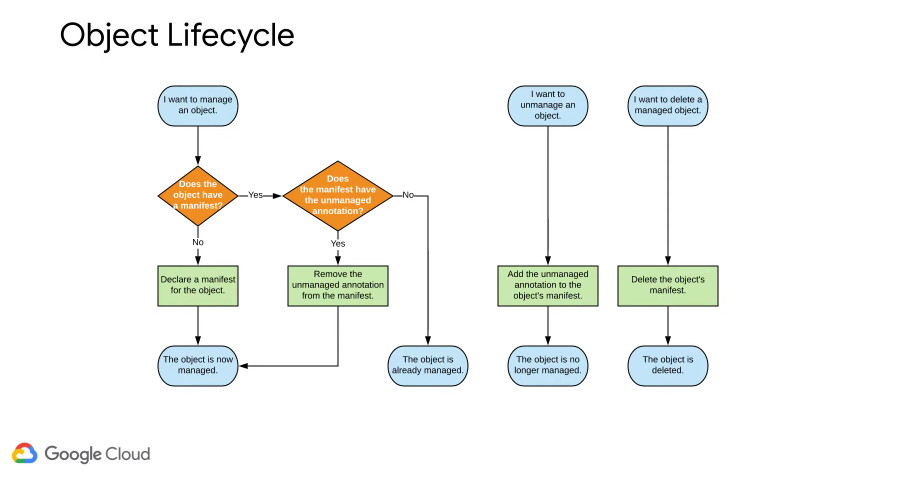

Object Lifecycles

Think of ACM as an object manager.

Any time a managed object drifts from the source of truth (the Git repo), ACM changes it back, e.g. if a deployment is accidentally deleted, ACM recreates it.

The desired state propagates from Git to all clusters, and ACM detects deltas between the existing objects and the declared objects.

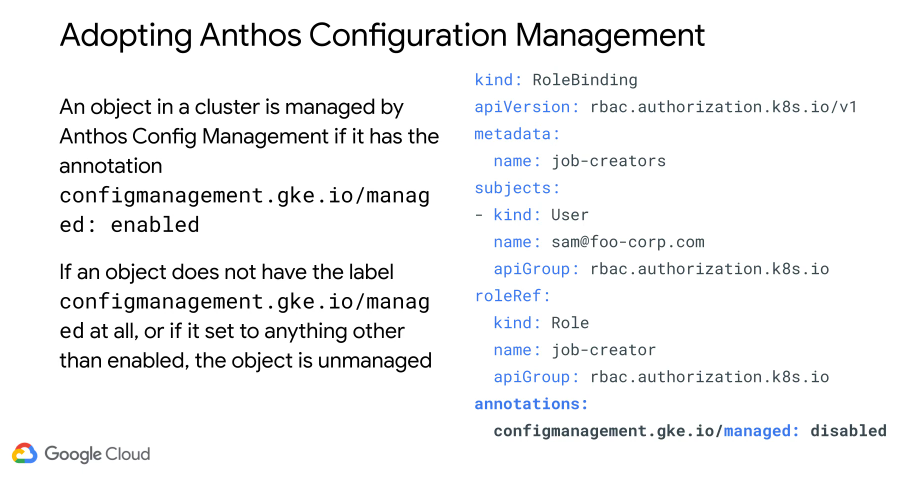

Therefore, any object you want ACM to manage must be declared in YAML in the repo.
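
The drift detection described above boils down to comparing two sets of objects. The sketch below is a simplified model, not ACM's implementation: `plan` is a hypothetical function, objects are plain dicts keyed by (kind, namespace, name), `live` is assumed to hold only objects previously synced from the repo, and whole objects are compared, whereas a real reconciler compares only the fields declared in Git.

```python
from typing import Any

Obj = dict[str, Any]
Key = tuple[str, str, str]  # (kind, namespace, name)


def key_of(obj: Obj) -> Key:
    meta = obj["metadata"]
    return (obj["kind"], meta.get("namespace", ""), meta["name"])


def plan(desired: list[Obj], live: list[Obj]) -> dict[str, list[Key]]:
    """Hypothetical model: what a reconciler would do to make `live` match Git."""
    want = {key_of(o): o for o in desired}
    have = {key_of(o): o for o in live}
    return {
        # Declared in Git but missing from the cluster, e.g. accidentally deleted.
        "create": sorted(k for k in want if k not in have),
        # Present on both sides but changed on the cluster: put Git's version back.
        "update": sorted(k for k in want if k in have and want[k] != have[k]),
        # Previously synced but since removed from Git.
        "delete": sorted(k for k in have if k not in want),
    }


desired = [
    {"kind": "Deployment", "metadata": {"namespace": "default", "name": "web"}, "spec": {"replicas": 3}},
    {"kind": "DaemonSet", "metadata": {"namespace": "kube-system", "name": "agent"}, "spec": {}},
]
live = [
    {"kind": "Deployment", "metadata": {"namespace": "default", "name": "web"}, "spec": {"replicas": 1}},
    {"kind": "ConfigMap", "metadata": {"namespace": "default", "name": "old"}, "data": {}},
]
print(plan(desired, live))
```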

ACM also supports an unmanaged declaration: you tell ACM in the repo that an object is not managed, and that setting is propagated across your clusters.
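
As far as I know, the declaration is the annotation `configmanagement.gke.io/managed: disabled` on the object in the repo (check the Config Sync docs for your version). The Python below is a hypothetical model of how a reconciler could honour it by skipping such objects; the function names are made up and this is not ACM code.

```python
from typing import Any

# Annotation assumed to opt an object out of management.
MANAGED_ANNOTATION = "configmanagement.gke.io/managed"


def is_managed(obj: dict[str, Any]) -> bool:
    """True unless the object is explicitly marked as unmanaged."""
    annotations = obj.get("metadata", {}).get("annotations") or {}
    return annotations.get(MANAGED_ANNOTATION) != "disabled"


def managed_only(objs: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Drop unmanaged objects before comparing Git with the cluster."""
    return [o for o in objs if is_managed(o)]
```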

The nomos CLI can check config syntax, including from Git hooks.
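
A pre-commit hook can simply shell out to `nomos vet`, the nomos subcommand for validating a config repo. The Python below is a minimal sketch: `build_vet_command` and `run_nomos_vet` are hypothetical helpers, and it assumes nomos is on the PATH and the hook runs from the repo root.

```python
import shutil
import subprocess
import sys


def build_vet_command(repo_root: str) -> list[str]:
    """Command line that validates the config repo rooted at repo_root."""
    return ["nomos", "vet", f"--path={repo_root}"]


def run_nomos_vet(repo_root: str = ".") -> int:
    """Run nomos vet and return its exit code (0 means the configs passed)."""
    if shutil.which("nomos") is None:
        # Design choice: warn and let the commit through rather than block it.
        print("nomos not found on PATH; skipping config validation", file=sys.stderr)
        return 0
    return subprocess.run(build_vet_command(repo_root), check=False).returncode
```

A `.git/hooks/pre-commit` script could end with `sys.exit(run_nomos_vet())`; Git aborts the commit when the hook exits non-zero. Failing when nomos is missing, instead of skipping, would be the stricter choice.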

Multi-Cluster Operations

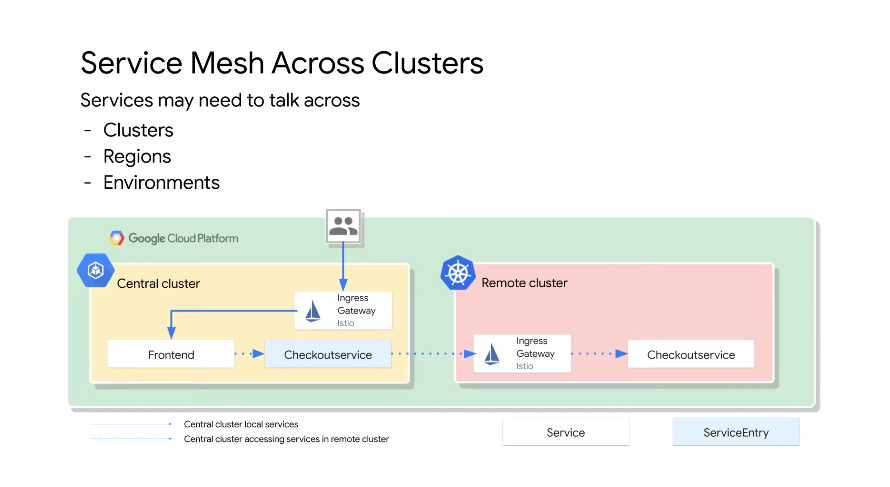

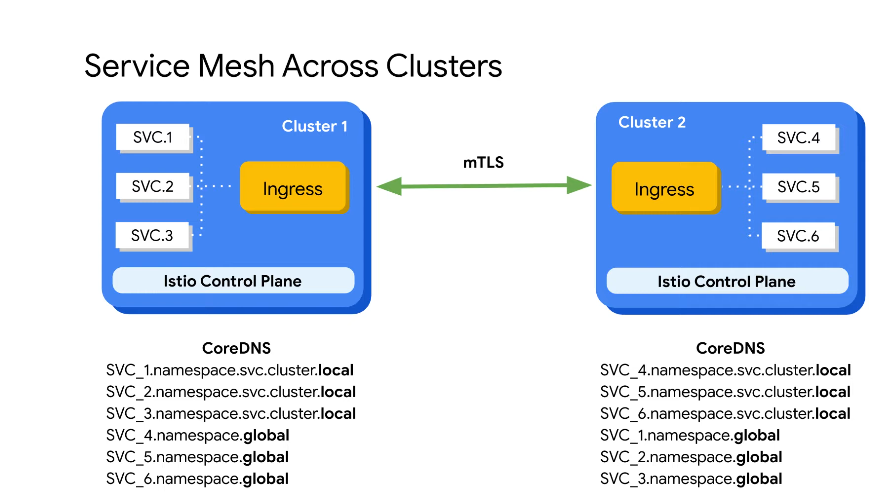

A single service mesh can span clusters, e.g. for multi-region communication.

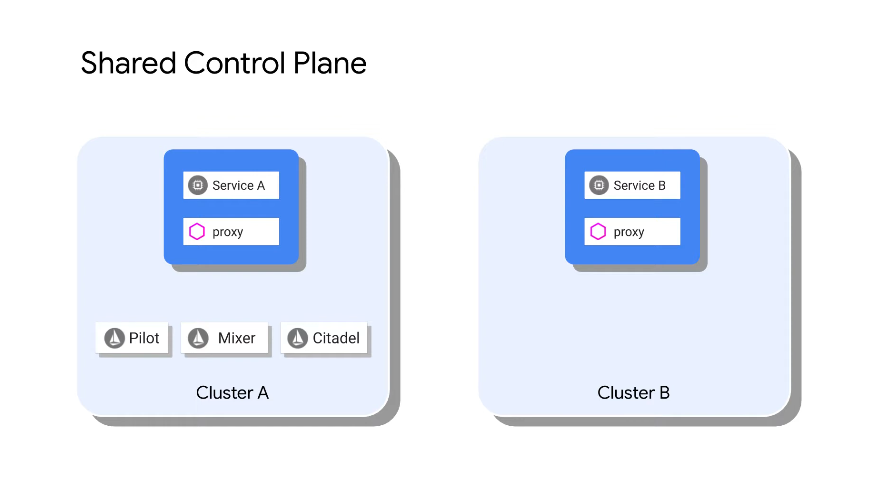

Shared Control Plane

The core control plane components live in one cluster.

The proxies in cluster B are also managed by this shared control plane, which runs on cluster A. Both clusters are in the same VPC.

This shared control plane becomes a single point of failure. The Envoy (Istio proxy) sidecars cache their configuration, but they can't operate for long without the control plane.

The IP address ranges of the on-prem and off-prem clusters must not overlap.

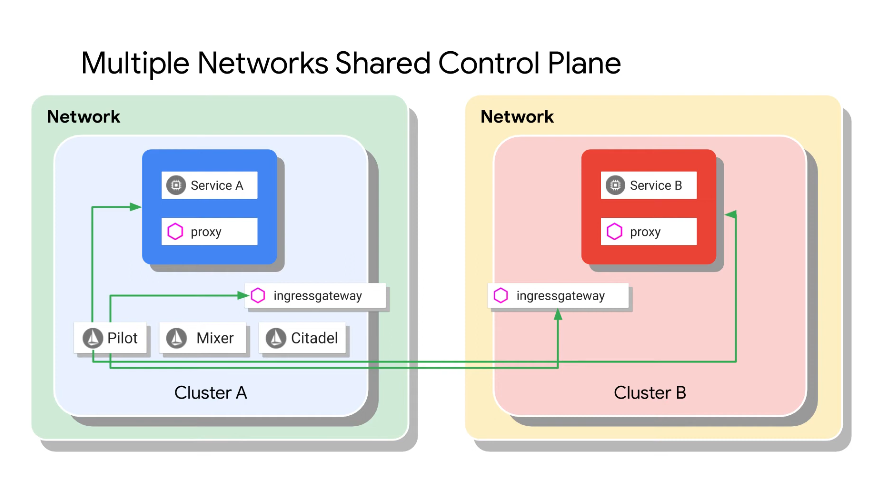

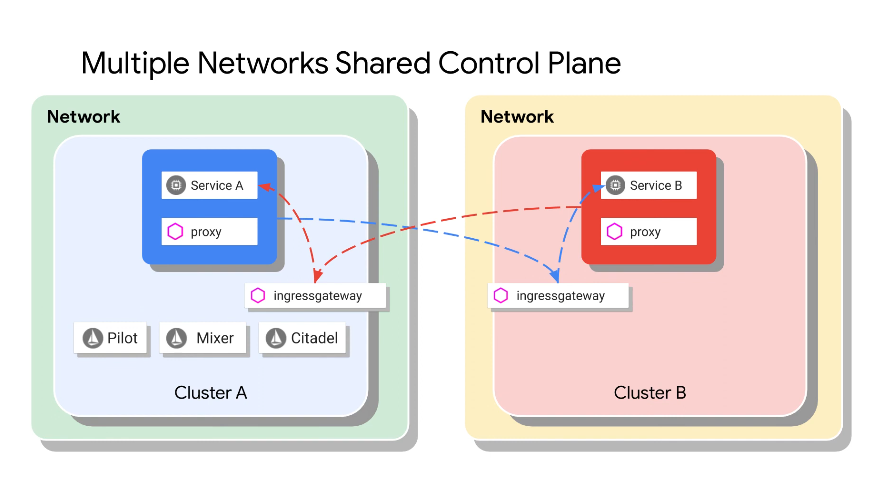
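
Before connecting the clusters, the ranges can be checked with Python's standard `ipaddress` module. This is a sketch; `overlapping_ranges` is a hypothetical helper and the CIDRs are made-up pod and service ranges, not values from the course.

```python
import ipaddress
from itertools import product


def overlapping_ranges(cluster_a: list[str], cluster_b: list[str]) -> list[tuple[str, str]]:
    """Return every (a, b) pair of CIDR ranges that overlap between two clusters."""
    nets_a = [ipaddress.ip_network(cidr) for cidr in cluster_a]
    nets_b = [ipaddress.ip_network(cidr) for cidr in cluster_b]
    return [
        (str(a), str(b))
        for a, b in product(nets_a, nets_b)
        # Only compare ranges of the same IP version.
        if a.version == b.version and a.overlaps(b)
    ]


# Hypothetical pod and service CIDRs for an on-prem and an off-prem cluster.
on_prem = ["10.0.0.0/16", "10.96.0.0/12"]
off_prem = ["10.4.0.0/14", "10.100.0.0/20"]
print(overlapping_ranges(on_prem, off_prem))
```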

Multi-Network Shared Control Plane

The two clusters are not on the same network, so the remote cluster reaches the control plane through the ingress gateway.

Services also communicate with each other across clusters via the ingress gateway.

Cluster A is still a single point of failure, as there is only one control plane (Pilot, Mixer, Citadel).

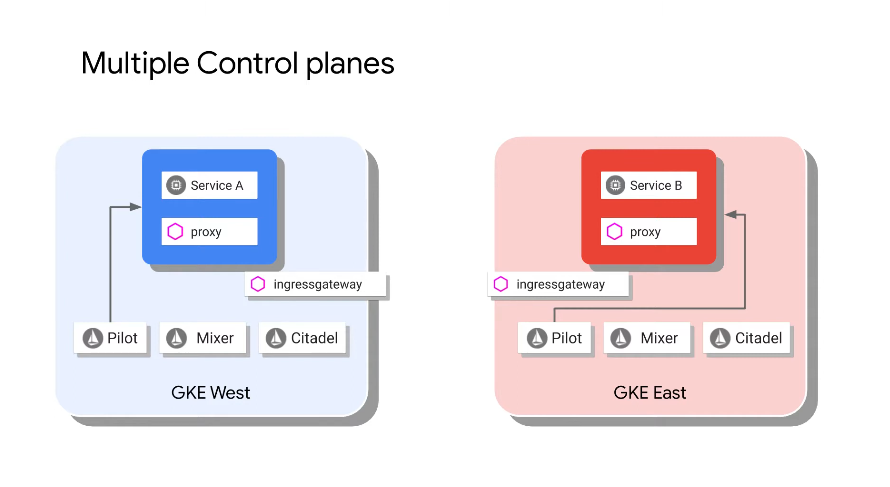

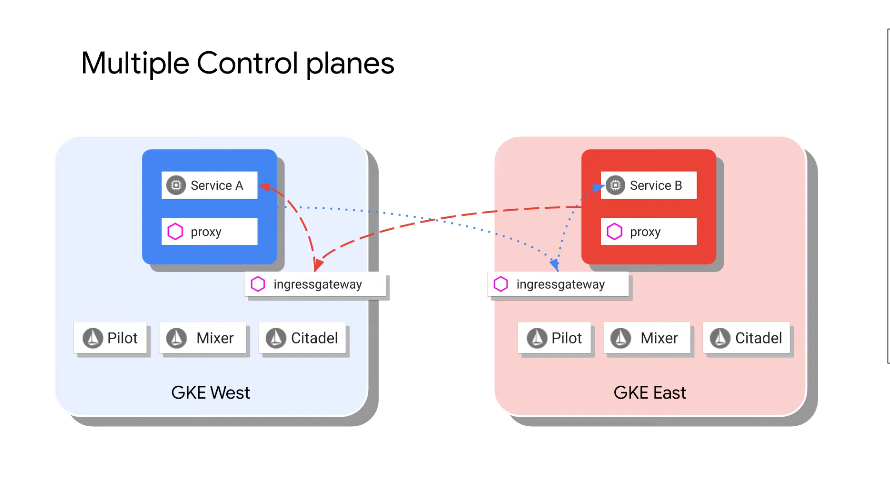
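
Roughly, the control plane gives each proxy a different view of a service's endpoints depending on which network the proxy is on: local pods directly, remote pods via their network's gateway. As far as I know, Istio calls this split-horizon EDS. The Python below is a simplified, hypothetical model of the idea (real Istio also weights the gateway by how many endpoints sit behind it); the class, function, addresses, network names and port 15443 are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    address: str
    port: int
    network: str


def endpoints_for(
    proxy_network: str,
    endpoints: list[Endpoint],
    gateways: dict[str, tuple[str, int]],
) -> list[tuple[str, int]]:
    """Addresses a proxy on proxy_network should use to reach one service."""
    result: list[tuple[str, int]] = []
    for ep in endpoints:
        if ep.network == proxy_network:
            # Same network: pod IPs are routable, so connect directly.
            result.append((ep.address, ep.port))
        else:
            # Other network: send traffic to that network's ingress gateway instead.
            gateway = gateways[ep.network]
            if gateway not in result:
                result.append(gateway)
    return result


eps = [
    Endpoint("10.0.1.5", 8080, "network-a"),
    Endpoint("10.0.1.6", 8080, "network-a"),
    Endpoint("192.168.3.7", 8080, "network-b"),
]
gateways = {"network-a": ("198.51.100.1", 15443), "network-b": ("203.0.113.1", 15443)}
print(endpoints_for("network-a", eps, gateways))
```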

Multi-Control Plane

The control plane is replicated in each cluster.

Services still communicate across clusters via the ingress gateway.

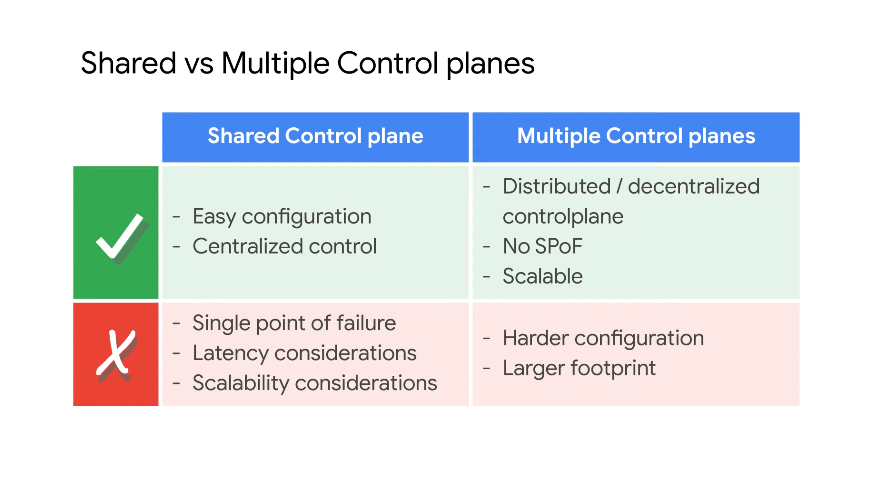

Shared vs Multi-Control Plane

A shared control plane adds latency, because Pilot has to fetch and manage information from the remote clusters.

Use multiple control planes when services across clusters collaborate more loosely, i.e. only a subset of one cluster's services needs to communicate with a subset of another cluster's services.

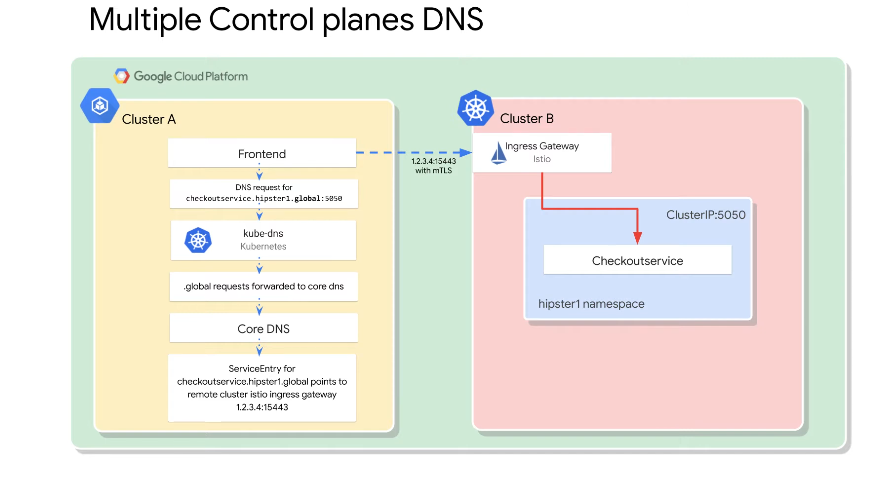

Naming and Security for Multi-Control Plane

In the example above, names ending in .global are forwarded to core-dns, which the mesh now takes care of. core-dns resolves the name so that traffic goes to the ingress gateway for the service in the other cluster.

Service names ending in .local in cluster 1 are resolved locally by kube-dns.

Queries for names ending in .global go to kube-dns, which forwards them to core-dns.
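
The resolution rule can be sketched as a lookup that picks a DNS server by suffix. This is a toy Python model, not how kube-dns or CoreDNS are configured: in a real cluster the forwarding is a kube-dns stub domain for `global` pointing at the mesh's CoreDNS. The `resolve` function and the record tables are hypothetical.

```python
from typing import Optional


def resolve(
    hostname: str,
    local_records: dict[str, str],
    global_records: dict[str, str],
) -> tuple[str, Optional[str]]:
    """Return (which DNS server answered, address or None if unknown)."""
    name = hostname.rstrip(".")
    if name.endswith(".global"):
        # kube-dns forwards the "global" domain to the mesh's core-dns, which
        # answers with the address declared for the remote service.
        return "core-dns", global_records.get(name)
    # Cluster-local names such as *.svc.cluster.local stay with kube-dns.
    return "kube-dns", local_records.get(name)


local = {"web.default.svc.cluster.local": "10.96.0.20"}
remote = {"httpbin.bar.global": "240.0.0.2"}
print(resolve("web.default.svc.cluster.local", local, remote))
print(resolve("httpbin.bar.global", local, remote))
```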

With multiple control planes you need to configure services outside your cluster manually: cross-cluster services that depend on one another each need a manually created ServiceEntry.

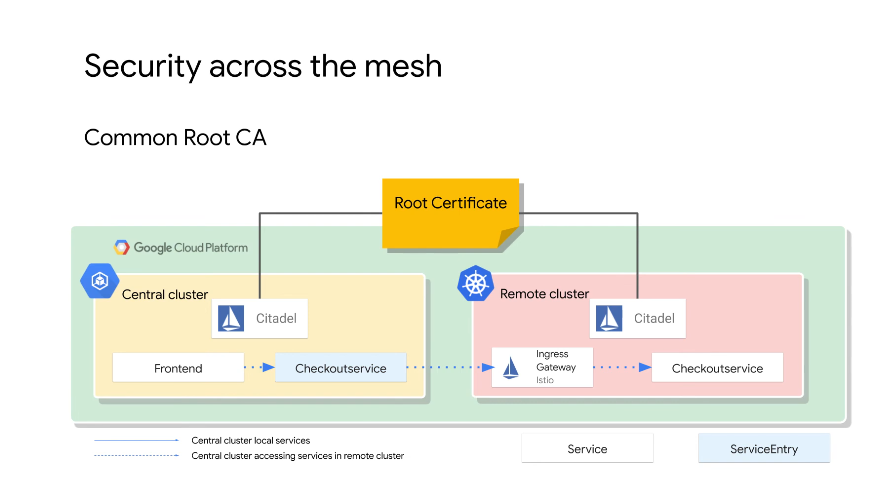
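
A ServiceEntry for a remote service might look like the object built below. The Python function assembles it as a dict and prints it as JSON, which `kubectl apply -f` also accepts. The field layout follows the Istio 1.x multi-cluster examples as I remember them, including port 15443 on the remote cluster's ingress gateway; the function name, service, namespace, VIP and gateway IP are hypothetical, so check the Istio docs for your version.

```python
import json
from typing import Any


def remote_service_entry(
    service: str, namespace: str, port: int, vip: str, remote_gateway: str
) -> dict[str, Any]:
    """Hypothetical helper: ServiceEntry exposing <service>.<namespace>.global."""
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "ServiceEntry",
        "metadata": {"name": f"{service}-{namespace}"},
        "spec": {
            "hosts": [f"{service}.{namespace}.global"],
            # Treat the remote service as part of the mesh (mTLS applies).
            "location": "MESH_INTERNAL",
            "ports": [{"name": "http1", "number": port, "protocol": "http"}],
            "resolution": "DNS",
            # Local address that .global names resolve to.
            "addresses": [vip],
            # Traffic actually goes to the other cluster's ingress gateway.
            "endpoints": [{"address": remote_gateway, "ports": {"http1": 15443}}],
        },
    }


print(json.dumps(remote_service_entry("httpbin", "bar", 8000, "240.0.0.2", "203.0.113.10"), indent=2))
```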

Security Across Meshes

Both meshes need a shared root certificate. Citadel in each mesh points to the same root CA, and services outside the cluster/mesh must also be updated to use the same root certificate.
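
One quick check that two meshes really share a root is to compare the SHA-256 fingerprints of the root certificate each Citadel uses. In Istio 1.x with plugged-in CA certificates this is the `root-cert.pem` entry of the `cacerts` secret in `istio-system`. The sketch below assumes you have already fetched that PEM text from each cluster; the helper names are hypothetical.

```python
import hashlib
import ssl


def root_fingerprint(pem: str) -> str:
    """SHA-256 fingerprint of a PEM-encoded certificate (hash of its DER bytes)."""
    der = ssl.PEM_cert_to_DER_cert(pem)
    return hashlib.sha256(der).hexdigest()


def share_root_ca(root_pems: dict[str, str]) -> bool:
    """True if every cluster's root certificate is byte-for-byte the same cert."""
    fingerprints = {root_fingerprint(pem) for pem in root_pems.values()}
    return len(fingerprints) == 1
```

For example, `share_root_ca({"cluster-1": pem_1, "cluster-2": pem_2})` should be true before cross-mesh mTLS can be expected to work.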