TensorFlow - Data API

Some notes on the high-level data API in TensorFlow.

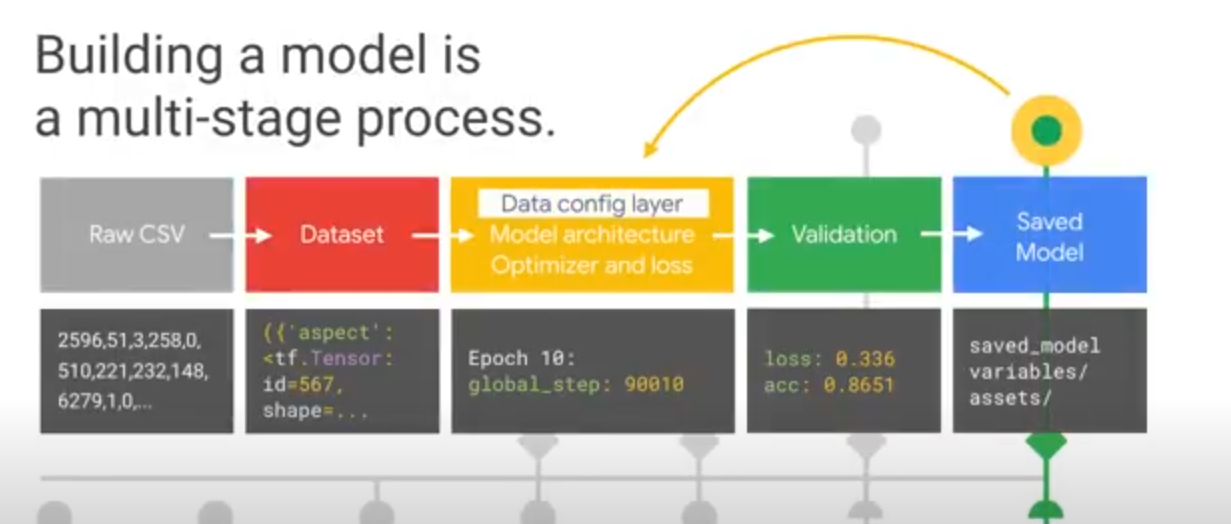

Process

- Collect, clean and process your data

- Prototype and develop your model architecture

- Train and evaluate results

- Prepare the model for production serving

TensorFlow provides functionality for each of these stages.

Dev Process with Eager Execution

Develop models in eager mode whilst iterating:

import tensorflow as tf

tf.enable_eager_execution()

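With eager execution enabled, operations run immediately instead of being added to a graph that is executed later. In TensorFlow 2.x eager execution is on by default, and the call above is only available as tf.compat.v1.enable_eager_execution().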
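A minimal sketch of what "immediately" means in practice, assuming TensorFlow 2.x (where no setup call is needed). The values are made up for illustration:

```python
import tensorflow as tf

# In TensorFlow 2.x this is True without any setup call.
eager = tf.executing_eagerly()

# The matmul runs as soon as this line executes; there is no session or graph to run.
x = tf.constant([[1.0, 2.0], [3.0, 4.0]])
y = tf.matmul(x, x)

# The concrete values are available straight away as a NumPy array.
result = y.numpy().tolist()
print(result)
```

Being able to inspect intermediate values like this is what makes eager mode convenient while iterating on a model.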

Loading Data

A TF Dataset plays a similar role to a dataframe or NumPy array, but is optimised for feeding data to training.

Each row is a tuple of tensors, and the components of the tuple can have different types.
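As a rough illustration of the row-as-tuple idea, the sketch below builds a dataset from made-up in-memory data (the feature values and labels are hypothetical) and inspects the structure of a single row:

```python
import tensorflow as tf

# Hypothetical toy data: 4 rows, each with a float feature vector and an integer label.
features = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.7, 0.8]]
labels = [0, 1, 0, 1]

dataset = tf.data.Dataset.from_tensor_slices((features, labels))

# element_spec describes one row: a tuple with one TensorSpec per component.
feature_spec, label_spec = dataset.element_spec
print(feature_spec, label_spec)

# Iterating yields one (features, label) tuple per row.
first_features, first_label = next(iter(dataset))
```

Here the two components of each row have different dtypes (float32 and int32) and different shapes.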

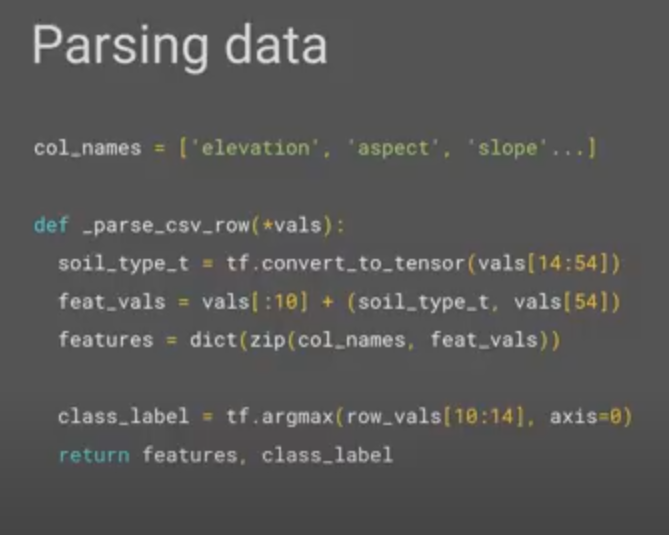

You can apply a function to the data row by row to transform each row into a set of features and a class label.

def _parse_data_function(*vals):

...

...

Separate and group columns of features at this stage.

For example, say an enum has been one-hot encoded across columns 14-53 of the dataset (the slice vals[14:54], whose end index is exclusive). We can group those columns into a single tensor:

soil_type = tf.convert_to_tensor(vals[14:54])

This means soil_type can be learnt as a single feature, not as the 40 separate columns selected by vals[14:54].
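A sketch of how such a grouping could look inside a parse function. The function name _parse_row, the 55-column layout, the "numeric" group and the position of the label are all assumptions made for illustration, not the layout of the real dataset:

```python
import tensorflow as tf

def _parse_row(*vals):
    # Hypothetical layout: columns 0-13 numeric, 14-53 one-hot soil type, 54 the label.
    numeric = tf.stack(list(vals[:14]))
    # Pack the 40 one-hot scalars into a single tensor of shape (40,).
    soil_type = tf.convert_to_tensor(vals[14:54])
    label = vals[54]
    features = {"numeric": numeric, "soil_type": soil_type}
    return features, label

# Build one fake row of 55 scalar tensors with soil type index 6 switched on.
row = [tf.constant(0.0)] * 55
row[20] = tf.constant(1.0)
row[54] = tf.constant(3.0)

features, label = _parse_row(*row)
print(features["soil_type"].shape)
```

Returning a dictionary of named feature groups keeps each group addressable by name later on.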

Apply Function to Dataset

dataset = dataset.map(_parse_data_function).batch(BATCH_SIZE)

map applies the function to each row, and batch then groups the parsed rows into batches of BATCH_SIZE.
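To make the order of operations concrete, here is a small sketch with a stand-in function (_double is hypothetical and just doubles each element) on a dataset of ten integers:

```python
import tensorflow as tf

BATCH_SIZE = 4

def _double(x):
    # Stand-in for a parse function: it receives one element at a time.
    return x * 2

# map runs per element first; batch then groups the mapped elements.
dataset = tf.data.Dataset.range(10).map(_double).batch(BATCH_SIZE)

batches = [batch.numpy().tolist() for batch in dataset]
print(batches)
```

The final batch holds the two leftover elements; passing drop_remainder=True to batch would discard it instead.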

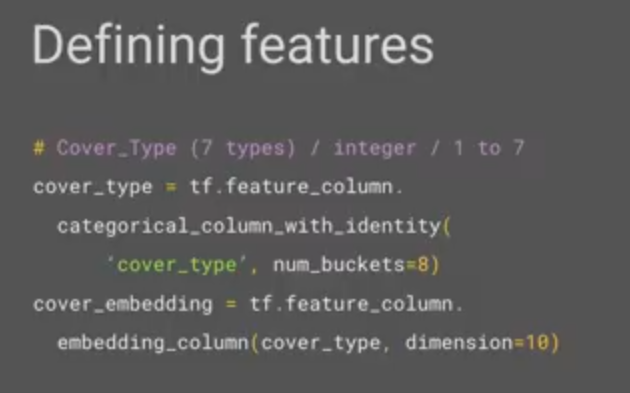

Feature Columns

Feature columns are config classes that define how raw inputs are mapped into the numeric form the model consumes, for example turning categorical data into continuous numeric data.

Categories can be converted into embedding columns.

The transformations are part of the model graph, so they can be exported as part of the saved model. This is an advantage over preprocessing the data yourself, since the same transformations are applied at training and serving time.
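A minimal sketch of declaring feature columns, assuming a TensorFlow version that still ships the tf.feature_column module (it is deprecated in recent releases). The feature names, vocabulary and embedding size are hypothetical:

```python
import tensorflow as tf

# Hypothetical categorical feature with a small, known vocabulary.
category = tf.feature_column.categorical_column_with_vocabulary_list(
    key="wilderness_area",
    vocabulary_list=["rawah", "neota", "comanche", "cache"],
)

# Wrap the categorical column so each category is learnt as a dense 4-d vector.
embedded = tf.feature_column.embedding_column(category, dimension=4)

# Numeric features pass through as-is.
numeric = tf.feature_column.numeric_column("elevation")

feature_columns = [embedded, numeric]
```

These objects only describe the mapping; nothing is transformed until a feature layer or estimator consumes them.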

Feature Layer

The feature columns make up an initial feature layer - this layer's responsibility is to transform the data into the representations the neural net is expecting.

model = tf.keras.Sequential([

feature_layer,

...

...

...

])

Any embeddings defined by the feature columns are trained as part of the feature layer.

Compiling the Model

TensorFlow provides a range of optimizers and loss functions; you choose them when compiling the model.
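A sketch of the compile step. The layer sizes, the Adam optimizer, the learning rate and the sparse categorical cross-entropy loss are illustrative choices, not ones taken from these notes:

```python
import tensorflow as tf

# Hypothetical small classifier with 3 output classes.
model = tf.keras.Sequential([
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(3, activation="softmax"),
])

# compile attaches the optimizer, loss and metrics used by fit and evaluate.
model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=0.001),
    loss="sparse_categorical_crossentropy",
    metrics=["accuracy"],
)
```

The sparse variant of the loss expects integer class labels rather than one-hot vectors.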

Validate Model

Load the validation dataset using the same process as the train dataset.

Call evaluate on the model you created.
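The validation flow might look roughly like this. The make_dataset helper, the random data and the model are all hypothetical stand-ins; the point is only that the validation dataset is built the same way as the training one and then passed to evaluate:

```python
import tensorflow as tf

def make_dataset(n):
    # Hypothetical loader: random features and labels, built identically for train and validation.
    features = tf.random.uniform((n, 4))
    labels = tf.random.uniform((n,), maxval=3, dtype=tf.int32)
    return tf.data.Dataset.from_tensor_slices((features, labels)).batch(8)

train_ds = make_dataset(32)
val_ds = make_dataset(16)

model = tf.keras.Sequential([
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(3, activation="softmax"),
])
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])
model.fit(train_ds, epochs=1, verbose=0)

# evaluate returns the loss followed by each compiled metric.
loss, accuracy = model.evaluate(val_ds, verbose=0)
```

With random data the accuracy is meaningless; only the shape of the workflow matters here.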

Saving for Deployment

The TensorFlow SavedModel format is the format used for serving. Exporting the model in this format lets you reuse it for serving and with the full suite of TensorFlow tools.
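A minimal sketch of an export and reload round trip. To keep it small it uses a plain tf.Module (TinyModel, with made-up weights) as a stand-in for a trained model, and a temporary directory as the export path:

```python
import tempfile
import tensorflow as tf

class TinyModel(tf.Module):
    """Hypothetical stand-in for a trained model: a fixed linear map."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable([[2.0], [1.0]])

    # The input signature fixes the tensor shape and dtype that get exported.
    @tf.function(input_signature=[tf.TensorSpec([None, 2], tf.float32)])
    def __call__(self, x):
        return tf.matmul(x, self.w)

model = TinyModel()
export_dir = tempfile.mkdtemp()

# Write the variables and the traced function in SavedModel format.
tf.saved_model.save(model, export_dir)

# Reload without needing the original Python class.
restored = tf.saved_model.load(export_dir)
prediction = restored(tf.constant([[1.0, 3.0]])).numpy().tolist()
print(prediction)
```

The reloaded object carries its own computation, which is what allows serving tools to run it without the training code.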

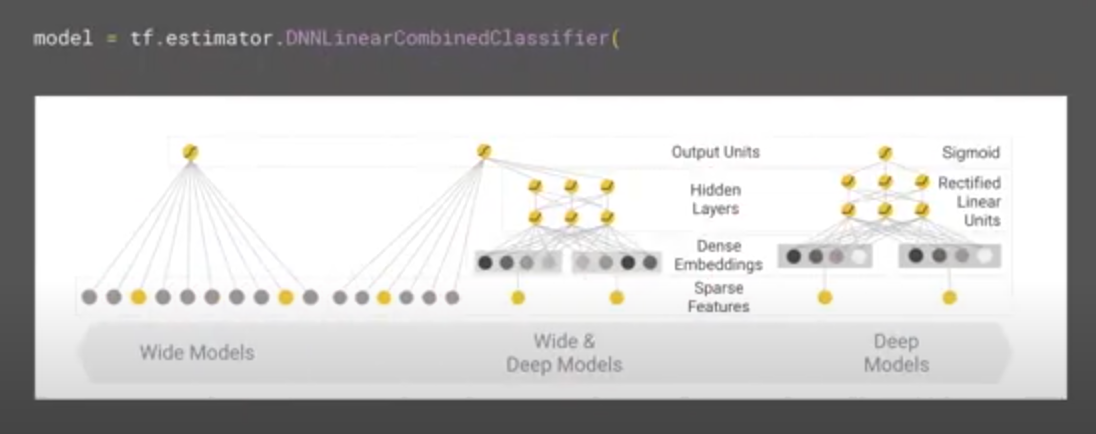

Swapping the Model using Canned Estimators

TensorFlow comes with a number of canned estimators, including models that don't fit neatly into the layered, sequential style expressed in Keras. Using them lets you exploit existing research on model structures.

Wide and Deep Model

tf.estimator.DNNLinearCombinedClassifier(....)

This combines a linear model with a deep neural network. Categorical features go to the linear half and numerical features go to the DNN half.

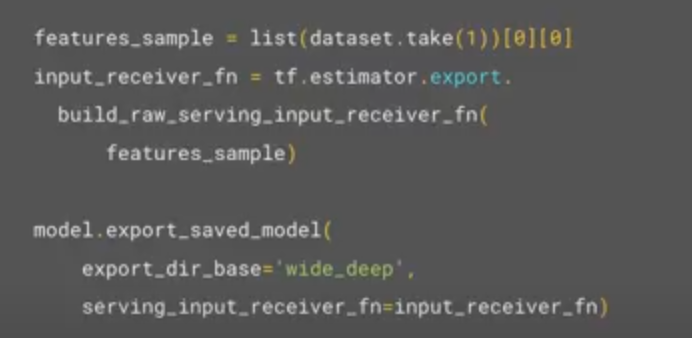

Input Receiver Function

The input receiver function defines the tensors, and their shapes, that we expect at serving time, i.e. the inputs we want to run inference on.

This means you can have the inference data in a different format to training.

You can pass this serving input receiver function to the model export so that it's included in the exported model and usable across the entire TensorFlow suite.