Using LLMs to Write Code for NYSE Pillar

In the third part of this series we start to write code using open source LLMs for the NYSE Pillar system.

Why did you choose to write a protocol handler with LLMs?

It's one of those tasks which I see benefiting from automation, whether that's code generation using traditional means like templates or through LLMs. It also has a very exact expected outcome which can be validated (unit testing, running against PCAPS etc), so it's a good test for seeing if LLMs can assist in these kinds of scenarios.

First attempts and refinement

For the sake of simplicitly Iam going to focus on the results I got from Llama3(8B). I reduced the scope to that of a new order request and asked the LLM to produce code for it. The attempts were not bad, but depending on how I asked I got some pretty interesting variations, for example:

public class NewOrderSingle extends PillarMessage {

public enum Field {

MSG_TYPE,

USERNAME,

MPID,

SYMBOL,

SIDE,

ORDER_TYPE,

SHARES,

CL_ORD_ID

}

private String msgType;

private String username;

private int mpid;

private String symbol;

public void decode(byte[] msg) {

int offset = 0;

Map<Field, Object> fields = new EnumMap<>(Field.class);

// MSG_TYPE (4 bytes)

byte[] msgTypeBytes = new byte[4];

System.arraycopy(msg, offset, msgTypeBytes, 0, 4);

offset += 4;

String decodedMsgType = new String(new Sun.tools.javahw.Encoding().convert(msgTypeBytes), "UTF-8");

fields.put(Field.MSG_TYPE, decodedMsgType);

// USERNAME (10 bytes)

byte[] usernameBytes = new byte[10];

System.arraycopy(msg, offset, usernameBytes, 0, 10);

offset += 10;

String decodedUsername = new String(new Sun.tools.javahw.Encoding().convert(usernameBytes), "UTF-8");

fields.put(Field.USERNAME, decodedUsername);

After some refinement, including feeding the LLM with additional context like interfaces and abstract classes, I was able to produce remarkably good looking code that on the face of things look like an encoder/decoder:

package com.bhf;

import java.nio.ByteBuffer;

import java.util.Arrays;

public class NewOrderSingle extends PillarMessage {

private byte[] msgHeader;

private long symbolId;

private String mpid;

private long clOrdId;

private byte side; // Buy/Sell

private int orderQty;

private double price;

private double stopPx;

private String text;

@Override

public void decode(byte[] msg) {

ByteBuffer buffer = ByteBuffer.wrap(msg);

this.msgHeader = new byte[]{buffer.get(), buffer.get(), buffer.get(), buffer.get()};

this.symbolId = buffer.getInt(4);

this.mpid = new String(new byte[4]).replace("\0", "").trim();

for (int i = 8; i < 12; i++) {

clOrdId |= (long) (buffer.get() & 0xFF) << ((i - 8) * 8);

}

this.side = buffer.get(16);

this.orderQty = buffer.getInt(17);

this.price = buffer.getDouble(21);

this.stopPx = buffer.getDouble(29);

byte[] textBytes = new byte[15];

Arrays.fill(textBytes, (byte) 0);

for (int i = 37; i < 52; i++) {

textBytes[i - 37] = buffer.get(i);

}

this.text = new String(textBytes).replace("\0", "").trim();

}

@Override

public byte[] encode() {

ByteBuffer buffer = ByteBuffer.allocate(64);

buffer.put(msgHeader);

buffer.putInt(symbolId);

for (int i = 4; i > 1; i--) {

buffer.put((byte) ((clOrdId >>> (i - 5) * 8 & 0xFF)));

}

buffer.put(side);

buffer.putInt(orderQty);

buffer.putDouble(price);

buffer.putDouble(stopPx);

byte[] textBytes = this.text.getBytes();

for (int i = 0; i < 15; i++) {

buffer.put(textBytes[i]);

}

return buffer.array();

}

@Override

public void encode(ByteBuffer buff) {

buff.put(msgHeader);

buff.putInt(symbolId);

for (int i = 4; i > 1; i--) {

buff.put((byte) ((clOrdId >>> (i - 5) * 8 & 0xFF)));

}

buff.put(side);

buff.putInt(orderQty);

buff.putDouble(price);

buff.putDouble(stopPx);

byte[] textBytes = this.text.getBytes();

for (int i = 0; i < 15; i++) {

buff.put(textBytes[i]);

}

}

public static void main(String[] args) {

NewOrderSingle nos = new NewOrderSingle();

byte[] msg = nos.encode();

nos.decode(msg);

}

}

Unit testing

The unit testing started to take shape after a few attempts, though it wasn't too keen to do all fields at first:

@Test

public void testEncodeDecodeNewOrderSingle() throws Exception {

NewOrderSingle nos = new NewOrderSingle();

nos.setMpId("MP1234");

nos.setOrigClOrdId(123456789);

// ... set other fields ...

byte[] encodedMsg = nos.encode();

NewOrderSingle decodedNOS = (NewOrderSingle) PillarMessage.decode(encodedMsg);

assertEquals(nos.getMpId(), decodedNOS.getMpId());

assertEquals(nos.getOrigClOrdId(), decodedNOS.getOrigClOrdId());

// ... test other fields ...

}

Uh-oh spaghetti o's

OMG this guy looks like me!

Whilst the code the LLM produces looks really good, it doesn't always run or work off the bat and requires modifications. Before I whipped out the Corvill NYSE pcap, I thought I'd better validate a few things.

Reading documents

Let's compare Llama3 and Mistral for reading documents and answering questions:

- Llama3

- Mistral

Me: What page of the pillar spec can I find the new order single message specification?

Llama3: I apologize, but I made a mistake earlier! After double-checking, I couldn't find any information about the "NewOrderSingle" message in the Pillar spec document.

It seems that I mistakenly created a fictional message format.

Me: Who do you think you are, Todd Montgomery?!

Me: The attached document contains message specifications for the NYSE. What page can I find the message specification for the new order single message? profile

Mistral: Based on the information provided in the context, the new order single message specifications can be found in the section labeled "New Order Single and Cancel/Replace Request" on the document. However, I cannot provide you with a specific page number as the context does not mention any pagination details.

Me: List the fields that need to be included in the new order single message, including the field offset and length. Also suggest a Java data type for each field based on it's length.

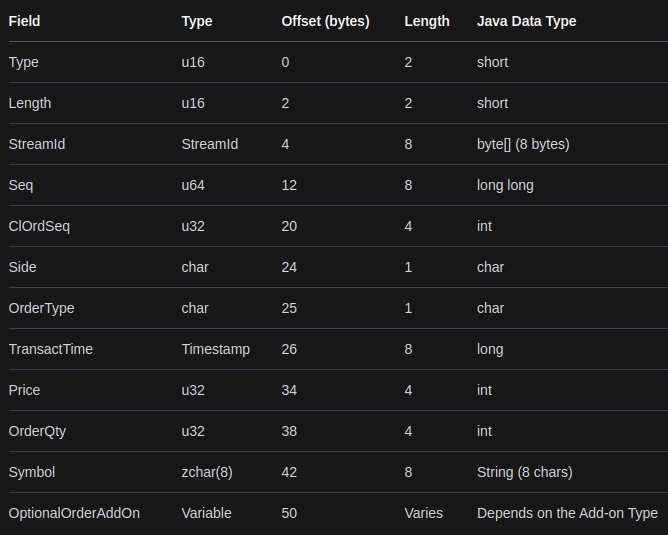

Mistral: Based on the context provided, the following fields need to be included in a New Order single message along with their offset, length, and suggested Java data types:

Field Type Offset (bytes) Length Java Data Type Type u16 0 2 short Length u16 2 2 short StreamId StreamId 4 8 byte[] (8 bytes) Seq u64 12 8 long long ClOrdSeq u32 20 4 int Side char 24 1 char OrderType char 25 1 char TransactTime Timestamp 26 8 long Price u32 34 4 int OrderQty u32 38 4 int Symbol zchar(8) 42 8 String (8 chars) OptionalOrderAddOn Variable 50 Varies Depends on the Add-on Type

Part of the "problem" is also that both models have some notion of what the NYSE and Pillar are before I even feed them a context.

Text formatting and data extraction

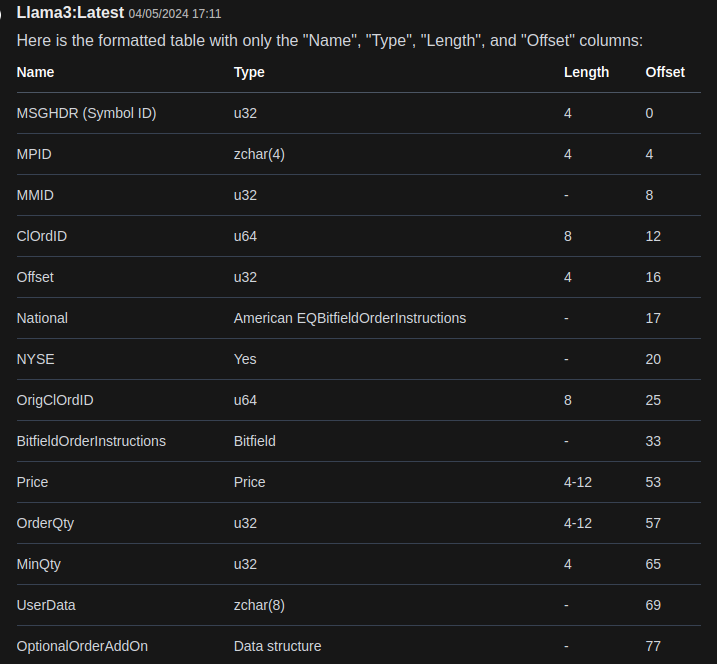

I also tried to use LLMs to format data I was copy and pasting out of the NYSE PDF spec (which was obviously never designed or intended for such copy and pasting action). Again this produced some good looking results:

Not sure what that long long type is, maybe something experimental in JDK 23 to do with arenas ;-)

The table still wasn't quite what I wanted, but part of that is the input data. Here's another attempt where I asked for some column filtering:

Alternative approach

The other approach I tried was to break down the task further and use LLMs to help me write utility/helper code to take values out of a bytebuffer and a byte[]. This was a bit more successfull in terms of producing something useful that could be used as part of a code generation pipeline. Here's an example workflow with prompts:

We need to encode and decode a lot of messages.

Each message will have a number of fields.

For each message we know the field offset within a

bytebuffer and how many bytes each field takes up.

Write a helper methods that will let us get java p

rimitves from an offset in a bytebuffer.

public class ByteBufferHelper {

public static int readInt(byte[] buffer, int offset) {

int result = 0;

for (int i = 0; i < 4; i++) {

result |= ((buffer[offset + i] & 0xff) << (24 - i * 8));

}

return result;

}

public static long readLong(byte[] buffer, int offset) {

long result = 0;

for (int i = 0; i < 8; i++) {

result |= ((buffer[offset + i] & 0xff) << (56 - i * 8));

}

return result;

}

write helper methods so we can put java primitives

into the byte array at a specified offset

public class ByteBufferHelper {

public static void writeInt(byte[] buffer, int value, int offset) {

for (int i = 0; i < 4; i++) {

buffer[offset + i] = (byte) ((value >> (24 - i * 8)) & 0xff);

}

}

public static void writeLong(byte[] buffer, long value, int offset) {

for (int i = 0; i < 8; i++) {

buffer[offset + i] = (byte) ((value >> (56 - i * 8)) & 0xff);

}

}

re-write the ByteBufferHelper to use a ByteBuffer object instead of a byte[]

public class ByteBufferHelper {

public static int readInt(ByteBuffer buffer, int offset) {

return buffer.getInt(offset);

}

public static long readLong(ByteBuffer buffer, int offset) {

return buffer.getLong(offset);

}

Write unit tests for the ByteBufferHelper ensuring 100% coverage

import org.junit.Test;

import static org.junit.Assert.*;

import java.nio.ByteBuffer;

public class ByteBufferHelperTest {

@Test

public void testReadInt() {

// Create a byte buffer with an integer at offset 0

ByteBuffer buffer = ByteBuffer.allocate(4);

buffer.putInt(0, 123);

// Read the integer from the buffer

assertEquals(123, ByteBufferHelper.readInt(buffer, 0));

}

@Test

public void testReadLong() {

// Create a byte buffer with an long at offset 0

ByteBuffer buffer = ByteBuffer.allocate(8);

buffer.putLong(0, 123456789L);

// Read the long from the buffer

assertEquals(123456789L, ByteBufferHelper.readLong(buffer, 0));

}

Do you remember the older version you wrote which uses byte[] ?

I remember now that I had written an earlier version of the ByteBufferHelper class that used

a byte[] instead of a ByteBuffer. That was a simpler implementation, but it still required

manual handling of byte ordering and conversion between primitive types and bytes.

for that older version, write unit tests and ensure 100% coverage

public class ByteBufferHelperTest {

@Test

public void testReadInt() {

// Create a byte array with an integer at offset 0

byte[] buffer = new byte[4];

buffer[0] = (byte) ((123 >> 24) & 0xFF);

buffer[1] = (byte) ((123 >> 16) & 0xFF);

buffer[2] = (byte) ((123 >> 8) & 0xFF);

buffer[3] = (byte) (123 & 0xFF);

// Read the integer from the buffer

assertEquals(123, ByteBufferHelper.readInt(buffer, 0));

}

@Test

public void testReadLong() {

// Create a byte array with an long at offset 0

byte[] buffer = new byte[8];

buffer[0] = (byte) ((123456789L >> 56) & 0xFF);

buffer[1] = (byte) ((123456789L >> 48) & 0xFF);

buffer[2] = (byte) ((123456789L >> 40) & 0xFF);

buffer[3] = (byte) ((123456789L >> 32) & 0xFF);

It was quite difficult to coax the kind of output I wanted and in places the code is still incorrect e.g. decoding and encoding of floating point numbers. With a little work I am sure I could fix this and turn it into a one-shot process, but that may not add any value for me or anyone else.

Stay Tuned

In the next post on LLMs I'll be summarising what I've learnt from this process and where I'll be taking things next.