GKE/K8s Production

Notes I took whilst doing the "Architecting with Google Kubernetes Engine: Production" course. Covers access control, security, logging and managed storage.

Access Control and Security in K8s and GKE

User accounts - human users

k8s service accounts - creds for applications in the cluster

User accounts defined through a cloud identity domain for fine grained control. There is also IAM to link permissions to users and groups.

For service accounts, we have Google Cloud Workload Identity to bridge the gap between k8s service accounts and IAM service accounts. Auth pods by keeping service account creds outside the pod.

A pod gets the service account creds from the GKE metadata server, which runs on each node and exposes a subset of the Compute Engine metadata server endpoints. Simplifies pod auth.

IAM - for google cloud resources outside your cluster

K8S RBAC - access within the cluster, namespace level

Synchronized approach with both IAM and RBAC. IAM controls who can modify the cluster config. K8S RBAC controls who can interact with k8s objects within the cluster.

Least privilege - minimum set of permissions to do the job

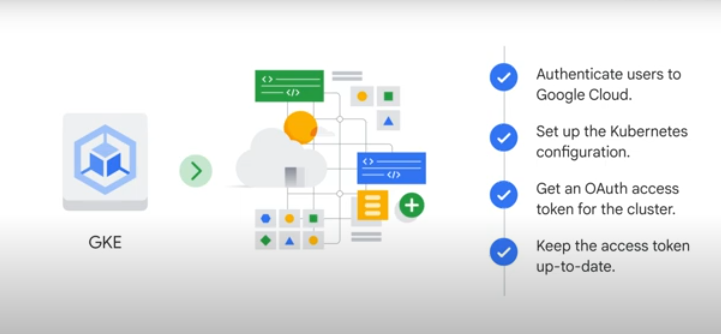

GKE Auth will manage end user auth for you:

K8S RBAC

Provide control over individual k8s resources.

Subjects - users, groups, service accounts

Verbs - the actions allowed for the resource

Resources - the k8s resources like pods, pvs, deployments

Roles - connect API resources and verbs

Role bindings - connect roles to subjects

- Create roles with permissions in the right namespace

- Create role bindings for the roles created

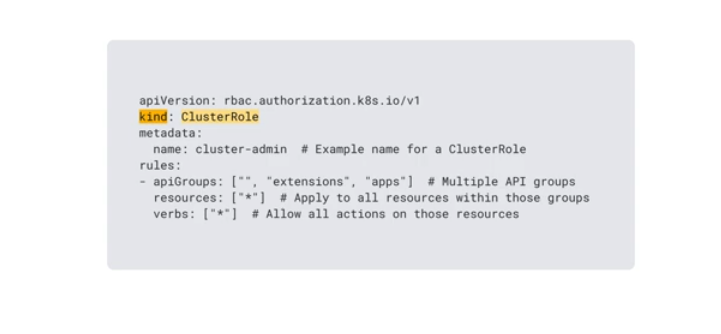

Cluster Roles

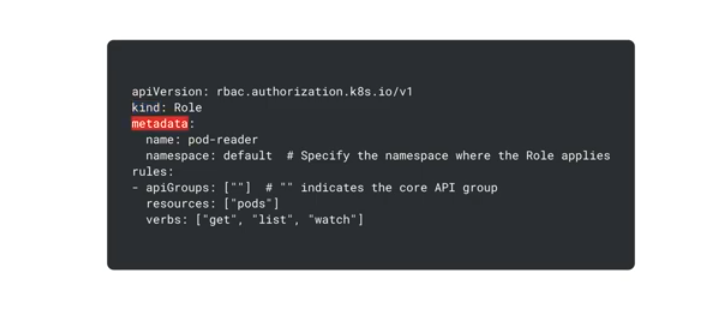

A manifest defining RBAC role - rules are permissions granted by the role. Single namespace for a role.

Rules - the permissions

Resources - which resources the role can access

Verbs - permitted actions
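A minimal sketch of the kind of manifest described above. The role name `pod-reader` and the namespace `demo` are assumptions for illustration, not names from the course. The manifest is written to a file and the apply command is only printed, so nothing touches a cluster until you run it yourself.

```shell
# Write a namespaced Role manifest; the names are illustrative assumptions.
cat > pod-reader-role.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: demo        # a Role applies to a single namespace
  name: pod-reader
rules:
- apiGroups: [""]        # "" is the core API group, where pods live
  resources: ["pods"]    # which resources the role can access
  verbs: ["get", "list", "watch"]   # permitted actions
EOF

# Printed, not executed: remove the echo to apply against a real cluster.
echo kubectl apply -f pod-reader-role.yaml
```

Read-only verbs keep this in line with least privilege; write verbs such as create or delete would be added only if the job needs them.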

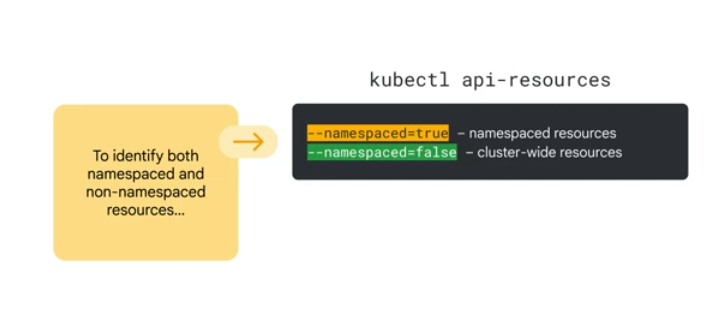

RBAC Roles apply at the namespace level. RBAC ClusterRoles apply at the cluster level.

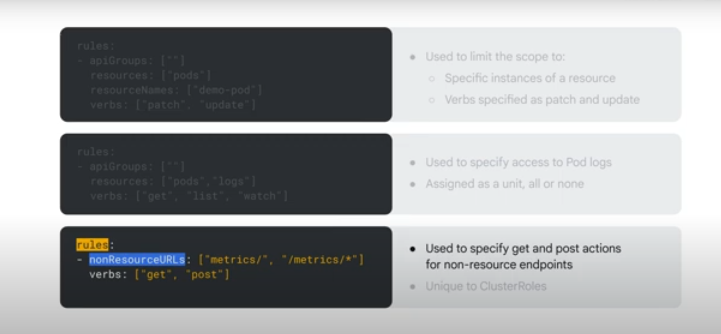

Non-resource endpoints can also be specified in rules:

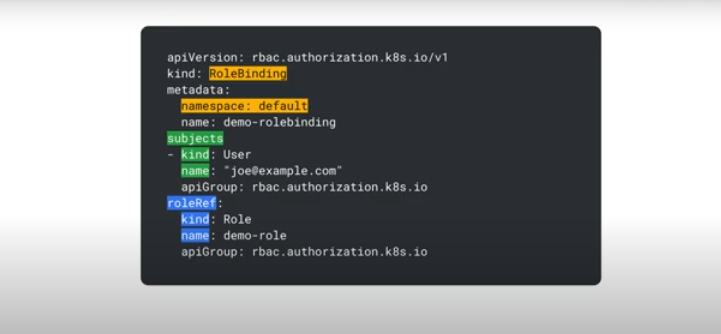

Cluster Role Bindings

Can specify the subject kind as users or groups.

To assign a role to users or groups, list them under subjects in the binding and point the binding's roleRef at the role being granted.
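A sketch of a cluster role binding, assuming a hypothetical group `dev-team@example.com` and the built-in `view` ClusterRole. It shows where the subject kind and the roleRef sit in the manifest; the apply command is printed rather than executed.

```shell
# ClusterRoleBinding that grants the built-in "view" ClusterRole to a group.
# The group name and binding name are illustrative assumptions.
cat > dev-team-view-binding.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dev-team-view
subjects:
- kind: Group                       # could also be User or ServiceAccount
  name: dev-team@example.com
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole                 # the role being granted
  name: view
  apiGroup: rbac.authorization.k8s.io
EOF

# Printed, not executed: remove the echo to apply against a real cluster.
echo kubectl apply -f dev-team-view-binding.yaml
```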

Namespaced Role bindings

Cluster level resources should be managed with ClusterRoles and NameSpaced resources with Roles.

For multi-namespace resources you need cluster roles.

Workload Identity

This is a GKE service which simplifies how containerized apps access google cloud services. Containers directly authenticate using their own dedicated credentials.

When you enable it on a cluster, the cluster uses a workload identity pool that GKE creates automatically, named in the format "PROJECT_ID.svc.id.goog". Pods on node pools with workload identity enabled can then use the IAM service account bound to their k8s service account to auth to google cloud services. This can be via command line or using the Application Default Credentials lib.

Autopilot clusters enable workload identity by default.

gcloud container clusters create/update to enable workload identity on a new or existing cluster

Migration to workload identity

- Create a new node pool

- Enable workload identity

- Configure the app to auth to google using workload identity

- Assign a k8s service account to the app, configure that service account to act as an IAM service account
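The enable step and the first migration steps can be sketched as below. The project, cluster, zone and node pool names are assumptions for illustration. The commands are printed rather than executed so the sketch is safe to paste; drop the echo to run them.

```shell
# Illustrative values; replace with your own project, cluster and zone.
PROJECT_ID="my-project"
CLUSTER="my-cluster"
ZONE="us-central1-a"
NEW_POOL="wi-pool"

# The workload identity pool name always follows this format.
WORKLOAD_POOL="${PROJECT_ID}.svc.id.goog"

# Enable workload identity on an existing cluster.
echo gcloud container clusters update "$CLUSTER" --zone "$ZONE" \
  --workload-pool="$WORKLOAD_POOL"

# Create a new node pool whose pods talk to the GKE metadata server.
echo gcloud container node-pools create "$NEW_POOL" --cluster "$CLUSTER" \
  --zone "$ZONE" --workload-metadata=GKE_METADATA
```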

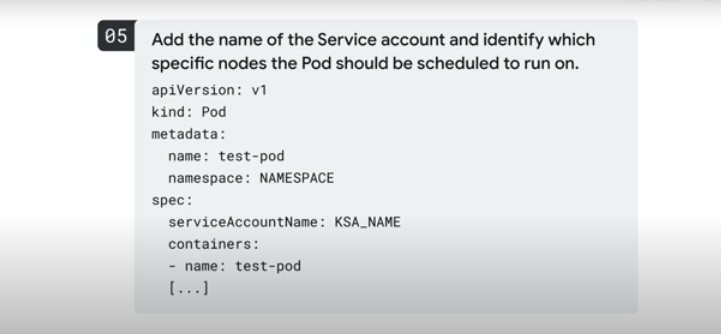

Configuring an app to use workload identity

- Create a new namespace for the service account

kubectl create namespace NAMESPACE

- Create a service account for the app:

kubectl create serviceaccount K8SA_NAME --namespace NAMESPACE

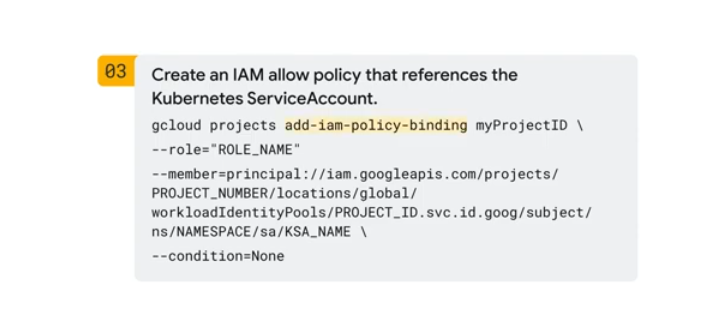

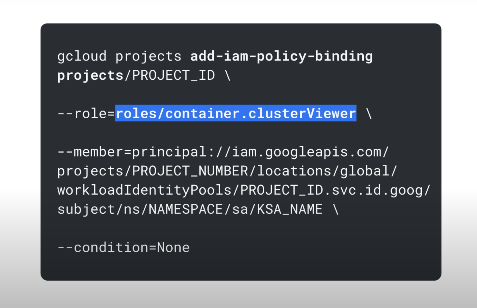

- Create an IAM allow policy referencing the service account

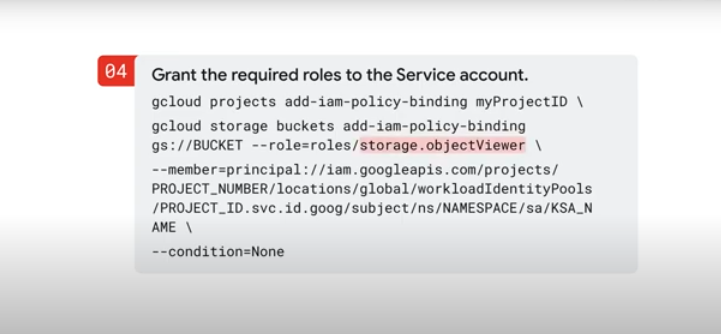

- Grant roles to the service account:

- Add the service account to the pod manifest:

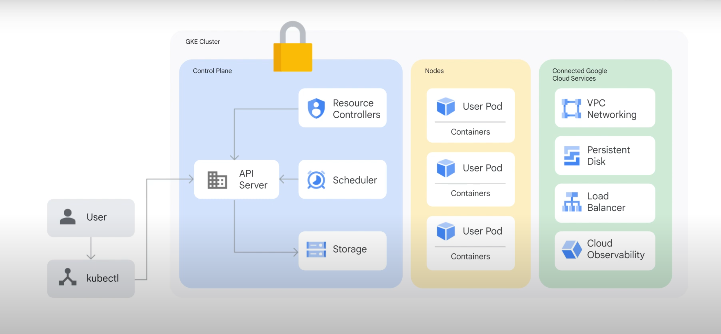
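A sketch of the last two steps, granting a role and referencing the service account from a pod. Everything named here is an assumption for illustration: the IAM service account `app-gsa`, the k8s service account `app-ksa`, the `demo` namespace, the `roles/storage.objectViewer` role and the `google/cloud-sdk:slim` test image.

```shell
# Illustrative values; none of these names come from the course.
PROJECT_ID="my-project"
NAMESPACE="demo"
K8SA_NAME="app-ksa"
GSA_NAME="app-gsa"
GSA_EMAIL="${GSA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"

# Grant the IAM service account only the role the app needs (printed, not run).
echo gcloud projects add-iam-policy-binding "$PROJECT_ID" \
  --member "serviceAccount:${GSA_EMAIL}" \
  --role "roles/storage.objectViewer"

# Pod manifest that runs as the k8s service account.
cat > wi-pod.yaml <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: wi-demo
  namespace: ${NAMESPACE}
spec:
  serviceAccountName: ${K8SA_NAME}
  nodeSelector:
    iam.gke.io/gke-metadata-server-enabled: "true"   # schedule onto a workload identity node pool
  containers:
  - name: app
    image: google/cloud-sdk:slim
    command: ["sleep", "infinity"]
EOF

echo kubectl apply -f wi-pod.yaml
```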

Control Plane Security

Each cluster has a dedicated root CA managed by google internally.

Control plane to nodes communication uses this CA.

etcd is isolated from other databases with a separate CA per cluster.

kubelets - primary agents on nodes. Uses certs from the cluster CA for comms with the API server.

Newly provisioned nodes have a shared secret which allows kubelets to initiate certificate signing requests to the cluster's root CA.

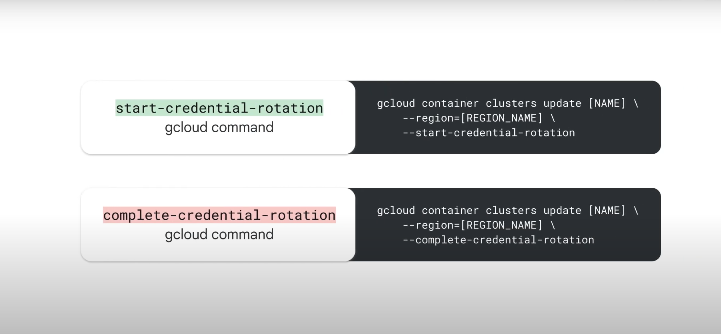

Credential Rotation

Credential rotation - rotate creds regularly.

- Create a new IP for the cluster control plane

- Issue new certs for the control plane with the new IP

- Update nodes to use the new IP and creds

- Update external API clients

- Remove old IP and credentials

The complete command tells the control plane to only serve with the new credentials.

During rotation the API server can have downtime.

Metadata concealment.

Pod Security

PSS - pod security standards. Predefined security configs for pods. 3 predefined levels:

- privileged (unrestricted)

- baseline (balance between security and flexibility)

- restricted (most stringent security).

PSA - pod security admission. A built-in Kubernetes admission controller, available on GKE. It intercepts pod creation requests and evaluates the pod's security context against the policy for the namespace. If the pod is not compliant with an enforced policy, it is rejected and won't come up. Enforces that all pods follow policies.
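A sketch of how the standards attach to a namespace: PSA reads labels on the namespace, one per mode (enforce, warn, audit). The namespace name `team-a` and the choice of levels are assumptions for illustration.

```shell
# Namespace with one pod security label per PSA mode.
cat > psa-namespace.yaml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: team-a
  labels:
    pod-security.kubernetes.io/enforce: baseline    # reject pods that violate baseline
    pod-security.kubernetes.io/warn: restricted     # warn the user on restricted violations
    pod-security.kubernetes.io/audit: restricted    # record restricted violations in audit logs
EOF

# Printed, not executed: remove the echo to apply against a real cluster.
echo kubectl apply -f psa-namespace.yaml
```

Enforcing a looser level while warning on a stricter one is a way to see what would break before tightening the policy.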

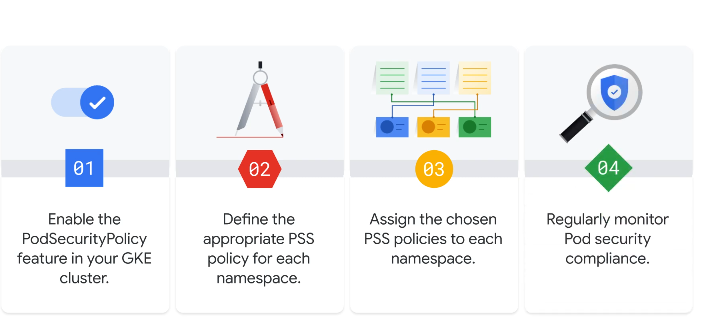

Implementing PSS and PSA

GKE Logs

Logs for:

- System

- Workloads

- API Server

- Scheduler

- Controller manager

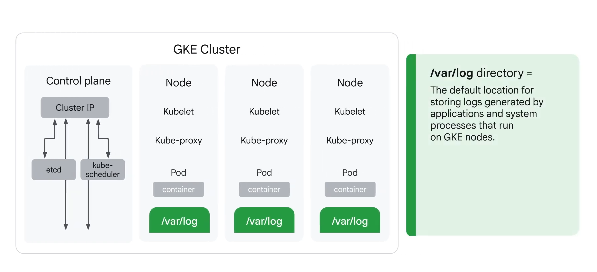

Default location for logs generated by applications on the node: /var/log/. These are ephemeral and include the logs for kubelets and kube-proxy.

kubectl logs [PODNAME] to see logs from a pod

Cloud logging - logs land in the _Default bucket, which keeps them for 30 days by default. The first 50 GiB of ingestion per project per month is free.

Admin logs are held in _Required for 400 days - includes admin activity audit logs, system event logs and login audit.

Up to 10 year retention with user defined buckets.
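A sketch of creating a user defined bucket with the maximum retention. The bucket name `long-term-audit` is an assumption; the command is printed rather than executed.

```shell
# Illustrative bucket name; retention is expressed in days.
BUCKET_ID="long-term-audit"
RETENTION_DAYS=$((10 * 365))   # roughly 10 years

echo gcloud logging buckets create "$BUCKET_ID" \
  --location=global --retention-days="$RETENTION_DAYS"
```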

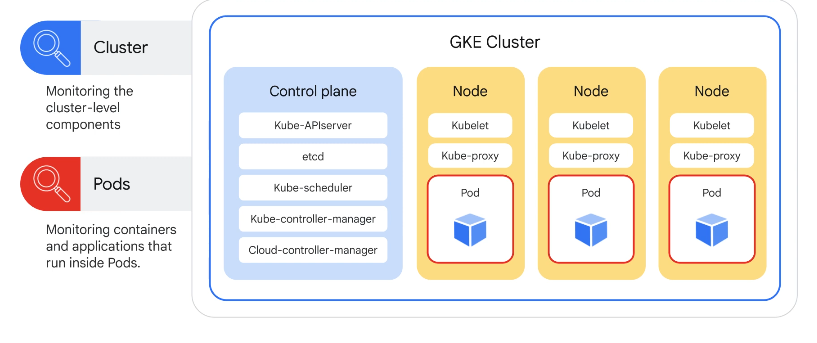

Monitoring

Cluster vs application monitoring:

K8s abstraction uses labels to organise resources and monitor systems and sub-systems by combining labels. Labels are keys and values e.g. environment=production

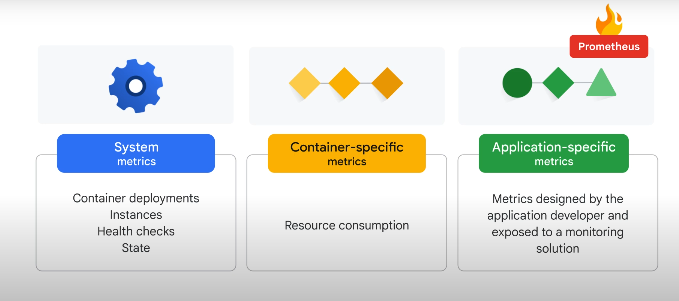

Logging based custom metrics.
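A sketch of a log-based metric that counts container log entries at ERROR or above. The metric name and the filter are assumptions for illustration; the command is printed rather than executed.

```shell
# Illustrative metric name and filter.
METRIC_NAME="container_error_count"
LOG_FILTER='resource.type="k8s_container" AND severity>=ERROR'

# Printed as a single line so the quoting is visible before you run it.
printf '%s\n' "gcloud logging metrics create ${METRIC_NAME} --description='Container log entries at ERROR or above' --log-filter='${LOG_FILTER}'"
```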

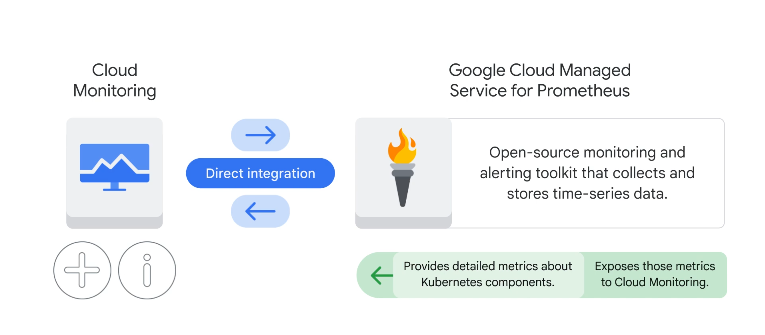

Cloud monitoring and prometheus integration:

Kubectl logs

--since=3h from the last 3 hours

--previous [pod name] - logs before a crash

Logs are not persistent on pod eviction unless you have cluster level logging.

Node level logging uses rotation with archiving - once per day or max 100mb log. Keeps last 5 logs.

If a container restarts, kubelet will keep 1 terminated container with its logs.

Inspecting logs with cloud logging and logging agent

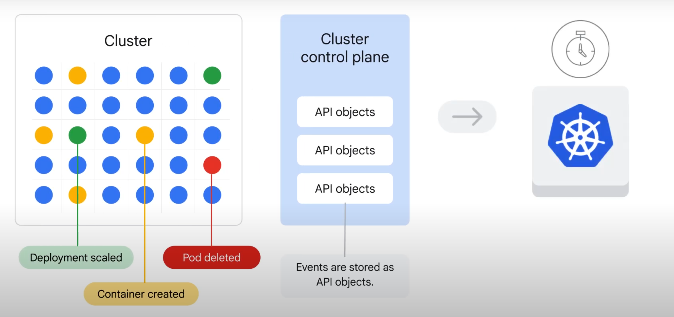

Cluster events are stored as API objects on the cluster control plane temporarily.

GKE pushes the events from the control plane into the cloud logging product.

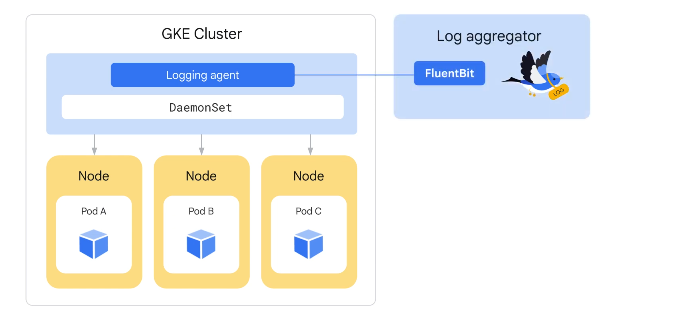

Logging agents pre-installed on nodes and configured to export to cloud logging. Can write to cloud logging via custom API also.

The log aggregator runs using a DaemonSet controller.

Managed Storage Services with GKE

You are responsible for app lifecycle and resilient and reliable service.

Google offers managed storage services via API.

Apps can use those APIs via workload identity.

IAM - defined within google cloud for cloud API auth

You can bind an IAM service account to a k8s service account:

The IAM role should match the app's permissions.

Service account per app with specific IAM roles and permissions - protection against breaches, minimum required permissions, can disable specific service accounts.

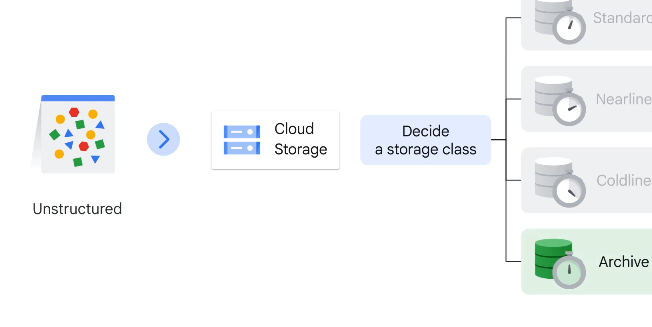

Using Cloud Storage

Storage for apps, structured and unstructured files. Not a file system.

- Standard storage - for hot data, stored for short periods

- Nearline - infrequently accessed (once per month or less)

- Coldline - low cost, once every 90 days

- Archive - lowest cost, once a year access, for DR and backup

Server side encryption before storage. Integrates with pub sub service to notify applications. Can also use versioning for storage.
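A sketch that turns the access-frequency guidance above into a helper. The function name `storage_class_for_days`, the thresholds as hard cut-offs, and the bucket name are assumptions for illustration; real choices also depend on cost and minimum storage duration.

```shell
# Map the expected number of days between reads to a Cloud Storage class.
storage_class_for_days() {
  local days="$1"
  if   [ "$days" -ge 365 ]; then echo "ARCHIVE"    # about once a year
  elif [ "$days" -ge 90 ];  then echo "COLDLINE"   # about once every 90 days
  elif [ "$days" -ge 30 ];  then echo "NEARLINE"   # once per month or less
  else                           echo "STANDARD"   # hot data
  fi
}

CLASS="$(storage_class_for_days 120)"

# Printed, not executed: remove the echo to create the bucket.
echo gcloud storage buckets create gs://my-example-bucket \
  --location=us-central1 --default-storage-class="$CLASS"
```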

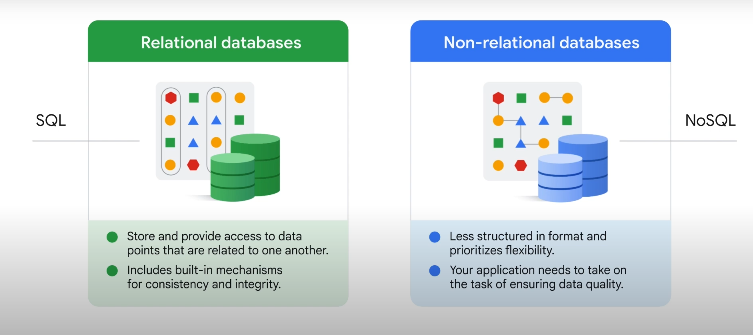

Using cloud databases

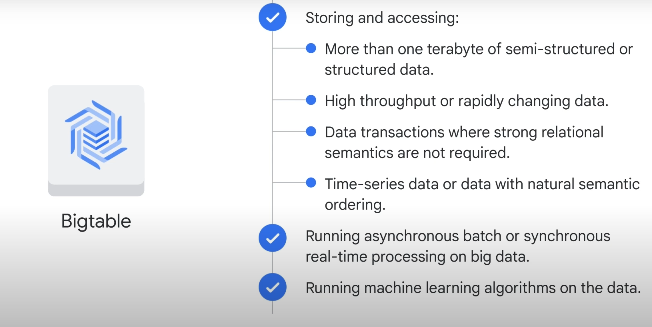

Bigtable

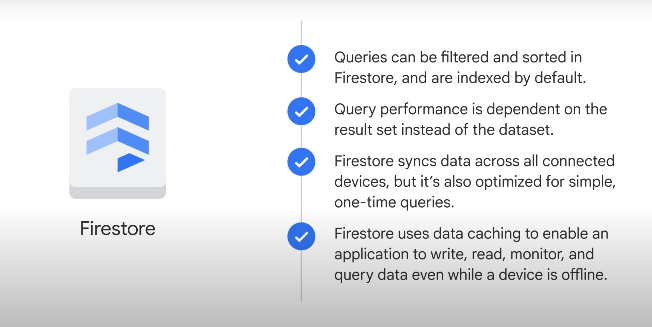

Firestore

Can handle queries and return the document result. Syncs data across all connected devices.

Spanner

Managed SQL.

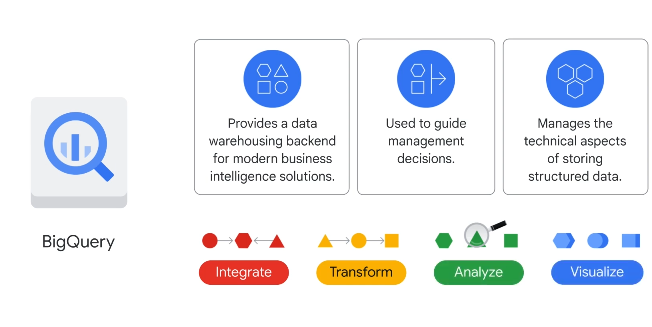

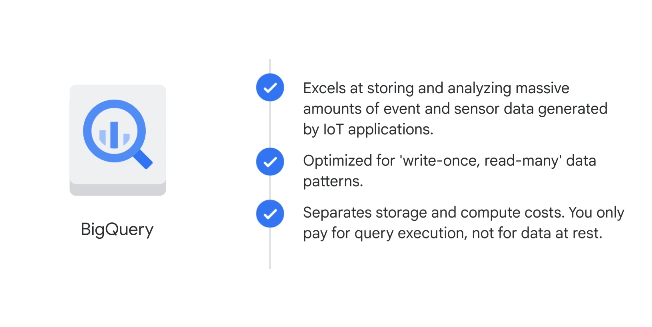

BigQuery

Managed data warehousing.

Cloud sql

Managed MySQL, PostgreSQL and MS SQL Server

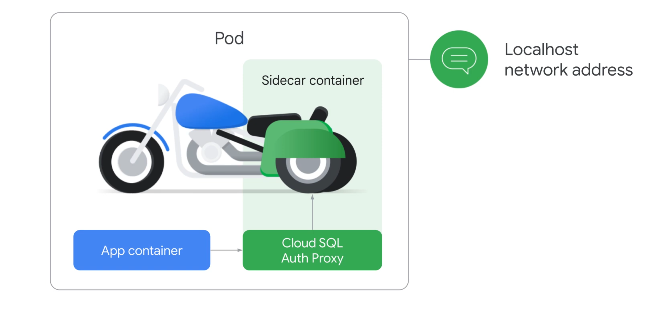

Cloud SQL Auth Proxy - streamline and secure connections to your cloud dbs, sits between your app and database. In-transit encryption. All pods can access the DB.

Needs the cloud sql api and sqladmin api.

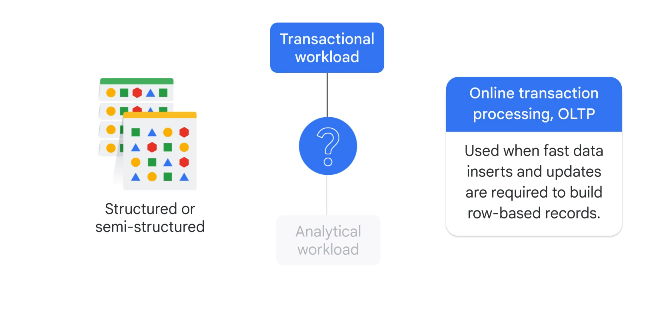

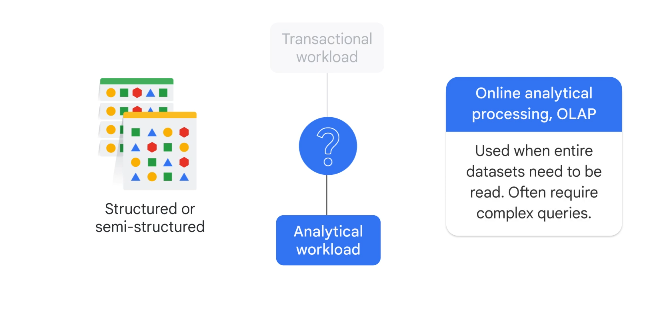

Comparing Storage Options

Depends on data type and business need.

Structured, transactional

From OLTP (online transaction processing), fast inserts and updates required for row based records.

If you need SQL: Cloud SQL for regional scalability, or Spanner for global scalability

If you need NoSQL: Firestore

Structured, Analytics

From OLAP (online analytical processing), complex queries.

If you need SQL: BigQuery

If you need NoSQL: Bigtable
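The decision rules above can be written down as a small lookup. The function name `pick_store` and its argument values are hypothetical; it only encodes the rules from these notes and ignores other factors such as cost or latency.

```shell
# pick_store WORKLOAD MODEL [SCALE]
#   WORKLOAD: transactional | analytics
#   MODEL:    sql | nosql
#   SCALE:    regional (default) | global
pick_store() {
  local workload="$1" model="$2" scale="${3:-regional}"
  case "${workload}:${model}" in
    transactional:sql)
      if [ "$scale" = "global" ]; then echo "Spanner"; else echo "Cloud SQL"; fi ;;
    transactional:nosql) echo "Firestore" ;;
    analytics:sql)       echo "BigQuery" ;;
    analytics:nosql)     echo "Bigtable" ;;
    *) echo "unknown"; return 1 ;;
  esac
}

pick_store transactional sql global   # prints Spanner
pick_store analytics nosql            # prints Bigtable
```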

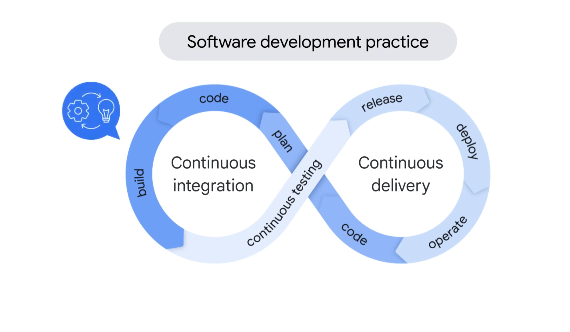

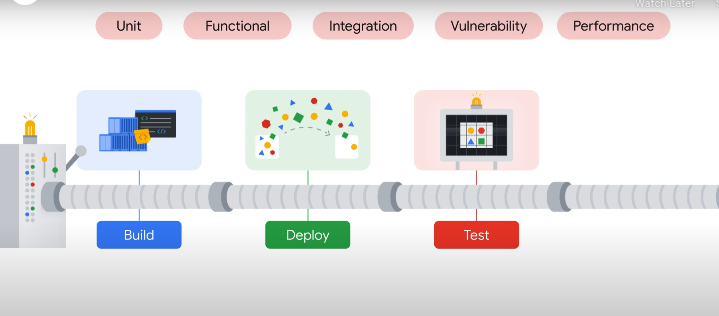

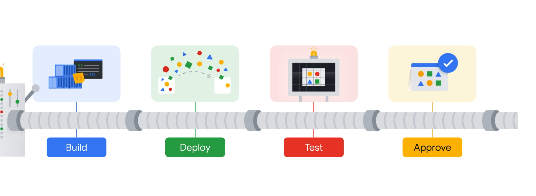

CI/CD

Build - checkout the code on a specific version, artifacts built and images built

Deploy - deploy artifacts to the test env

Approve stage - developers decide if a pipeline should proceed to production.

Manual pipelines - no CI/CD

Packaged tool - fixed set of built in automations

Dev-centric - centers the pipeline around the code repository, GitOps, keep code and config in source repo which gets used as the golden source.

Ops-centric - automating operational tasks like infra, monitoring, incident response.

Spinnaker - tool for implementing ops centric CI/CD

CI/CD Tools Available in Google Cloud

Jenkins - continuous integration

Spinnaker - continuous delivery

CircleCI - continuous integration

GitLab CI - continuous integration

Drone - container first CI/CD

Artifact registry - store any kind of artifact including code, binaries, images. Built on google cloud storage. Supports AAR, APK, JAR, POM and ZIP.

Cloud Build - code to deployable. Executes builds on Google Cloud infra or on-prem hardware. Produces container images and can run automatically from triggers on events like changes in the code. A common volume called /workspace is mounted into every step, which is where the source gets downloaded to. Each step of the build runs in its own independent container.
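A sketch of a build config with a single Docker step. The Artifact Registry path (region `us-central1`, repo `my-repo`, image `my-app`) is an assumption for illustration; `$PROJECT_ID` and `$BUILD_ID` are default Cloud Build substitutions, which is why the heredoc is quoted to keep them literal.

```shell
# Write a minimal cloudbuild.yaml; the image path is an illustrative assumption.
cat > cloudbuild.yaml <<'EOF'
steps:
# Each step runs in its own container; the source is available in /workspace.
- name: 'gcr.io/cloud-builders/docker'
  args: ['build', '-t', 'us-central1-docker.pkg.dev/$PROJECT_ID/my-repo/my-app:$BUILD_ID', '.']
# Images listed here are pushed once all steps succeed.
images:
- 'us-central1-docker.pkg.dev/$PROJECT_ID/my-repo/my-app:$BUILD_ID'
EOF

# Printed, not executed: remove the echo to submit the build.
echo gcloud builds submit --config cloudbuild.yaml .
```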

Cloud deploy - automate deployment of applications to GKE. Handles the CD stage, connecting build artifacts to the deployment environment. Supports blue-green, rolling updates and canary.

Best Practice

- Use a managed artifact repo

- Use a build service

- Use a deployment service

- Automate your pipeline

- Monitor your pipeline

Lab Notes

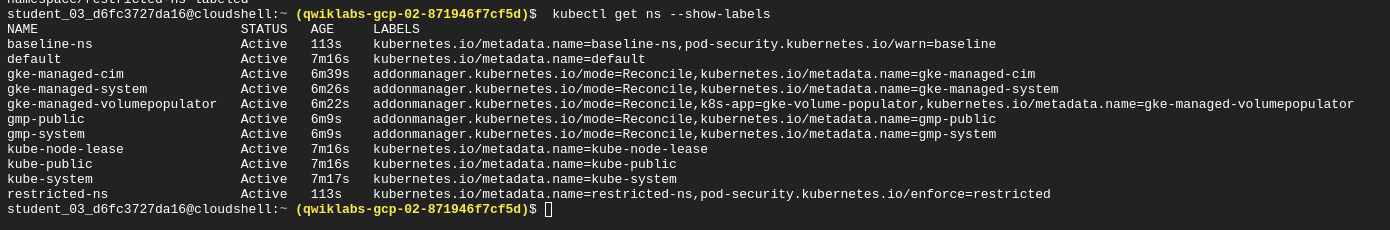

Applying pod security standards

- Create new namespaces

kubectl create ns baseline-ns

kubectl create ns restricted-ns

- Use labels to apply security policies.

Apply security standards to pods, baseline gets applied to the baseline-ns namespace and restricted gets applied to the restricted-ns namespace

kubectl label --overwrite ns baseline-ns pod-security.kubernetes.io/warn=baseline

kubectl label --overwrite ns restricted-ns pod-security.kubernetes.io/enforce=restricted

- View applied labels

kubectl get ns --show-labels

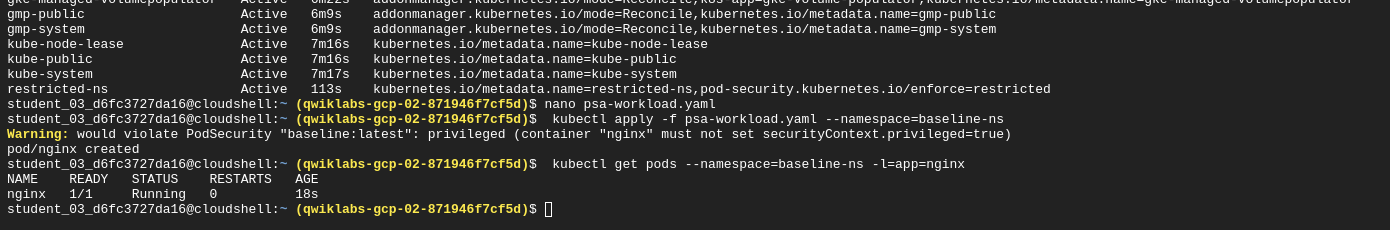

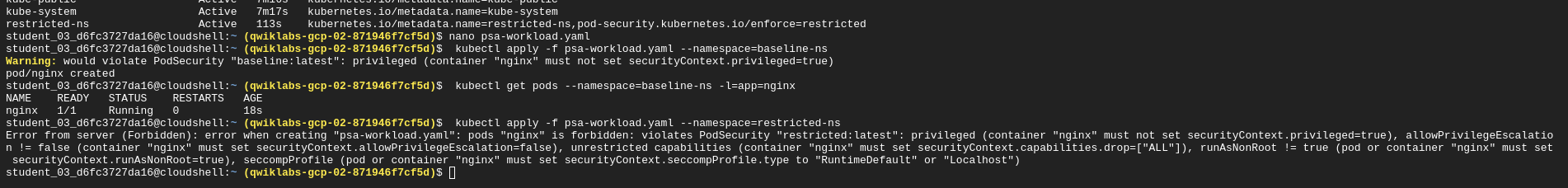

Testing applied policies

Now we'll check if we can deploy a workload that violates the PodSecurity admission controller's policy.

Create psa-workload.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx
    securityContext:
      privileged: true

This is an nginx pod resource with a securityContext of privileged.

We apply it to the baseline-ns namespace:

kubectl apply -f psa-workload.yaml --namespace=baseline-ns

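We can see the pod was still created: the namespace only carries the warn label, so the violation produces a warning rather than a rejection.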

We now try to apply it to the restricted-ns namespace

kubectl apply -f psa-workload.yaml --namespace=restricted-ns

Viewing Policy Violations

In logs explorer use a query:

resource.type="k8s_cluster"

protoPayload.response.reason="Forbidden"

protoPayload.resourceName="core/v1/namespaces/restricted-ns/pods/nginx"

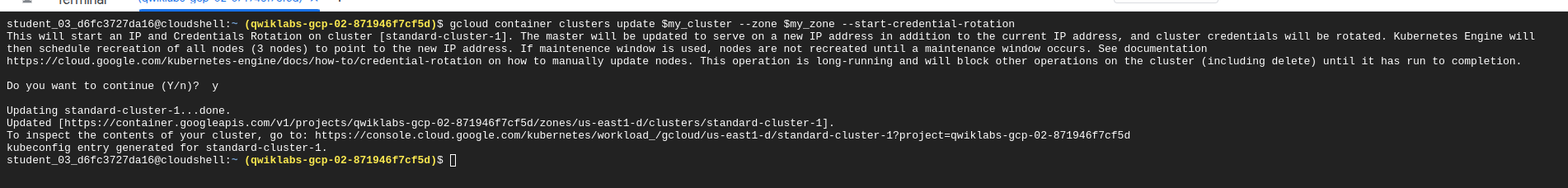

IP Address and Credential Rotation

Start the IP and credential rotation process:

gcloud container clusters update $my_cluster --zone $my_zone --start-credential-rotation

The cluster master now temporarily serves the new IP address in addition to the original address.

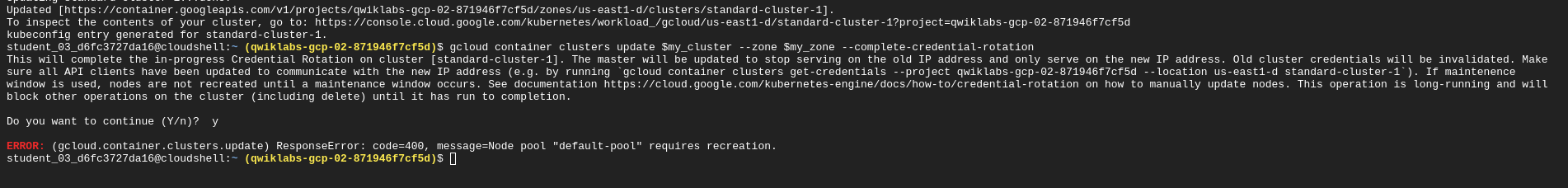

Confirm and complete the process:

gcloud container clusters update $my_cluster --zone $my_zone --complete-credential-rotation

This finalizes the rotation process and removes the original cluster IP address.

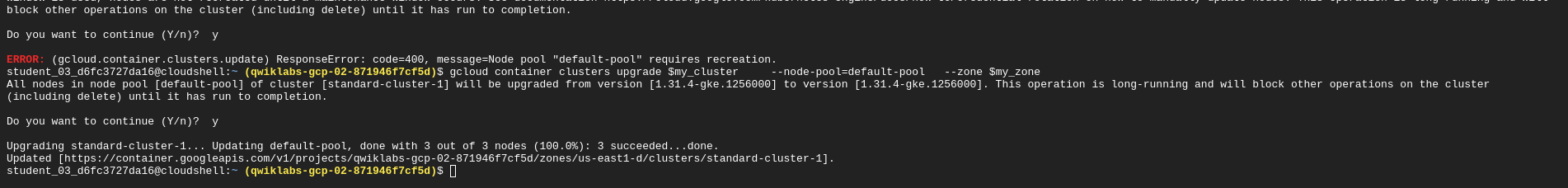

If this process fails, you may need to upgrade the cluster and try again:

gcloud container clusters upgrade $my_cluster --node-pool=default-pool --zone $my_zone

Create a VPC-Native k8s cluster with native k8s monitoring

gcloud container clusters create $my_cluster \
  --num-nodes 3 --enable-ip-alias --zone $my_zone \
  --logging=SYSTEM \
  --monitoring=SYSTEM

Create a cloud sql instance

gcloud sql instances create sql-instance --tier=db-n1-standard-2 --region=$my_region

Create a K8s Service account for the sql instance

kubectl create serviceaccount gkesqlsa

Bind a K8s Service Account to an IAM Service Account

gcloud iam service-accounts add-iam-policy-binding \
  --role="roles/iam.workloadIdentityUser" \
  --member="serviceAccount:$my_project.svc.id.goog[default/gkesqlsa]" \
  sql-access@$my_project.iam.gserviceaccount.com

Annotate the K8s Service Account with the Google Cloud Service Account

kubectl annotate serviceaccount \
  gkesqlsa \
  iam.gke.io/gcp-service-account=sql-access@$my_project.iam.gserviceaccount.com

Create Secrets

Create a Kubernetes Secret to provide the MySQL credentials. The Google credentials come from the workload identity binding above, so no service account key needs to be stored as a Secret.

kubectl create secret generic sql-credentials \
  --from-literal=username=sqluser \
  --from-literal=password=sqlpassword

Deploy the SQL Agent as a Sidecar

apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  selector:
    matchLabels:
      app: wordpress
  template:
    metadata:
      labels:
        app: wordpress
    spec:
      serviceAccountName: gkesqlsa
      containers:
        - name: web
          image: gcr.io/cloud-marketplace/google/wordpress:6.1
          #image: wordpress:5.9
          ports:
            - containerPort: 80
          env:
            - name: WORDPRESS_DB_HOST
              value: 127.0.0.1:3306
            # These secrets are required to start the pod.
            # [START cloudsql_secrets]
            - name: WORDPRESS_DB_USER
              valueFrom:
                secretKeyRef:
                  name: sql-credentials
                  key: username
            - name: WORDPRESS_DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: sql-credentials
                  key: password
            # [END cloudsql_secrets]
        # Change '<INSTANCE_CONNECTION_NAME>' here to include your Google Cloud
        # project, the region of your Cloud SQL instance and the name
        # of your Cloud SQL instance. The format is
        # $PROJECT:$REGION:$INSTANCE
        # [START proxy_container]
        - name: cloudsql-proxy
          image: gcr.io/cloud-sql-connectors/cloud-sql-proxy:2.8.0
          args:
            - "--structured-logs"
            - "--port=3306"
            - "<INSTANCE_CONNECTION_NAME>"
          securityContext:
            runAsNonRoot: true
---
apiVersion: "v1"
kind: "Service"
metadata:
  name: "wordpress-service"
  namespace: "default"
  labels:
    app: "wordpress"
spec:
  ports:
    - protocol: "TCP"
      port: 80
  selector:
    app: "wordpress"
  type: "LoadBalancer"
  loadBalancerIP: ""