LLM Workflows and Agents

Notes on workflows and agents from Anthropic's course on Claude. Covers the evaluator-optimizer pattern, parallelization, chaining, routing, and agents.

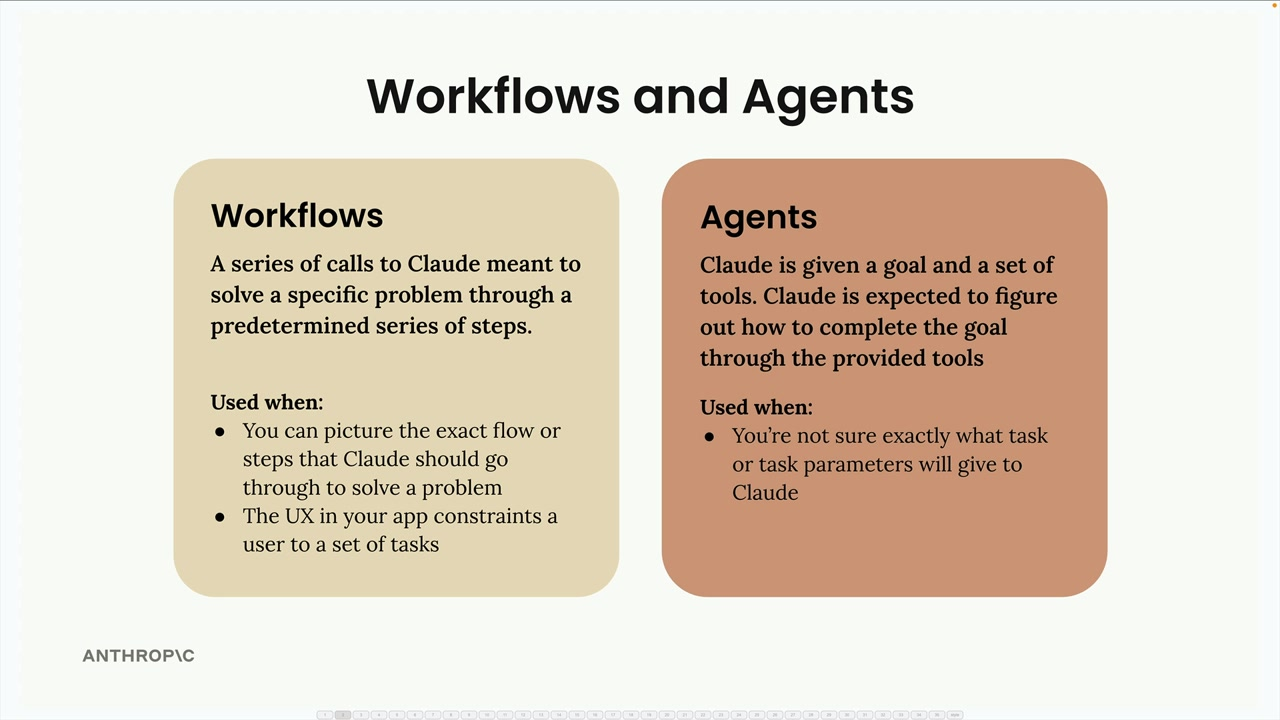

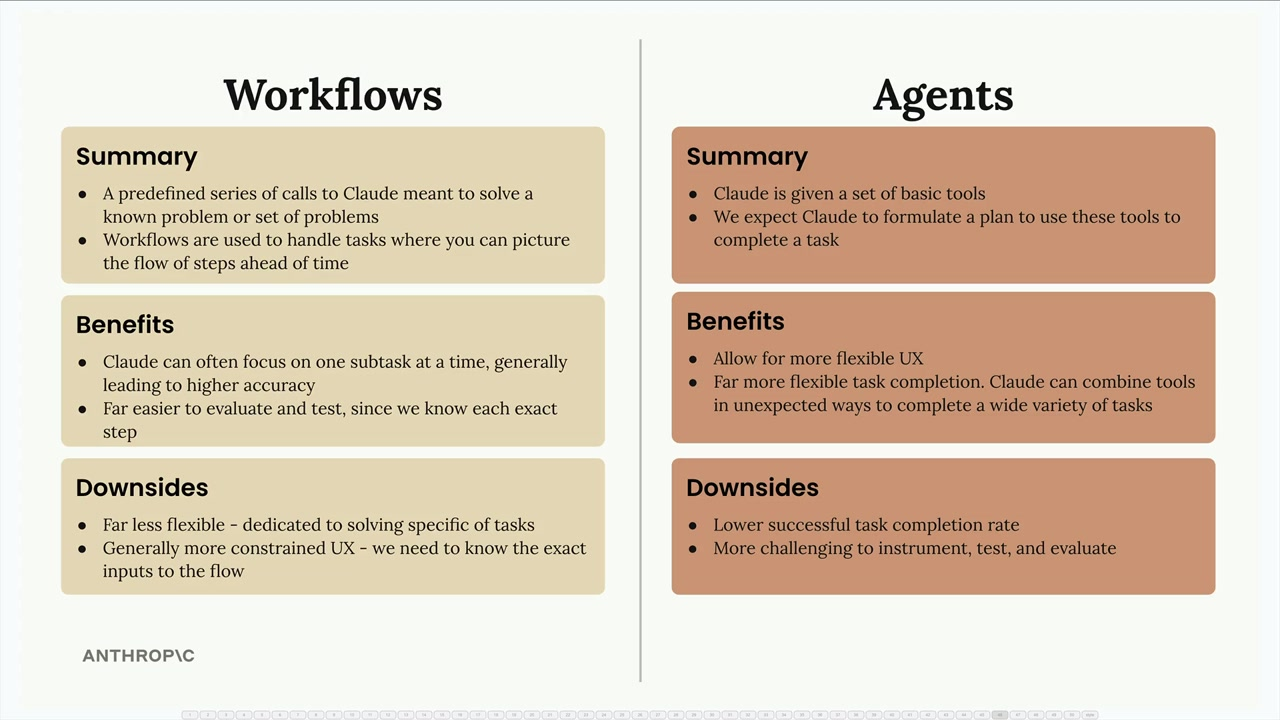

Workflows and Agents

- Workflows - a predetermined sequence of steps

- Agents - given a goal and a set of tools, decide the steps needed to reach the outcome

"The general recommendation is to always focus on implementing workflows where possible, and only resort to agents when they are truly required. Workflows provide the reliability and predictability that most production applications need, while agents offer flexibility for scenarios where the exact requirements can't be predetermined."

Evaluator-Optimizer Pattern

An iterative pattern in which a producer is paired with a grader in a feedback loop: if the grader rejects the output, its feedback goes back to the producer, which revises the output and tries again.
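The loop can be sketched in Python as follows. This is a minimal illustration, not the course's code: `evaluator_optimizer`, `Verdict`, and the two stubbed functions are hypothetical names, and the stubs stand in for real LLM calls so the example runs offline. The `max_rounds` cap is an added assumption that keeps a grader which never accepts from looping forever.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class Verdict:
    accepted: bool
    feedback: str = ""


def evaluator_optimizer(
    produce: Callable[[str, Optional[str]], str],
    grade: Callable[[str], Verdict],
    task: str,
    max_rounds: int = 3,
) -> Tuple[str, int]:
    """Run producer -> grader rounds until the grader accepts or rounds run out."""
    feedback: Optional[str] = None
    output = ""
    for round_no in range(1, max_rounds + 1):
        output = produce(task, feedback)  # producer sees the previous critique
        verdict = grade(output)           # grader judges the new output
        if verdict.accepted:
            return output, round_no
        feedback = verdict.feedback       # send the critique back to the producer
    return output, max_rounds             # give up with the last attempt


# Hypothetical stubs standing in for two LLM calls.
def fake_produce(task: str, feedback: Optional[str]) -> str:
    return task if feedback is None else f"{task} (revised: {feedback})"


def fake_grade(output: str) -> Verdict:
    if "revised" in output:
        return Verdict(accepted=True)
    return Verdict(accepted=False, feedback="add a concrete example")


result, rounds = evaluator_optimizer(fake_produce, fake_grade, "Explain chaining")
print(rounds, result)
```

In a real system `fake_produce` would send the task plus the grader's last critique to the model, and `fake_grade` would use a second prompt to accept or reject the output with reasons.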

Different workflow patterns can be used to create repeatable recipes for implementing features.

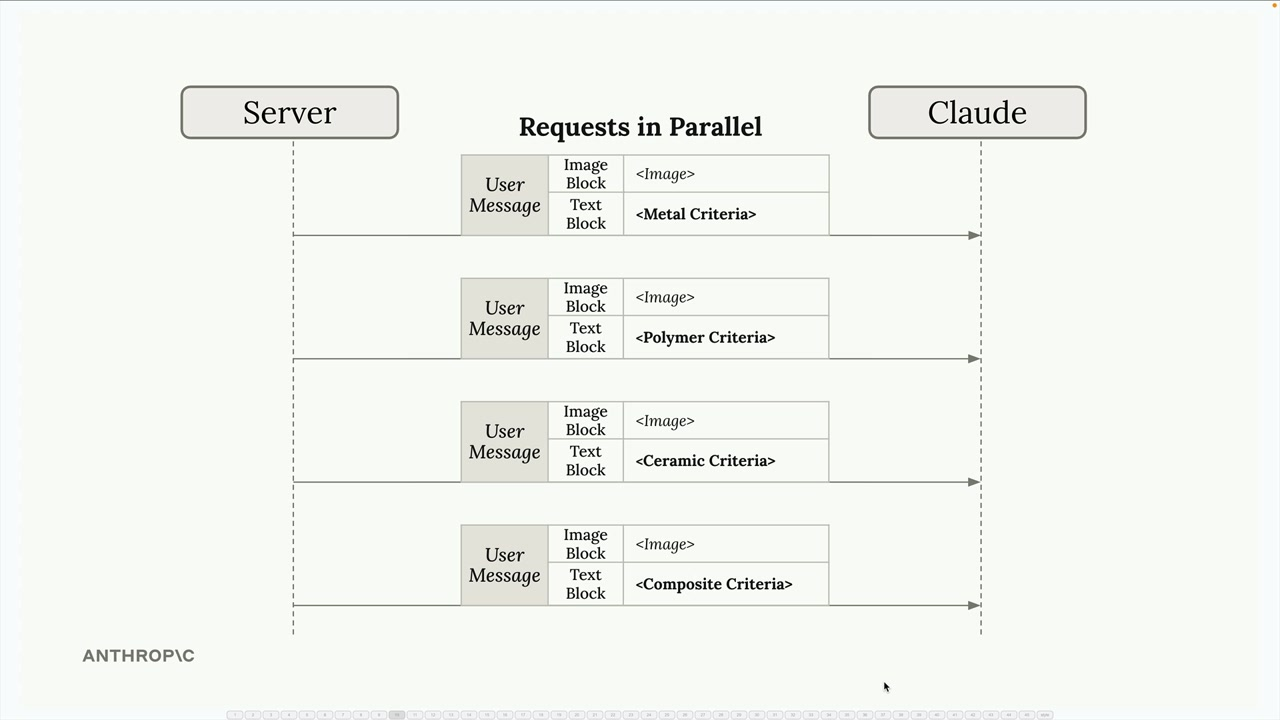

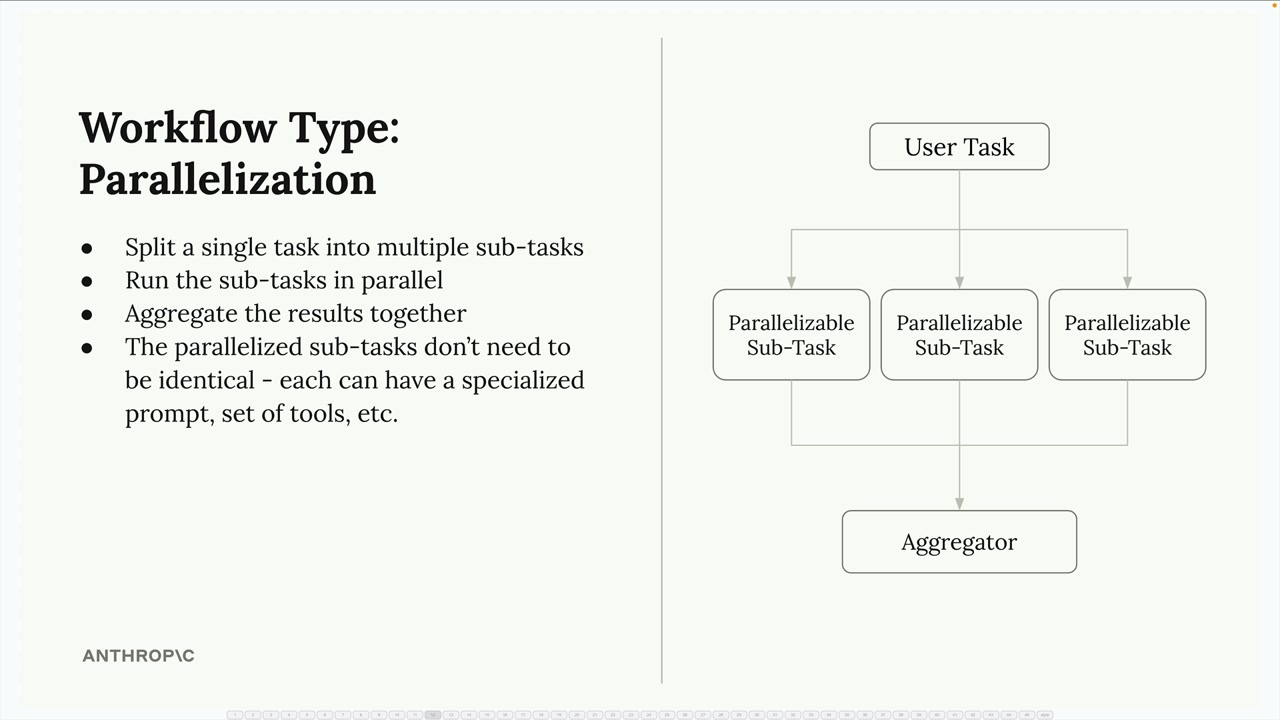

Parallelization Workflows

Split the task into multiple parallel requests, each focused on evaluating one particular criterion.

Each result is then fed into a final aggregation step, which can also be performed by the LLM.

This pattern suits cases where a complex decision can be broken down into independent evaluations, e.g. when weighing multiple criteria or comparing options. Each subtask should be independent and contribute a distinct piece of the final decision.
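A minimal sketch of the fan-out and aggregation steps, assuming each criterion can be scored independently. The scoring functions, the option fields (`price`, `speed`, `has_support`), and the summing aggregator are hypothetical; in practice each scorer would be its own LLM request, and threads are used here only because such requests are I/O-bound.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


# Hypothetical per-criterion evaluators. In practice each would be a separate
# LLM request with a prompt focused on one criterion; each returns a 0-10 score.
def score_cost(option: dict) -> int:
    return 10 - min(option["price"] // 100, 10)


def score_speed(option: dict) -> int:
    return option["speed"]


def score_support(option: dict) -> int:
    return 8 if option["has_support"] else 2


CRITERIA: Dict[str, Callable[[dict], int]] = {
    "cost": score_cost,
    "speed": score_speed,
    "support": score_support,
}


def evaluate_in_parallel(option: dict) -> Dict[str, int]:
    """Fan out one independent request per criterion, then collect the results."""
    with ThreadPoolExecutor(max_workers=len(CRITERIA)) as pool:
        futures = {name: pool.submit(fn, option) for name, fn in CRITERIA.items()}
        return {name: fut.result() for name, fut in futures.items()}


def aggregate(scores: Dict[str, Dict[str, int]]) -> str:
    """Aggregation step: a plain sum here; this could also be an LLM call."""
    return max(scores, key=lambda name: sum(scores[name].values()))


options = {
    "A": {"price": 300, "speed": 6, "has_support": True},
    "B": {"price": 900, "speed": 9, "has_support": False},
}
scores = {name: evaluate_in_parallel(opt) for name, opt in options.items()}
best = aggregate(scores)
```

Keeping each scorer ignorant of the others is what makes the requests safe to run in parallel; only the aggregation step sees all results.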

Benefits

- Focused attention - the model doesn't have to balance competing considerations simultaneously

- Easier optimization - each subtask can have its own prompt, optimized for that task

- Scalability - additional criteria can be handled by adding more parallel requests

- Reliability - reducing the cognitive load on the model gives more consistent and reliable results

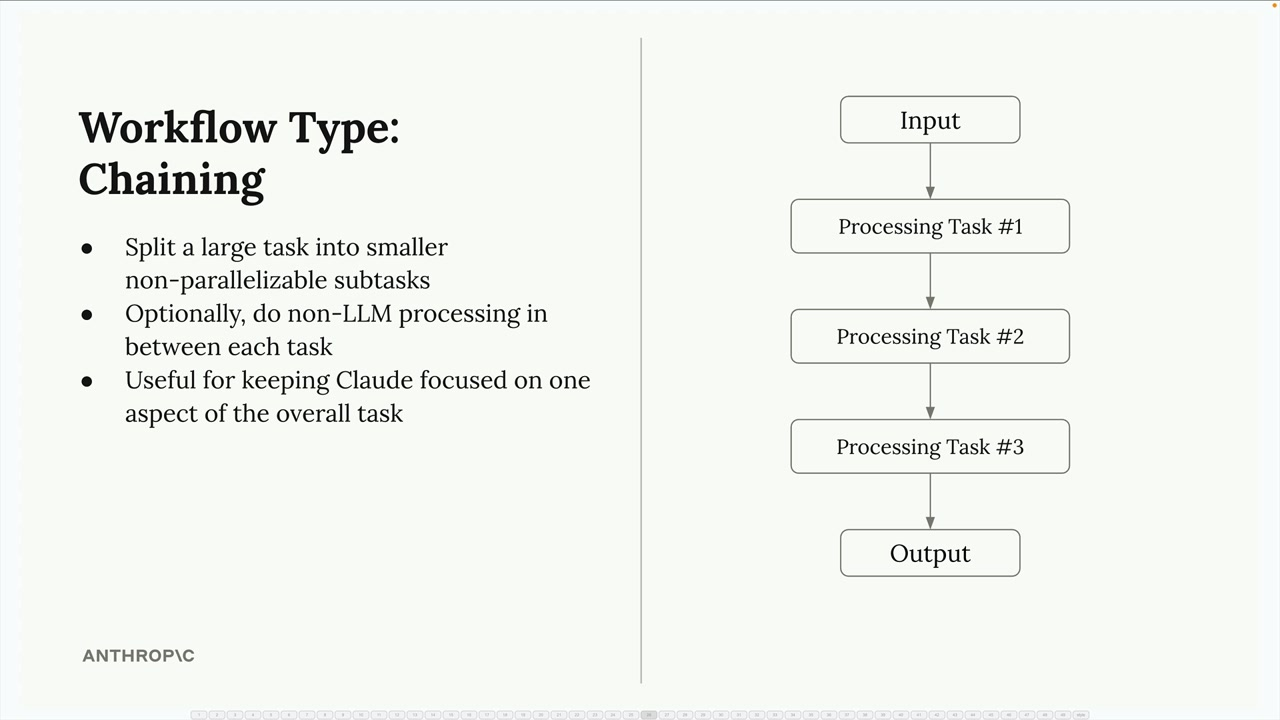

Chaining Workflows

Break a large task down into smaller sequential subtasks that build on one another, instead of using a single large prompt. Smaller steps are also easier to compose and reuse.

Benefits

- Split large tasks into smaller, non-parallelizable subtasks

- Optionally do non-LLM processing between tasks

- Keep Claude focused on one aspect of the overall task
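The chaining idea can be sketched as a list of steps, each receiving the previous step's output. `outline_step` and `draft_step` are hypothetical stubs for two focused LLM prompts, and `parse_outline` shows the optional non-LLM processing in between; none of these names come from the course.

```python
import re
from typing import Any, Callable, List


# Hypothetical LLM steps, stubbed so the example runs.
def outline_step(topic: str) -> str:
    return f"1. What {topic} is\n2. When to use {topic}\n3. Pitfalls of {topic}"


def draft_step(points: List[str]) -> str:
    return " ".join(f"Section on {point}." for point in points)


# Non-LLM processing between steps: turn the numbered outline into a list.
def parse_outline(text: str) -> List[str]:
    return [
        re.sub(r"^\d+\.\s*", "", line).strip()
        for line in text.splitlines()
        if line.strip()
    ]


def run_chain(value: Any, steps: List[Callable[[Any], Any]]) -> Any:
    """Feed each step the previous step's output, in order."""
    for step in steps:
        value = step(value)
    return value


article = run_chain("chaining", [outline_step, parse_outline, draft_step])
```

Because each step is an ordinary function, steps can be swapped, reused in other chains, or tested on their own.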

The Long Prompt Problem and Chaining

If you write one long prompt with lots of conditions, the generated output may miss the spec or get a few of the conditions wrong.

Instead, you can use a two-step chain: take the original response, then use a second LLM call to correct the mistakes.
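One way to set up the second step, sketched under the assumption that some constraints can be checked in code: a plain Python check lists what the first response got wrong, and only those issues go into the correction prompt. The constraints (`MAX_WORDS`, `BANNED`), the prompt wording, and `call_llm` are hypothetical; the notes only say that a second LLM call corrects the mistakes, which could also be done by passing it the full list of constraints.

```python
from typing import List

# Hypothetical constraints taken from a long prompt, checked in plain Python.
MAX_WORDS = 12
BANNED = {"very", "really"}


def find_violations(text: str) -> List[str]:
    """Step between the two LLM calls: list which constraints the draft broke."""
    words = text.lower().replace(".", "").split()
    problems = []
    if len(words) > MAX_WORDS:
        problems.append(f"Use at most {MAX_WORDS} words (currently {len(words)}).")
    for word in sorted(BANNED & set(words)):
        problems.append(f'Remove the word "{word}".')
    return problems


def build_fix_prompt(draft: str, problems: List[str]) -> str:
    """Prompt for the second LLM call: fix only the listed issues."""
    issues = "\n".join(f"- {p}" for p in problems)
    return (
        "Revise the text below. Fix only these issues and change nothing else:\n"
        f"{issues}\n\n<text>\n{draft}\n</text>"
    )


draft = "This is a really very good summary."  # pretend output of the first call
problems = find_violations(draft)
if problems:
    fix_prompt = build_fix_prompt(draft, problems)
    # corrected = call_llm(fix_prompt)  # hypothetical client call, not shown
```

Naming the specific failures keeps the second prompt short and focused, which is the same motivation as splitting the long prompt in the first place.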

You should consider using chaining when:

- You have complex tasks with multiple requirements

- Claude consistently ignores some constraints in long prompts

- You need to process or validate outputs between steps

- You want to keep each interaction focused and manageable

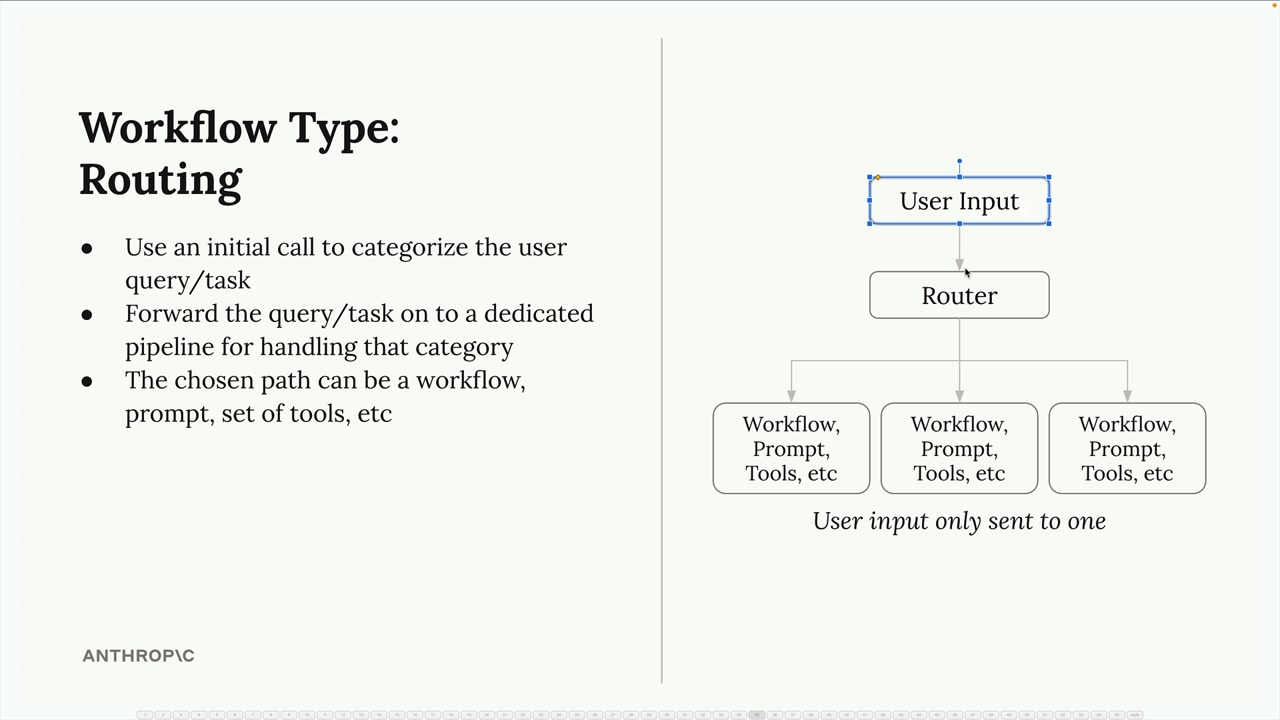

Routing Workflows

Categorize incoming requests and then route them to specialized processing pipelines, each with its own optimized prompts and workflow.

Works well when:

- Your application handles diverse types of requests that need different approaches

- You can clearly define categories that cover your use cases

- The categorization step can be handled reliably by Claude

- The performance benefit of specialized processing outweighs the overhead of the routing step
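A routing step can be sketched as a classifier followed by a lookup table of handlers. The categories, the keyword-based `classify` (a stand-in for an LLM classification call), and the handler functions are all hypothetical; the fallback to `handle_general` is an assumed safeguard for labels the classifier should not have produced.

```python
from typing import Callable, Dict


# Hypothetical classifier; in practice an LLM call told to answer with
# exactly one of the known category labels.
def classify(request: str) -> str:
    text = request.lower()
    if "refund" in text or "invoice" in text:
        return "billing"
    if "error" in text or "crash" in text:
        return "technical"
    return "general"


# Each route would use its own specialized prompt and pipeline.
def handle_billing(request: str) -> str:
    return f"[billing pipeline] {request}"


def handle_technical(request: str) -> str:
    return f"[technical pipeline] {request}"


def handle_general(request: str) -> str:
    return f"[general pipeline] {request}"


ROUTES: Dict[str, Callable[[str], str]] = {
    "billing": handle_billing,
    "technical": handle_technical,
    "general": handle_general,
}


def route(request: str) -> str:
    category = classify(request)
    # Fall back to the general pipeline if the classifier returns an unknown label.
    handler = ROUTES.get(category, handle_general)
    return handler(request)
```

The extra classification call is the routing overhead mentioned above; it pays off only if the specialized handlers do noticeably better than one general prompt.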

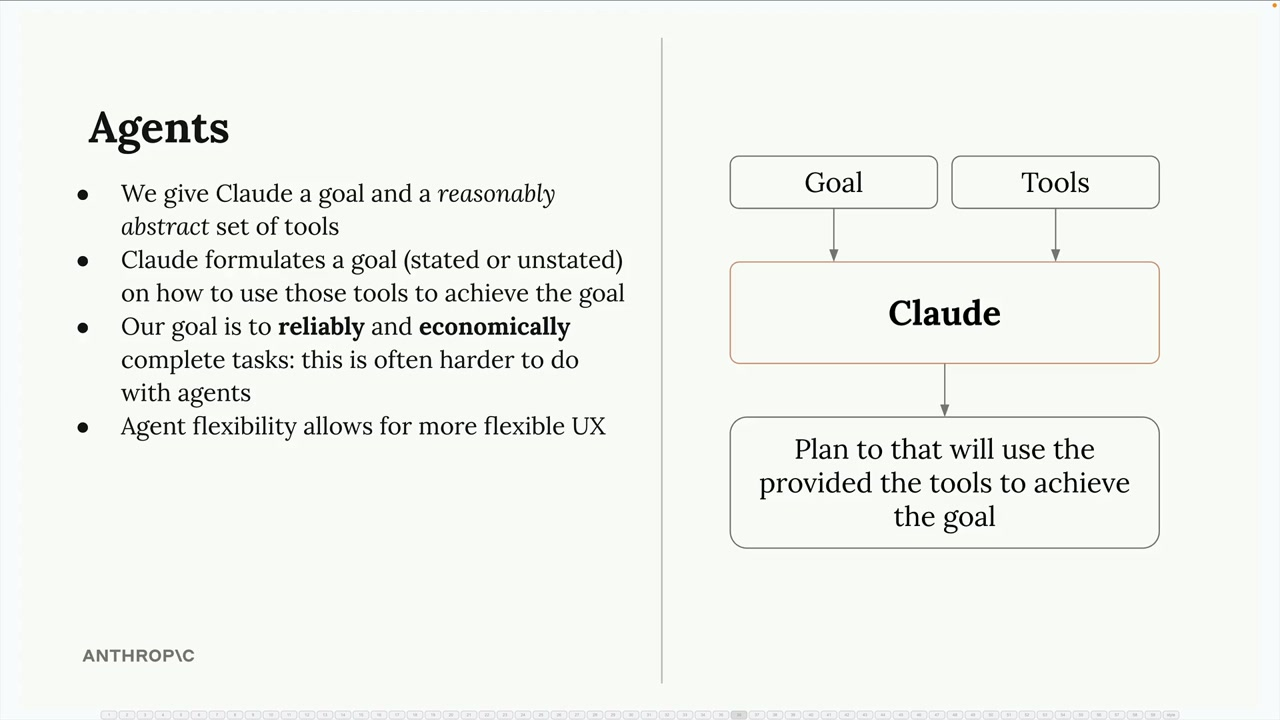

Agents

Agents are a good fit when there is no well-defined sequence of steps: you provide a goal and a set of tools, and Claude works out how to combine them to achieve it. The tradeoff is that this flexibility comes with lower reliability and higher cost.
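The core of an agent is a loop: the model picks a tool, the tool runs, and the result is added to the history the model sees on its next turn, until the model decides it is done. In this sketch the model is replaced by `scripted_model`, which hard-codes its decisions so the example is runnable; the tool names and the action format (`tool`/`args`/`final`) are hypothetical, not the format of any real API. The `max_steps` cap is an assumption that bounds cost if the model never finishes.

```python
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical tools exposed to the agent.
TOOLS: Dict[str, Callable[..., str]] = {
    "add": lambda a, b: str(a + b),
    "multiply": lambda a, b: str(a * b),
}


def scripted_model(goal: str, history: List[Tuple[str, str]]) -> Dict[str, Any]:
    """Stand-in for the LLM deciding the next action.

    A real agent would send the goal, tool descriptions and history to Claude.
    This script hard-codes the decisions needed for "(2 + 3) * 4".
    """
    if not history:
        return {"tool": "add", "args": (2, 3)}
    if len(history) == 1:
        return {"tool": "multiply", "args": (int(history[-1][1]), 4)}
    return {"final": history[-1][1]}


def run_agent(goal: str, model=scripted_model, max_steps: int = 10) -> str:
    history: List[Tuple[str, str]] = []
    for _ in range(max_steps):
        action = model(goal, history)
        if "final" in action:                     # the model decides it is done
            return action["final"]
        result = TOOLS[action["tool"]](*action["args"])
        history.append((action["tool"], result))  # result is visible next turn
    raise RuntimeError("agent did not finish within max_steps")


answer = run_agent("Compute (2 + 3) * 4")
```

Unlike the workflows above, nothing in `run_agent` fixes the order of tool calls; that decision lives entirely in the model.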

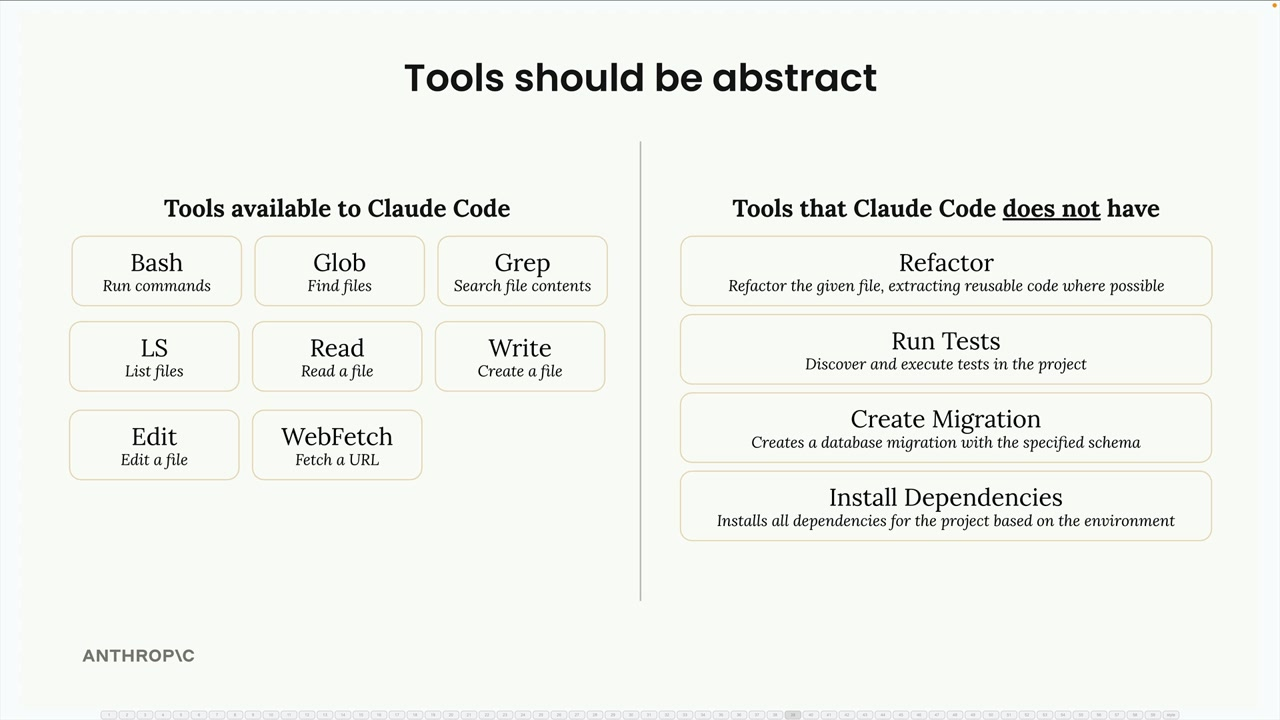

Claude chains tools together to achieve more complex outcomes, so good tools should be sufficiently abstract to allow for composition.

Environment Inspection

Agents need to inspect their environment to learn the effects of their actions. With computer use, for example, a screenshot is provided after an action is executed so that the LLM can see its result.

Some examples:

- Reading file contents before modifications

- Taking screenshots after UI interactions

- Checking API responses for expected data

- Validating generated content against requirements
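For the API case, inspection can be sketched as a helper that turns a raw response into an observation the agent reads before deciding its next step. `inspect_api_response` and its message formats are hypothetical; the point is that problems are reported back to the model as text rather than silently ignored.

```python
import json
from typing import Set


def inspect_api_response(raw: str, required_keys: Set[str]) -> str:
    """Turn a raw API response into an observation the agent can act on."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return f"ERROR: response is not valid JSON ({exc.msg})"
    if not isinstance(data, dict):
        return f"ERROR: expected a JSON object, got {type(data).__name__}"
    missing = required_keys - set(data)
    if missing:
        return f"ERROR: missing keys {sorted(missing)}"
    return "OK: " + json.dumps(data, sort_keys=True)


ok = inspect_api_response('{"status": "done", "id": 1}', {"id", "status"})
bad = inspect_api_response('{"id": 1}', {"id", "status"})
```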

Reading Before Writing

This means understanding the existing content and structure before modifying it.
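A sketch of reading before writing for a simple file edit: the agent reads the file, changes a line only if the content it expects is actually there, and reads the file back to confirm the write. `replace_line` and the `settings.txt` contents are hypothetical, and a temporary directory keeps the example self-contained.

```python
import os
import tempfile


def replace_line(path: str, old: str, new: str) -> str:
    """Read first, edit only if the expected line exists, then verify the write."""
    with open(path, encoding="utf-8") as f:
        lines = f.read().splitlines()
    if old not in lines:
        # Reading first reveals that the agent's assumption about the file was wrong.
        return f"NOT CHANGED: {old!r} not found; current lines: {lines}"
    lines[lines.index(old)] = new
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(lines) + "\n")
    with open(path, encoding="utf-8") as f:  # inspect the result of the write
        written = f.read().splitlines()
    return "CHANGED" if new in written else "WRITE FAILED"


with tempfile.TemporaryDirectory() as tmp:
    cfg = os.path.join(tmp, "settings.txt")
    with open(cfg, "w", encoding="utf-8") as f:
        f.write("debug = true\nport = 8080\n")
    first = replace_line(cfg, "debug = true", "debug = false")
    second = replace_line(cfg, "debug = true", "debug = false")  # line already changed
```

Returning the current file contents on failure gives the model what it needs to adjust its next attempt instead of overwriting blindly.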

Benefits

- Better progress tracking - Claude can gauge how close it is to completing a task

- Error handling - Unexpected results can be detected and corrected

- Quality assurance - Output can be verified before considering a task complete

- Adaptive behavior - Claude can adjust its approach based on what it observes