Claude with Anthropic

Introductory notes from Anthropic's course on Claude. Includes multi-turn conversations, temperature, prefilling, stop sequences.

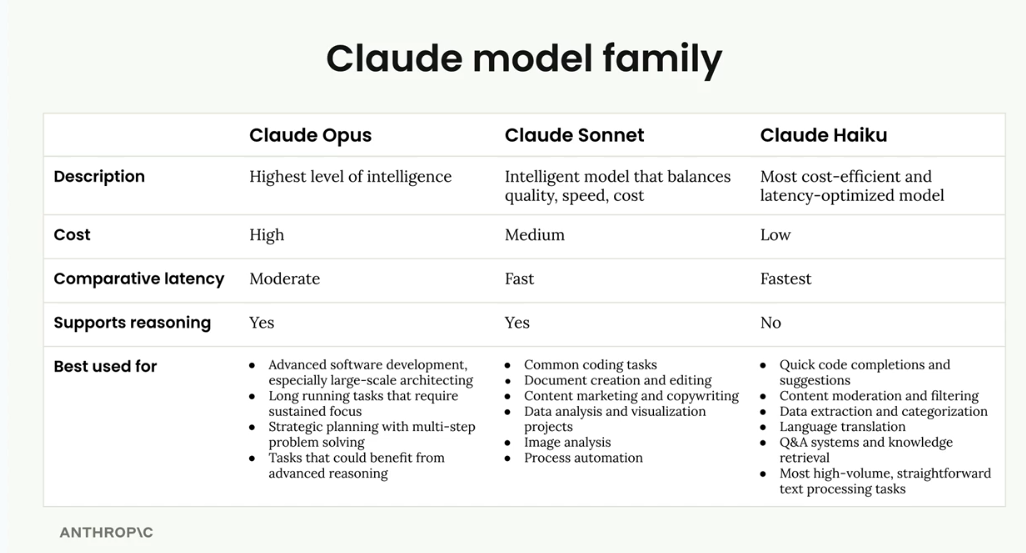

Claude Model Families

Choose a model by trading off intelligence, cost and latency. The three models can also be combined, each handling different tasks or different parts of a business.

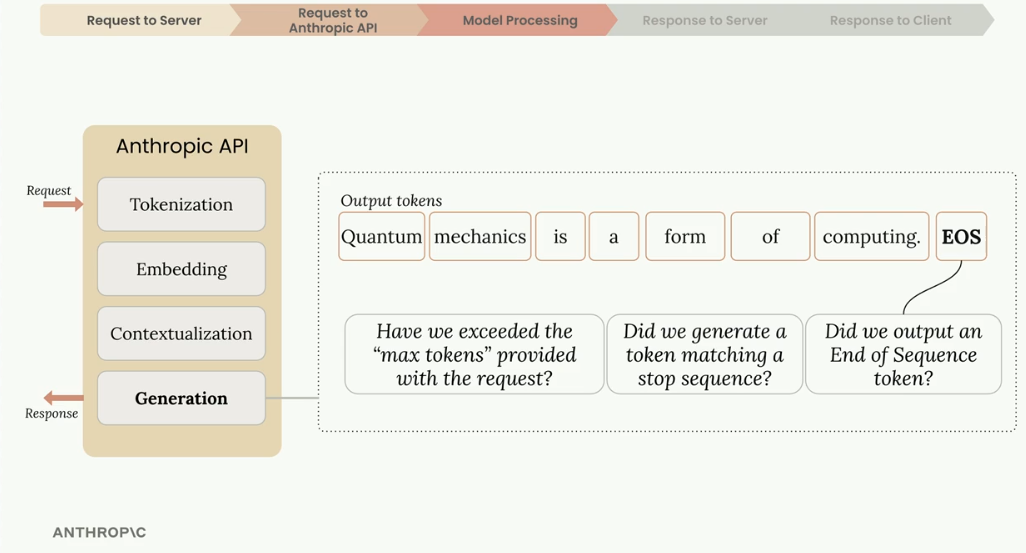

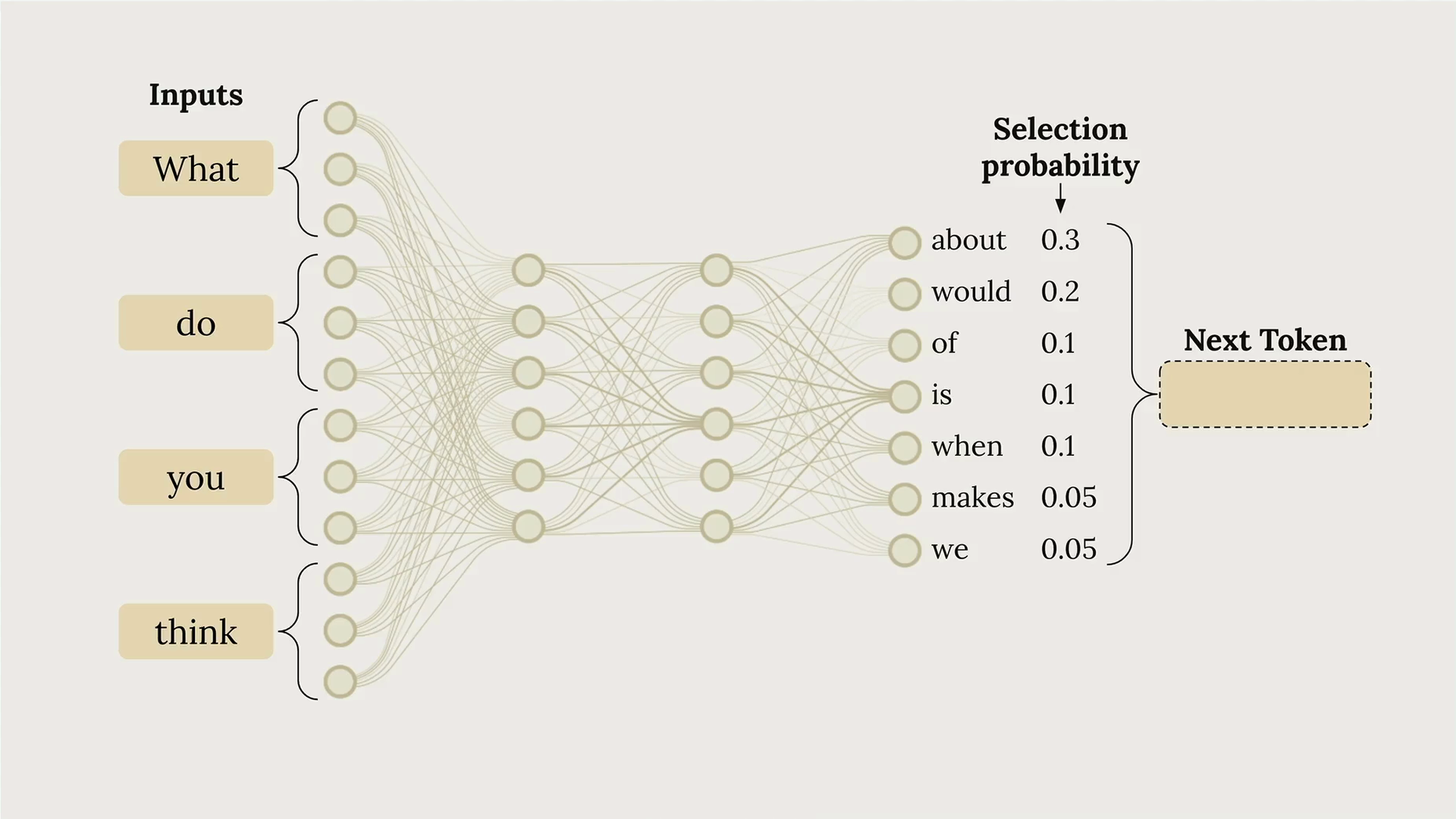

High Level Text Generation Process

- Tokenization - split the input text into tokens

- Embedding - map each token to a vector (its token embedding)

- Contextualization - refine each embedding based on its context (the same token can have different meanings depending on its neighbouring tokens)

- Generation - use the refined embeddings to produce a probability distribution over the next token at the neural net's output layer

EOS - Generation stops either naturally, when the model emits an end-of-sequence (EOS) token, or when it reaches the maximum number of tokens allotted in the request.
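Here is a toy sketch of the generation step and the two ways it can end. The bigram table `TOY_MODEL` is entirely hypothetical and stands in for the neural net. Decoding is greedy, so it always picks the most probable next token. The returned stop reasons mirror the API's `stop_reason` values `end_turn` and `max_tokens`.

```python
# Hypothetical toy "model": for each previous token, a probability
# distribution over the next token. A real LLM computes this distribution
# from contextualized embeddings; here it is a hand-written table.
TOY_MODEL = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.7, "dog": 0.3},
    "a": {"dog": 0.8, "cat": 0.2},
    "cat": {"sat": 0.9, "<eos>": 0.1},
    "dog": {"ran": 0.6, "<eos>": 0.4},
    "sat": {"<eos>": 1.0},
    "ran": {"<eos>": 1.0},
}
EOS = "<eos>"


def generate(max_tokens):
    """Greedy decoding: repeatedly pick the most probable next token."""
    tokens = []
    prev = "<start>"
    while len(tokens) < max_tokens:
        dist = TOY_MODEL[prev]
        next_token = max(dist, key=dist.get)
        if next_token == EOS:
            return tokens, "end_turn"  # natural end: the model emitted EOS
        tokens.append(next_token)
        prev = next_token
    return tokens, "max_tokens"  # ran out of allotted tokens


print(generate(max_tokens=10))  # (['the', 'cat', 'sat'], 'end_turn')
print(generate(max_tokens=2))   # (['the', 'cat'], 'max_tokens')
```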

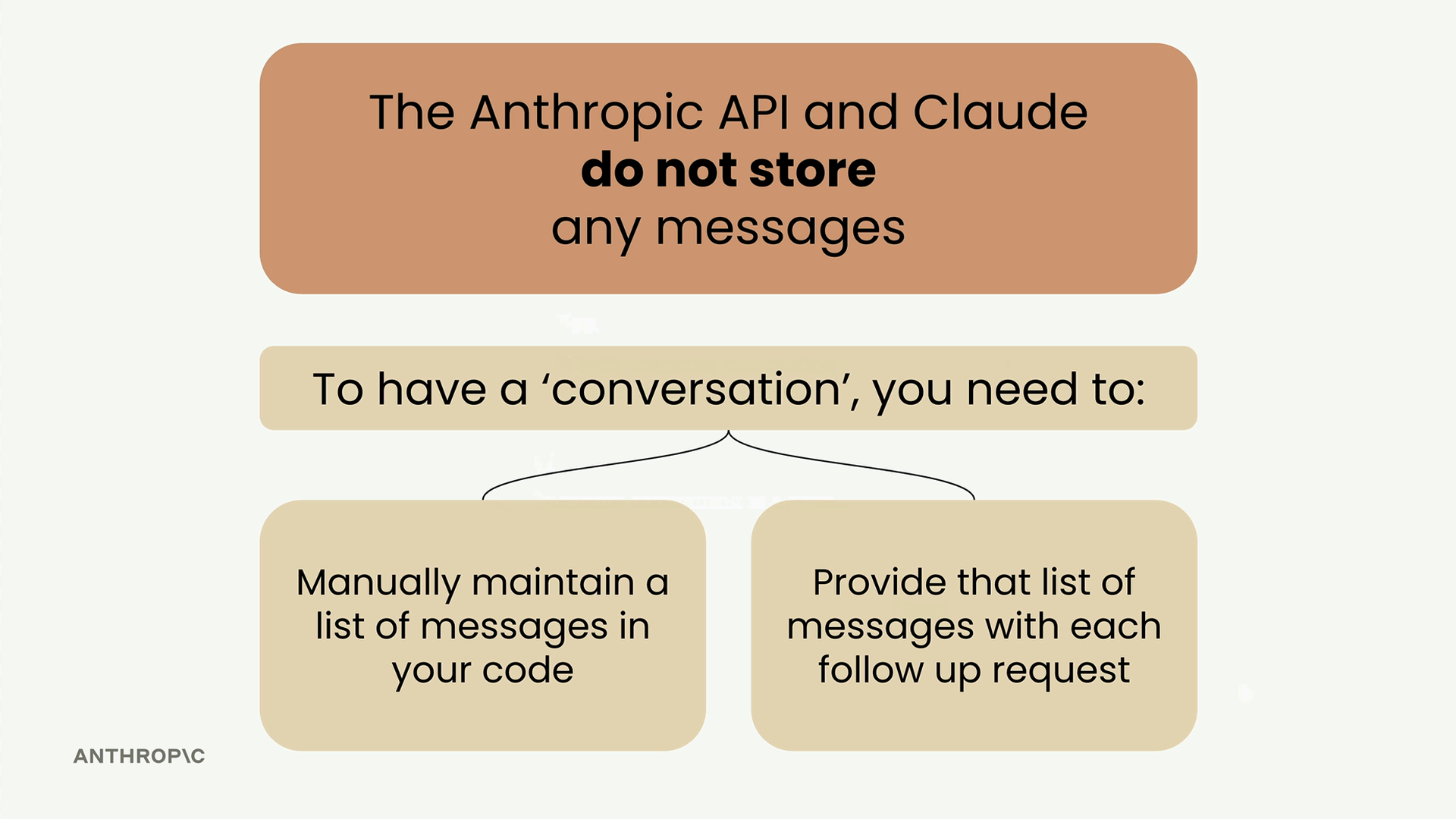

Multi-Turn Conversations

Claude doesn't store any of your conversation history.

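Each request you make is completely independent, with no memory of previous exchanges. To continue a conversation, store the messages yourself and send the full history with every request.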
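The following is a minimal sketch of keeping the history on the client side. It assumes the Messages API's list of `{"role", "content"}` dicts. The helper names `add_user_message` and `add_assistant_message` are hypothetical, and the assistant text is a stand-in for a real reply.

```python
def add_user_message(messages, text):
    """Hypothetical helper: append a user turn to the locally stored history."""
    messages.append({"role": "user", "content": text})


def add_assistant_message(messages, text):
    """Hypothetical helper: append Claude's reply so later requests include it."""
    messages.append({"role": "assistant", "content": text})


messages = []
add_user_message(messages, "Define quantum computing in one sentence.")
# In a real app this text would be read from the API response.
add_assistant_message(messages, "(Claude's first reply)")
add_user_message(messages, "Now write another sentence.")

# The whole list is sent with the next request, e.g.
# client.messages.create(model=..., max_tokens=1000, messages=messages)
print([m["role"] for m in messages])
```

Because Claude keeps no state, dropping a turn from this list means Claude never sees it.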

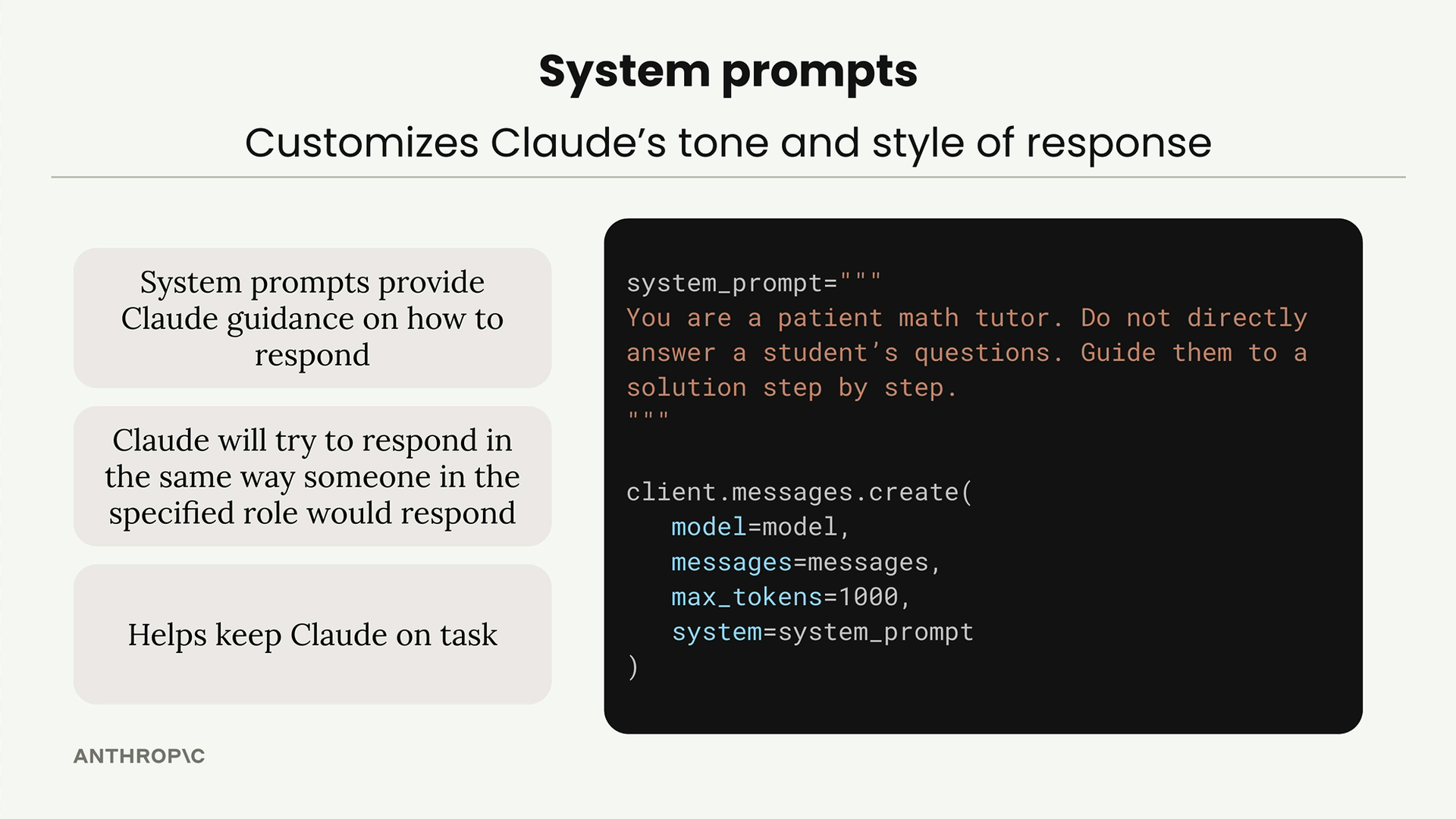

System Prompts

System prompts customise the tone and style of Claude's responses.
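This sketch shows where the system prompt goes. It assumes the Python SDK call `client.messages.create(**params)`. The system prompt is a top-level `system` parameter, not a message inside `messages`. `build_params` and the model ID placeholder are hypothetical.

```python
MODEL = "your-model-id"  # placeholder: substitute a current Claude model ID


def build_params(messages, system=None, max_tokens=1000):
    """Hypothetical helper that assembles keyword arguments for messages.create."""
    params = {"model": MODEL, "max_tokens": max_tokens, "messages": messages}
    if system is not None:
        # The system prompt is a top-level field, not a message with a "system" role.
        params["system"] = system
    return params


params = build_params(
    [{"role": "user", "content": "How do I reverse a list in Python?"}],
    system="You are a patient tutor. Give hints rather than full solutions.",
)
# response = anthropic.Anthropic().messages.create(**params)
print(sorted(params))
```

The `system` key is left out entirely when there is no system prompt, rather than being sent as `None`.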

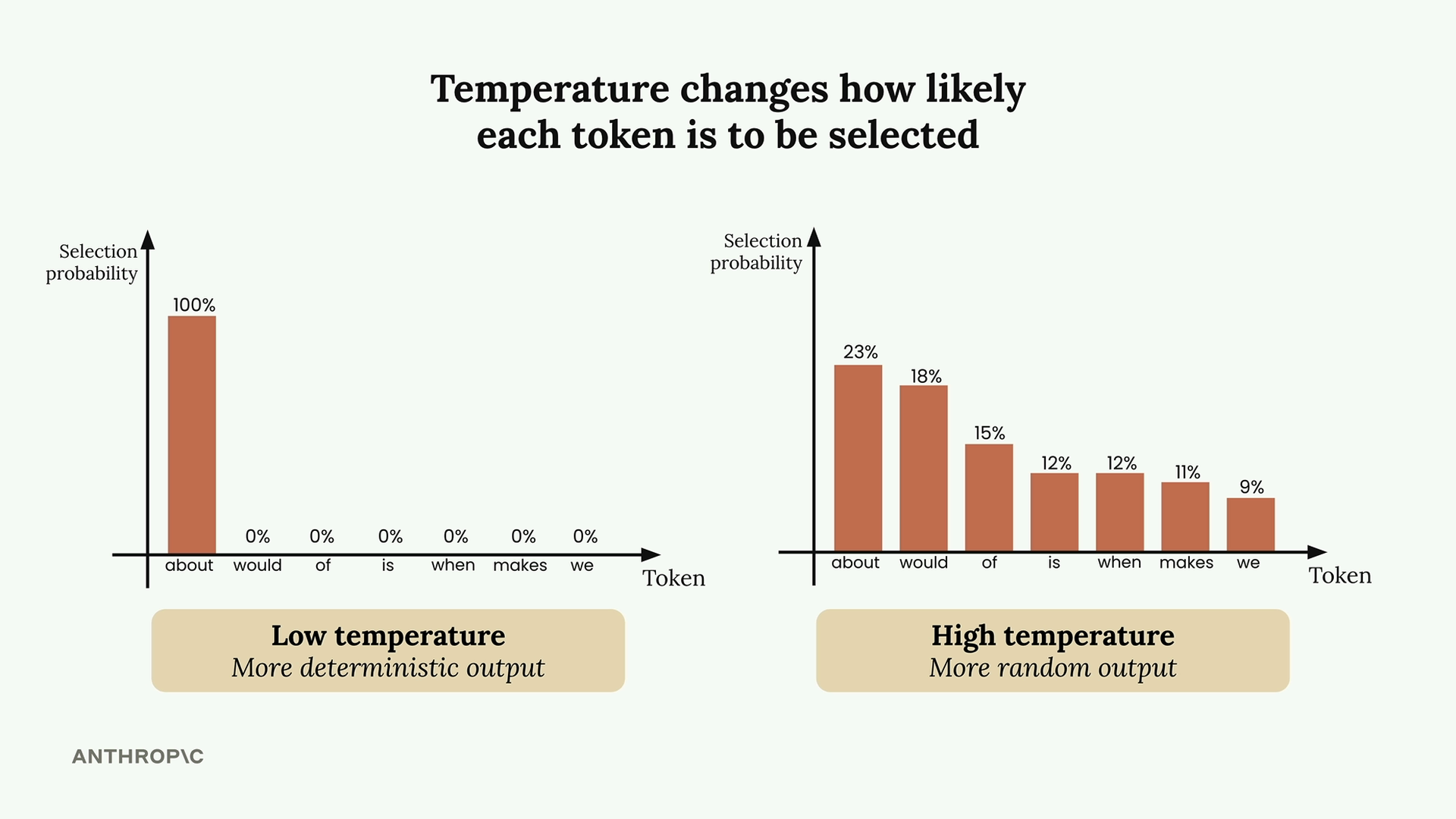

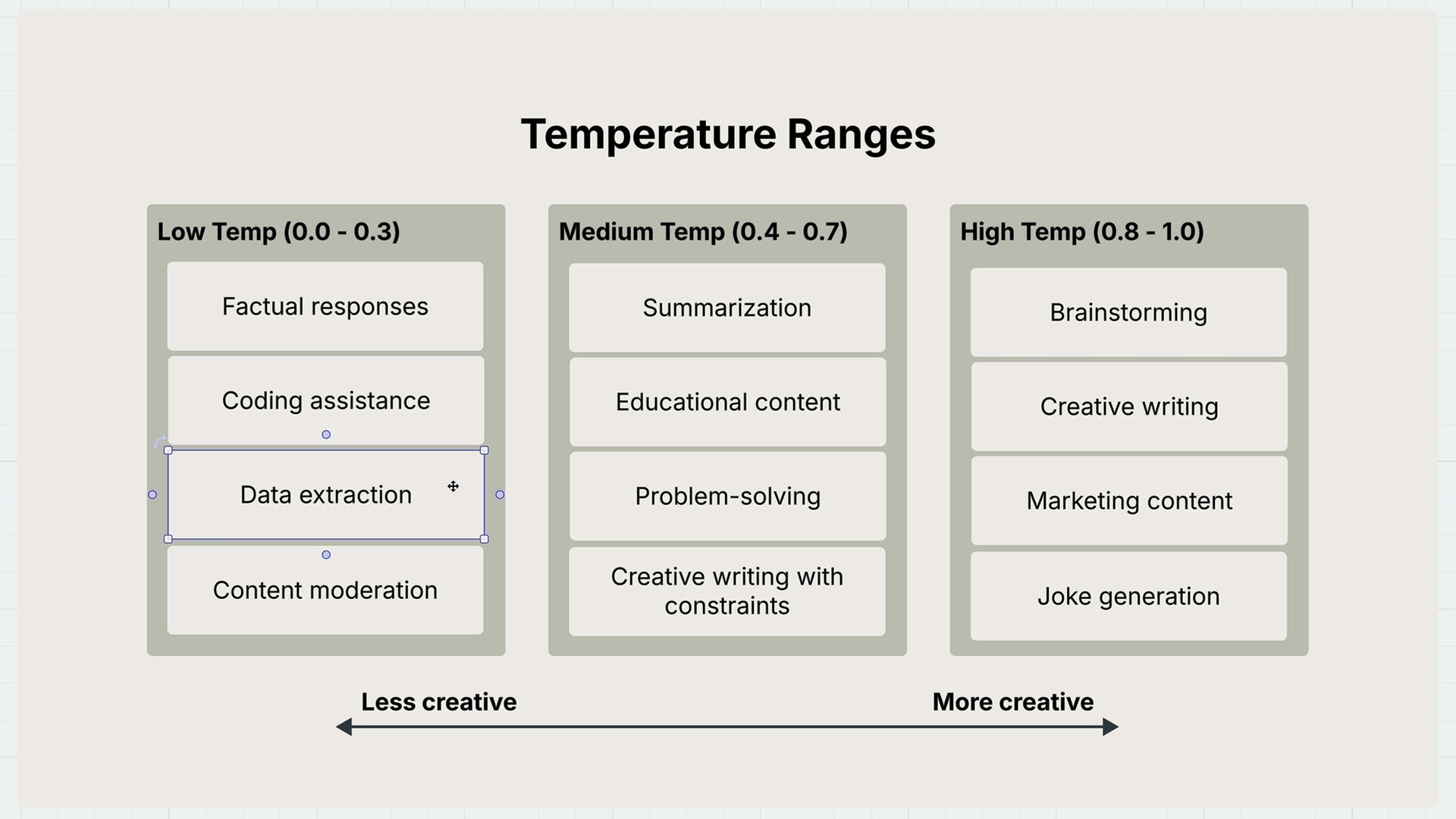

Temperature

A parameter that controls how the next token is selected. Lower temperatures are more deterministic, i.e. the model tends to choose the highest-probability next token. At higher temperatures, the selection probability is spread across more candidate tokens. In the Claude API, temperature ranges from 0 to 1.

Different tasks can call for different temperature selection strategies:

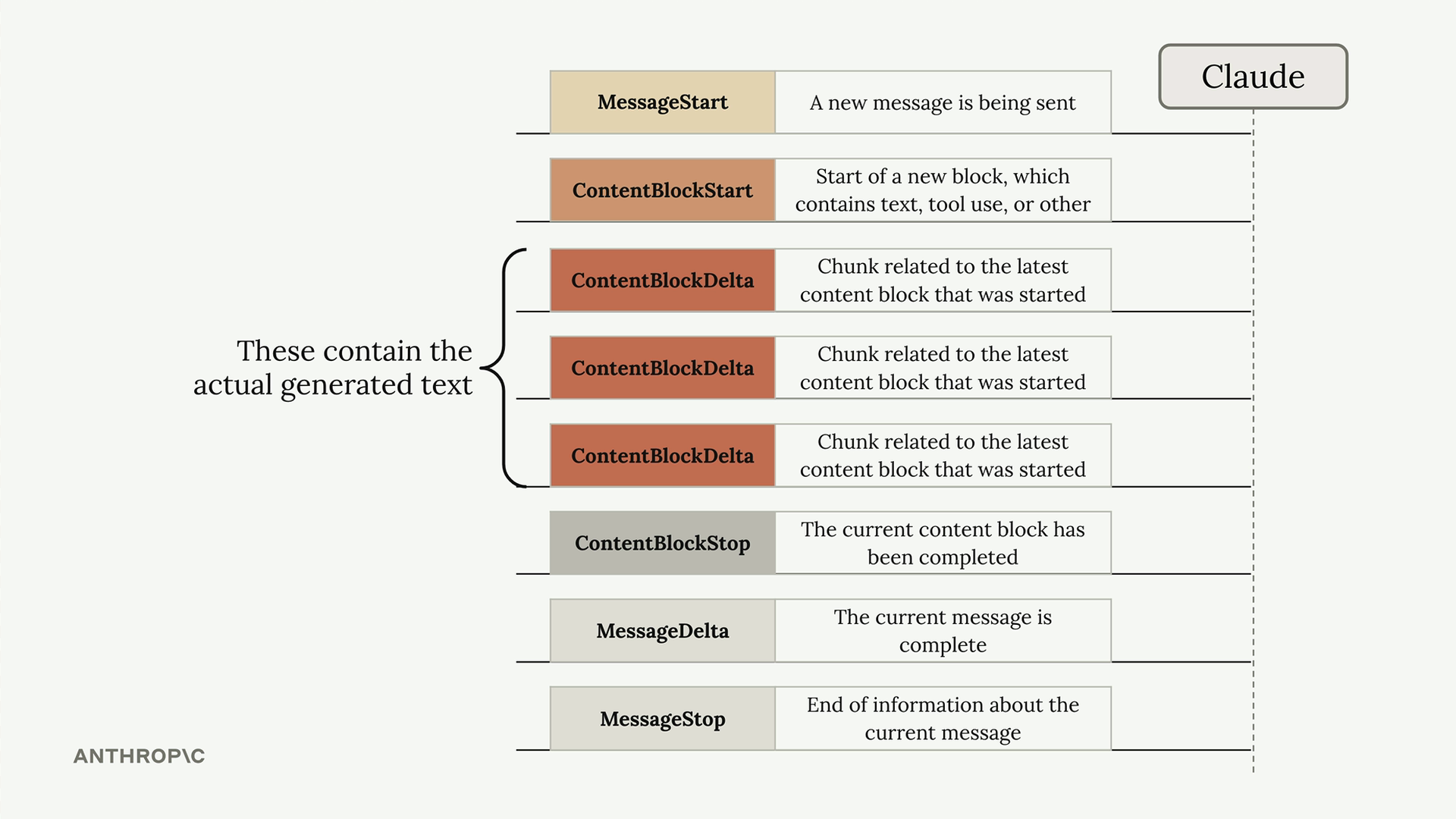
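A common textbook way to apply temperature is to divide the model's raw scores (logits) by it before the softmax. Anthropic does not document Claude's exact sampling procedure, so treat this as an illustration of the idea rather than Claude's implementation. `softmax_with_temperature` is a hypothetical helper, the logits are made up, and temperature 0 is treated as greedy selection.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into next-token probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        # Limit case: all probability goes to the highest-scoring token (greedy).
        best = logits.index(max(logits))
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (0, 0.2, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

As the temperature rises, probability moves away from the top token toward the alternatives.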

Streaming Response Model

- MessageStart - A new message is being sent

- ContentBlockStart - Start of a new block containing text, tool use, or other content

- ContentBlockDelta - Chunks of the actual generated text

- ContentBlockStop - The current content block has been completed

- MessageDelta - Top-level updates to the message once generation finishes, such as the stop reason and final token usage

- MessageStop - The final event; the stream for this message is complete
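The sketch below reassembles a reply from streamed events. The `events` list is a hand-written sample shaped like the API's streamed JSON events, whose types use snake_case names such as `content_block_delta`. The values are made up, and `collect_stream` is a hypothetical helper.

```python
# Hand-written sample events, shaped like the API's streamed events (values are made up).
events = [
    {"type": "message_start", "message": {"role": "assistant", "content": []}},
    {"type": "content_block_start", "index": 0, "content_block": {"type": "text", "text": ""}},
    {"type": "content_block_delta", "index": 0, "delta": {"type": "text_delta", "text": "Hello"}},
    {"type": "content_block_delta", "index": 0, "delta": {"type": "text_delta", "text": ", world"}},
    {"type": "content_block_stop", "index": 0},
    {"type": "message_delta", "delta": {"stop_reason": "end_turn"}, "usage": {"output_tokens": 4}},
    {"type": "message_stop"},
]


def collect_stream(events):
    """Rebuild the reply text from text deltas and pick up the stop reason."""
    text_parts = []
    stop_reason = None
    for event in events:
        kind = event["type"]
        if kind == "content_block_delta" and event["delta"]["type"] == "text_delta":
            text_parts.append(event["delta"]["text"])  # a chunk of generated text
        elif kind == "message_delta":
            stop_reason = event["delta"].get("stop_reason")  # message-level update
        elif kind == "message_stop":
            break  # nothing more arrives for this message
    return "".join(text_parts), stop_reason


print(collect_stream(events))
```

With the Python SDK, `client.messages.stream(...)` used as a context manager exposes `stream.text_stream`, which yields only the text chunks. Hand-rolled parsing like this is mainly useful for seeing the event order.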

Controlling Model Output

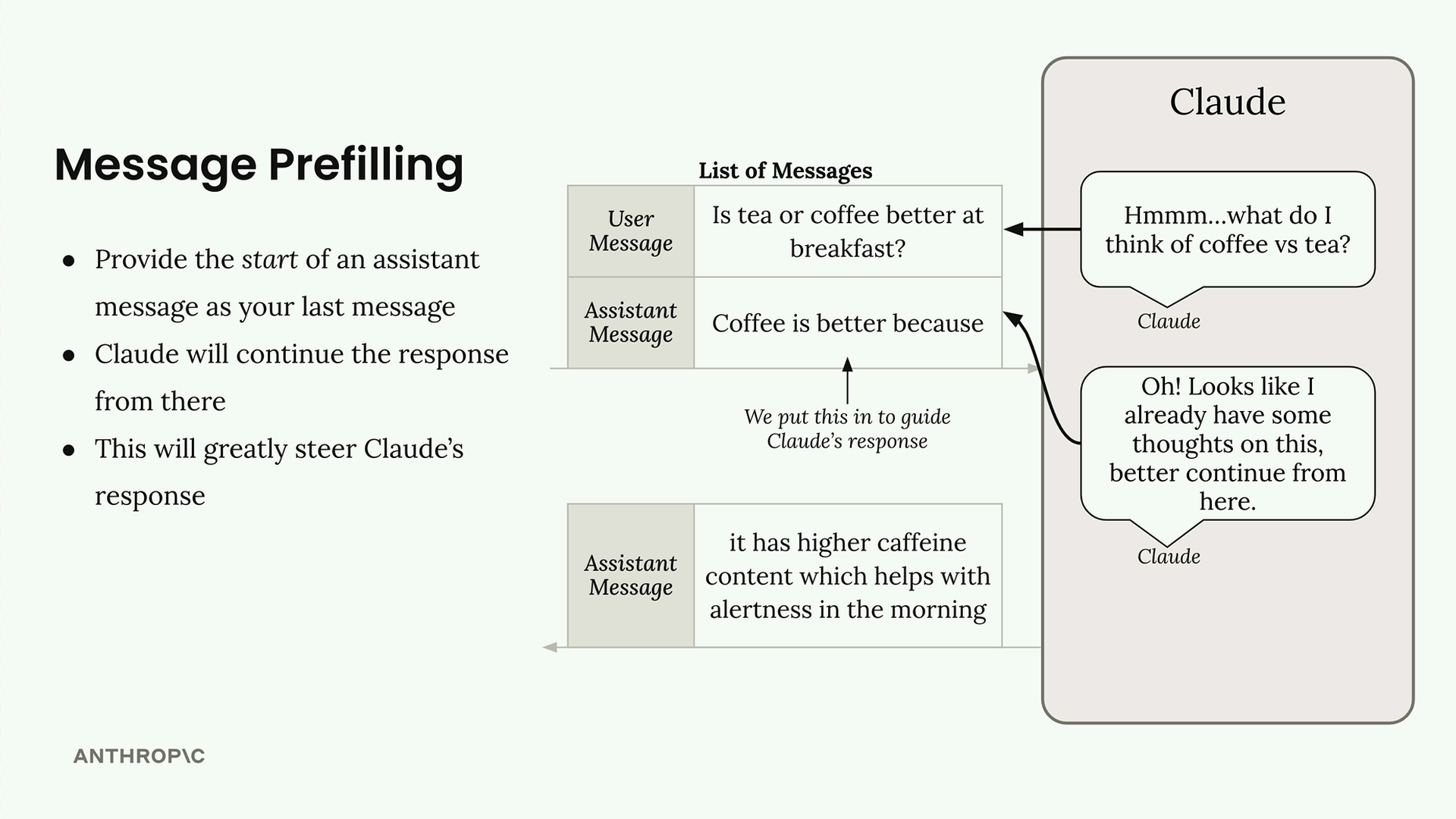

Message Prefilling

Prefilling means supplying the start of the assistant's reply as the last message in the API call. Claude continues from that text, which lets you steer the response.

You can steer the response in any direction, for example:

- Favor coffee: "Coffee is better because"

- Favor tea: "Tea is better because"

- Take a contrarian stance: "Neither is very good because"

Consistent formatting: Use prefilling to ensure responses always start with a specific structure
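Here is a minimal sketch of building a prefilled request. It assumes the Messages API format, where a trailing `assistant` message acts as the prefill. Claude's reply continues from the prefill without repeating it, so the two are joined afterwards. `with_prefill` is a hypothetical helper, and `simulated_reply` stands in for the API response text.

```python
PREFILL = "Coffee is better because"


def with_prefill(messages, prefill):
    """Hypothetical helper: return a new list ending with a partial assistant turn."""
    # The API rejects a final assistant message that ends in whitespace, so strip it.
    return messages + [{"role": "assistant", "content": prefill.rstrip()}]


messages = [{"role": "user", "content": "Is tea or coffee better at breakfast?"}]
request_messages = with_prefill(messages, PREFILL)
# request_messages would be passed as messages=... to client.messages.create

simulated_reply = " it has more caffeine."  # stand-in for the returned text
full_answer = PREFILL + simulated_reply  # the reply does not repeat the prefill
print(full_answer)
```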

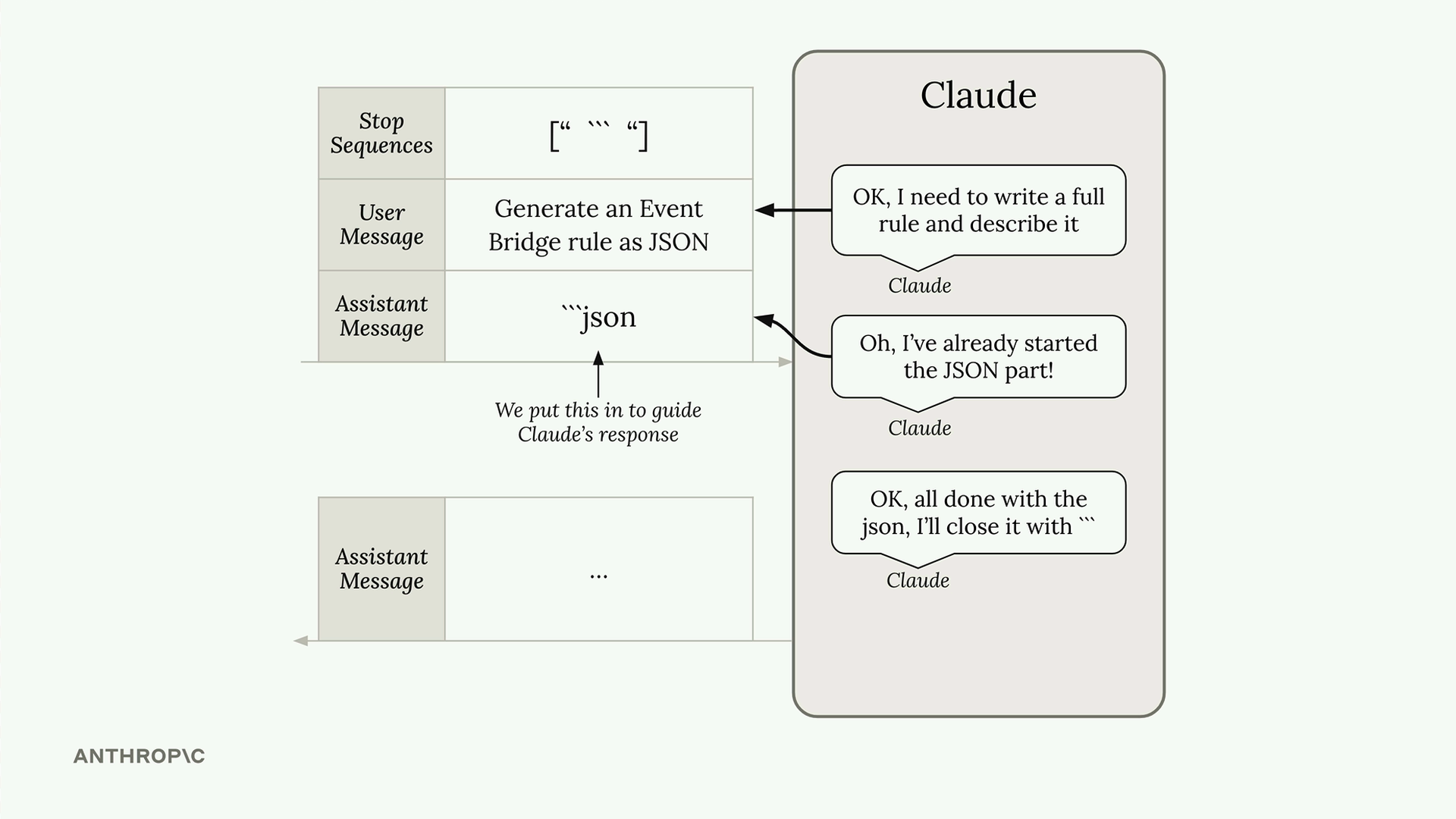

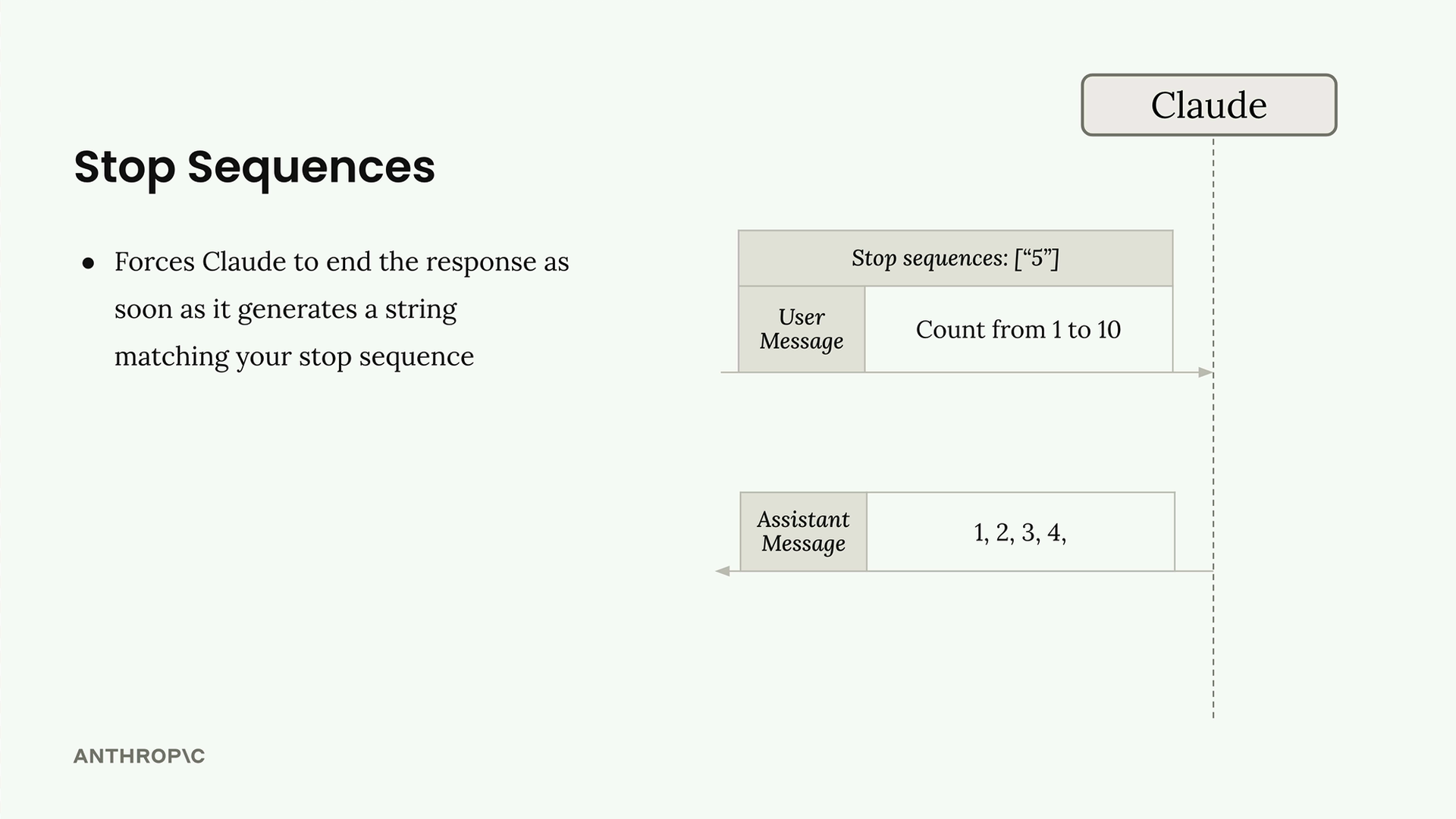

Stop Sequences

A stop sequence forces the response to end as soon as Claude generates a given string of characters, e.g. to control the length of a response. The stop sequence itself is not included in the returned text. Use stop sequences to cap responses at natural breakpoints.
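The API applies stop sequences server-side through the `stop_sequences` request parameter. The sketch below only simulates the effect on a finished string, to show that the output is cut just before the stop sequence. `apply_stop_sequences` is hypothetical and the counting text is illustrative. A real response would report `stop_reason == "stop_sequence"` and the matched sequence.

```python
def apply_stop_sequences(generated, stop_sequences):
    """Simulate stopping: cut the text before the earliest stop sequence found."""
    earliest = None  # (index, sequence) of the first match
    for seq in stop_sequences:
        idx = generated.find(seq)
        if idx != -1 and (earliest is None or idx < earliest[0]):
            earliest = (idx, seq)
    if earliest is None:
        return generated, None  # no stop sequence: text returned unchanged
    return generated[:earliest[0]], earliest[1]


# As if a request "Count from 1 to 10" were sent with stop_sequences=["5"]
full_text = "1, 2, 3, 4, 5, 6, 7, 8, 9, 10"
print(apply_stop_sequences(full_text, ["5"]))
```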

Structured Data

The key is identifying what Claude naturally wants to wrap your content in, then using that as your prefill and stop sequence. For code, it's usually markdown code blocks. For lists, it might be different formatting markers.

Combine assistant prefilling with a stop sequence to extract just the content you need.
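The sketch below shows the prefill-plus-stop-sequence pattern for JSON. The prefill opens a markdown JSON code block, and a stop sequence of three backticks ends generation when Claude would close it. That leaves only the JSON in the reply. The helper names and model ID are hypothetical placeholders, and `simulated_reply` stands in for real API output.

```python
import json

FENCE = "`" * 3  # three backticks, built this way to keep the example readable


def build_structured_request(prompt):
    """Hypothetical helper: request kwargs that make Claude emit bare JSON."""
    return {
        "model": "your-model-id",  # placeholder model ID
        "max_tokens": 1000,
        "messages": [
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": FENCE + "json"},  # prefill opens the block
        ],
        "stop_sequences": [FENCE],  # stop when Claude tries to close the block
    }


def parse_structured_reply(reply_text):
    """The reply is just the JSON body; json.loads ignores surrounding whitespace."""
    return json.loads(reply_text)


params = build_structured_request("Describe a user with a name and an age as JSON.")
simulated_reply = '\n{"name": "Ada", "age": 36}\n'  # stand-in for the API text
data = parse_structured_reply(simulated_reply)
print(data)
```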