Cost-Aware Prompt Optimization

Notes on "CAPO: Cost-Aware Prompt Optimization" (June 2025) from the Munich Center for Machine Learning.

Over the years there has been a paradigm shift from fine-tuning individual models for specific tasks to using a single pre-trained LLM to solve diverse problems via prompting, without additional training. This makes prompts, and the process by which they're developed, more important.

LLM Performance Drivers

LLM performance is sensitive to:

- Prompt quality

- Choice and order of examples provided

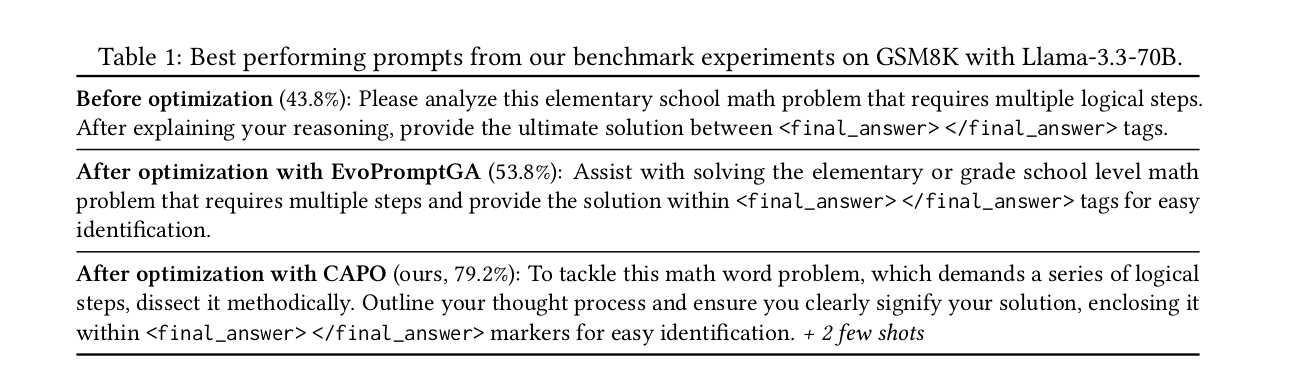

Semantically similar prompts can perform very differently.

Prompt performance also does not necessarily follow predictable patterns, so it is hard to model.

Automatic Prompt Optimization

Manual prompt optimization takes time and skill, which makes automatic prompt optimization an attractive option.

There are two main schools of automatic prompt optimization:

- Continuous approaches - learnable soft prompts: embedding vectors optimized with gradients through the LLM, which requires access to the model's parameters and produces prompts that are not human-interpretable.

- Discrete methods - operate directly on textual prompts, which suits black-box LLMs from providers where you don't have access to the model parameters. Prompt candidates are modified by a meta-LLM using a meta-prompt.

EvoPrompt is an example of a discrete framework; it relies on good-quality initial prompts for the specific task.
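EvoPrompt evolves a population of textual prompts, using a meta-LLM as the crossover and mutation operator. A minimal sketch of that loop in Python follows. It is not EvoPrompt's actual code: parent selection is uniform random (a simplification), and `meta_llm` and `score` are hypothetical callables, stubbed here by `toy_meta_llm` and `toy_score` so the example runs without an API. In practice `meta_llm` would call an LLM with the meta-prompt and `score` would measure something like development-set accuracy.

```python
import random
from typing import Callable, List


def evolve_prompts(
    initial_prompts: List[str],
    meta_llm: Callable[[str], str],  # hypothetical: meta-prompt in, new prompt text out
    score: Callable[[str], float],   # hypothetical: e.g. dev-set accuracy, higher is better
    generations: int = 3,
    population_size: int = 4,
    seed: int = 0,
) -> List[str]:
    """Evolve a population of textual prompts and return it sorted best-first."""
    rng = random.Random(seed)
    population = list(initial_prompts)  # needs at least two prompts to pick parents
    for _ in range(generations):
        children = []
        for _ in range(population_size):
            # Uniform random parent selection (a simplification of EvoPrompt's selection).
            a, b = rng.sample(population, 2)
            meta_prompt = (
                "Cross over the two prompts below, then mutate the result.\n"
                f"Prompt 1: {a}\nPrompt 2: {b}\nNew prompt:"
            )
            children.append(meta_llm(meta_prompt))
        # Survivor selection: deduplicate (keeping order) and keep the top scorers.
        pool = list(dict.fromkeys(population + children))
        population = sorted(pool, key=score, reverse=True)[:population_size]
    return population


# Stubs so the sketch runs offline; a real meta_llm would call an LLM.
def toy_meta_llm(meta_prompt: str) -> str:
    first_parent = meta_prompt.split("Prompt 1: ")[1].split("\n")[0]
    return first_parent + " Answer step by step."


def toy_score(prompt: str) -> float:
    # Rewards mentions of "step" and mildly penalizes length.
    return prompt.count("step") - 0.01 * len(prompt)


best = evolve_prompts(
    ["Classify the sentiment.", "Label the review as positive or negative."],
    toy_meta_llm,
    toy_score,
)
```

Note that every generation calls `score` on each surviving and new prompt; with a real LLM, each of those calls means evaluating the prompt on a dataset, which is where the token cost comes from.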

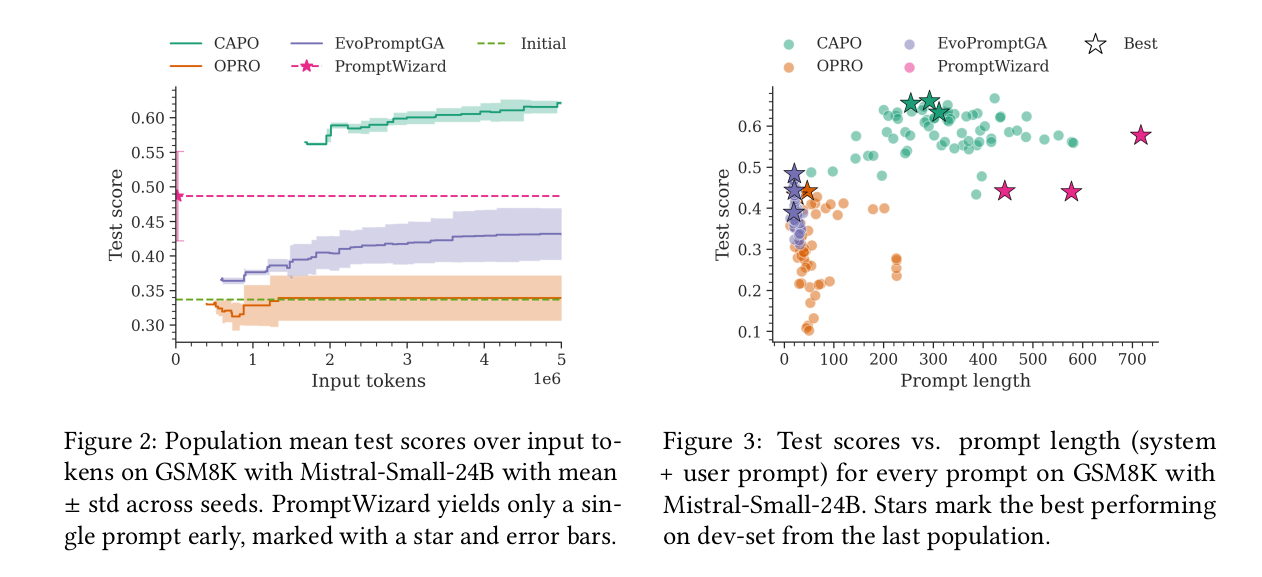

Optimizing prompts is also expensive: some existing prompt optimization methods require 4-6 million input tokens per task being optimized. A single optimization run can therefore cost anywhere between $30 and $300 before you even start using the prompt.
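The dollar figures come from simple arithmetic: tokens multiplied by the provider's per-token price. The helper below makes this explicit. The prices are hypothetical placeholders chosen for illustration (6 million input tokens at $5 or $50 per million), not any provider's actual rates, and caching or batch discounts are ignored.

```python
def optimization_cost_usd(input_tokens: int, usd_per_million_input: float,
                          output_tokens: int = 0, usd_per_million_output: float = 0.0) -> float:
    """Estimate the API cost of one optimization run from token counts and prices."""
    return (input_tokens * usd_per_million_input
            + output_tokens * usd_per_million_output) / 1_000_000


# Hypothetical prices, for illustration only.
cheap = optimization_cost_usd(6_000_000, 5.0)    # 30.0
pricey = optimization_cost_usd(6_000_000, 50.0)  # 300.0
```

Output tokens (for example, the meta-LLM's generated prompts) can be added through the last two parameters when a provider bills them separately.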

What is CAPO?

"Since we aim to maximize performance while keeping prompt length minimal, i.e., shorter instructions, fewer examples, and reasoning only when necessary, we implement a form of multi- objective optimization. This is particularly important given our inclusion of few-shot examples, which can considerably increase the prompt length"

- A discrete prompt optimization method built on AutoML techniques.

- Combines EvoPrompt (an existing discrete framework) with racing to improve cost efficiency by reducing the number of evaluations.

- Uses prompt length as part of its multi-objective function by penalizing length.

- Uses few-shot examples and task descriptions for robustness.

- Uses a shortened meta-prompt.

- Uses a genetic algorithm approach with a meta-LLM for crossover and mutation - child prompts are created from parent prompts.

- Few-shot examples are randomly shuffled after randomly selecting how many examples to include.
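The few-shot step in the last bullet, and how a task description might be folded into a crossover meta-prompt, can be sketched as follows. The bounds `k_min`/`k_max`, the meta-prompt wording, and all function names are illustrative assumptions, not CAPO's actual meta-prompt or default settings.

```python
import random
from typing import List, Sequence, Tuple

Example = Tuple[str, str]  # (input, expected output) pair from the training data


def sample_few_shot(pool: Sequence[Example], k_min: int, k_max: int,
                    rng: random.Random) -> List[Example]:
    """Randomly choose how many shots to use, pick that many examples, then shuffle them."""
    k = rng.randint(k_min, min(k_max, len(pool)))      # number of shots, inclusive bounds
    chosen = sorted(rng.sample(range(len(pool)), k))   # random subset, in dataset order
    shots = [pool[i] for i in chosen]
    rng.shuffle(shots)                                 # random order within the prompt
    return shots


def crossover_meta_prompt(task_description: str, parent_a: str, parent_b: str) -> str:
    """Hypothetical meta-prompt: the task description grounds the meta-LLM's rewrite."""
    return (
        f"Task description: {task_description}\n"
        f"Instruction 1: {parent_a}\n"
        f"Instruction 2: {parent_b}\n"
        "Merge the two instructions into one concise instruction for this task."
    )


def render_prompt(instruction: str, shots: List[Example]) -> str:
    """Assemble the final prompt: instruction followed by the few-shot examples."""
    demos = "\n".join(f"Input: {x}\nOutput: {y}" for x, y in shots)
    return f"{instruction}\n\n{demos}" if demos else instruction


rng = random.Random(42)
pool = [(f"review {i}", "positive" if i % 2 else "negative") for i in range(10)]
shots = sample_few_shot(pool, k_min=0, k_max=3, rng=rng)
print(render_prompt("Classify the sentiment of the review.", shots))
```

Allowing `k_min = 0` lets the search keep zero-shot prompts when extra examples don't pay for their length, which interacts with the length penalty described above.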

The paper includes an ablation study (a method for understanding the contribution of each component of a system by systematically removing or altering it) indicating that "few-shot example selection greatly enhances performance, racing improves cost-efficiency, the prompt length objective reduces average prompt length, and task descriptions make the algorithm robust to initial prompt quality".

What is Racing?

You can decompose an objective into multiple sub-objectives that are evaluated sequentially, for example a prompt's scores on successive blocks of the evaluation data. The sub-objectives are ordered so that weak candidates can be eliminated without evaluating all of them.

For example, if a candidate's score is below 0.5 after the first 3 sub-objectives, don't spend any more of the computational budget on it: drop that candidate and move on to the next. Not evaluating unpromising candidates any further is what saves budget (in this setting, LLM calls and tokens).
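A minimal sketch of this early-elimination idea, using the fixed-threshold rule from the example above: each candidate is scored block by block, and once it has been evaluated on `min_blocks` blocks it is dropped if its running mean falls below `threshold`. Racing procedures in AutoML usually compare candidates against each other with a statistical test rather than a fixed cutoff, so treat this as a simplification. `evaluate`, the block layout, and the scores are hypothetical.

```python
from typing import Callable, Dict, List, Sequence


def race(
    candidates: Sequence[str],
    blocks: Sequence[Sequence[int]],
    evaluate: Callable[[str, Sequence[int]], float],  # hypothetical: score of a prompt on one block
    min_blocks: int = 3,
    threshold: float = 0.5,
) -> Dict[str, List[float]]:
    """Evaluate candidates block by block, eliminating weak ones early.

    Returns the per-block scores collected for every candidate, so the number of
    evaluations spent on each one is visible.
    """
    scores: Dict[str, List[float]] = {c: [] for c in candidates}
    alive = list(candidates)
    for block in blocks:
        for c in alive:
            scores[c].append(evaluate(c, block))
        # After enough blocks, drop candidates whose mean score is below the threshold.
        alive = [
            c for c in alive
            if len(scores[c]) < min_blocks or sum(scores[c]) / len(scores[c]) >= threshold
        ]
        if not alive:
            break
    return scores


quality = {"good prompt": 0.8, "weak prompt": 0.3}            # toy, fixed per-prompt scores
blocks = [list(range(i, i + 10)) for i in range(0, 100, 10)]  # 10 blocks of 10 example ids
result = race(list(quality), blocks, lambda prompt, block: quality[prompt])
evaluations = {p: len(s) for p, s in result.items()}  # {'good prompt': 10, 'weak prompt': 3}
```

Here the weak prompt is dropped after 3 blocks, so the race spends 13 block evaluations instead of the 20 needed to evaluate both prompts on all the data.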

Summary of Conclusions

- Few-shot examples improve performance, especially for complex tasks

- The length penalty reduces average prompt length

- Racing provides substantial cost savings

- The performance gains come from few-shot examples, the length penalty, racing and their interplay

- Task descriptions make CAPO robust to generic initial instructions

Links

CAPO: Cost-Aware Prompt Optimization - Tom Zehle, Moritz Schlager, Timo Heiß, Matthias Feurer, Department of Statistics, LMU Munich, Munich Center for Machine Learning (MCML)