HTTP - Servers and Protocols

Notes on HTTP2 and web server threading models. Covers servlets, web server/container architecture (Jetty), HTTP2, HTTP streams (prioritization, flow control) and SSE.

What are Servlets?

A server-side Java API and programming model for handling HTTP requests and sending back responses. A lot of Java-based frameworks use Servlets under the hood.

A Servlet container hosts Servlets and decouples them from the web server implementation, so you can attach a servlet container to a web server.

You can annotate a class with @WebServlet (jakarta.servlet.annotation) to specify the path. Here are some examples for a GET and a POST:

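GET:

```java
import java.io.IOException;

import jakarta.servlet.ServletException;
import jakarta.servlet.annotation.WebServlet;
import jakarta.servlet.http.HttpServlet;
import jakarta.servlet.http.HttpServletRequest;
import jakarta.servlet.http.HttpServletResponse;

@WebServlet("/product")
public class ProductServlet extends HttpServlet {

    @Override
    protected void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
        // do stuff
    }
}
```

POST (same imports as the GET example):

```java
@WebServlet("/login")
public class LoginServlet extends HttpServlet {

    @Override
    protected void doPost(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
        // do stuff
    }
}
```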

Some servlets delegate one HTTP method's handler to another, for example handling POST exactly like GET:

```java
@Override
public void doPost(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
    // Reuse the GET handling logic for POST requests
    this.doGet(request, response);
}
```

ServletContext

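Created by the container at startup and used to register Servlets, Filters and Listeners; it lives as long as the web application runs. A Servlet's init() method is called once: at startup if the Servlet is configured to load on startup (e.g. via the loadOnStartup attribute of @WebServlet), otherwise when it handles its first request.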

This context is shared across all requests and all sessions.

Requests and Responses

The Servlet container creates (and may reuse) the HttpServletRequest and HttpServletResponse objects and passes them through the filter chain via doFilter().

The container then calls the Servlet's service() method, which dispatches to the matching doXxx() method (doGet(), doPost(), ...) based on the HTTP verb.

Conceptually, these objects only live for the duration of the request, until the response has been completed.
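To make the service() dispatch concrete, here is a small stand-in that runs without a container. MiniServlet, its int-returning handlers and ProductServlet are hypothetical simplifications, not the real Servlet API: the real methods take a request and response and write the status themselves. The 405 default for unimplemented handlers and 501 for unknown verbs mirror what HttpServlet does; HEAD, OPTIONS and TRACE handling are left out.

```java
public class ServiceDispatchSketch {
    // Hypothetical, simplified stand-in for HttpServlet (not the real Servlet API).
    // service() picks the handler from the HTTP method and returns a status code.
    abstract static class MiniServlet {
        int service(String method) {
            switch (method) {
                case "GET": return doGet();
                case "POST": return doPost();
                case "PUT": return doPut();
                case "DELETE": return doDelete();
                default: return 501; // Not Implemented: a method this sketch does not know
            }
        }

        // Handlers that are not overridden answer 405 Method Not Allowed.
        int doGet() { return 405; }
        int doPost() { return 405; }
        int doPut() { return 405; }
        int doDelete() { return 405; }
    }

    // Example subclass that only handles GET.
    static final class ProductServlet extends MiniServlet {
        @Override
        int doGet() { return 200; }
    }

    public static void main(String[] args) {
        MiniServlet servlet = new ProductServlet();
        for (String method : new String[] {"GET", "POST", "FOO"}) {
            System.out.println(method + " -> " + servlet.service(method));
        }
    }
}
```

Subclasses only override the verbs they support, and everything else falls through to the defaults.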

HttpSession

Shared across all requests in the same session. Created when a client first visits the web app and the session is first obtained via request.getSession(); the container typically tracks it with a session cookie (JSESSIONID).
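The cookie-based lookup can be sketched as a map from session ID to attributes. SessionStoreSketch and getOrCreate() are hypothetical names; a real container also handles expiry, invalidation and the Set-Cookie header itself.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

public class SessionStoreSketch {
    // Hypothetical session store: session ID (as carried in a JSESSIONID-style cookie) -> attributes.
    private final Map<String, Map<String, Object>> sessions = new ConcurrentHashMap<>();

    // Similar in spirit to request.getSession(): reuse the session named by the cookie, or create one.
    String getOrCreate(String sessionIdFromCookie) {
        if (sessionIdFromCookie != null && sessions.containsKey(sessionIdFromCookie)) {
            return sessionIdFromCookie;
        }
        String id = UUID.randomUUID().toString();
        sessions.put(id, new ConcurrentHashMap<>());
        return id; // a container would send this back to the client in a Set-Cookie header
    }

    // Attributes are shared by every request that presents the same session ID.
    Map<String, Object> attributes(String sessionId) {
        return sessions.get(sessionId);
    }

    public static void main(String[] args) {
        SessionStoreSketch store = new SessionStoreSketch();
        String first = store.getOrCreate(null);   // first visit: no cookie yet
        store.attributes(first).put("user", "ada");
        String second = store.getOrCreate(first); // later request carrying the cookie
        System.out.println(store.attributes(second).get("user"));
    }
}
```

The inner maps are concurrent because several requests in the same session can run at the same time.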

Threading

Servlets, Filters and Listeners are shared across all requests and sessions.

Multiple threads can call the same Servlet instance concurrently.

You should not keep request-specific state in instance variables:

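```java
import java.io.IOException;

import jakarta.servlet.ServletException;
import jakarta.servlet.http.HttpServlet;
import jakarta.servlet.http.HttpServletRequest;
import jakarta.servlet.http.HttpServletResponse;

public class ExampleServlet extends HttpServlet {

    private Object thisIsNOTThreadSafe; // one field, shared by every request thread

    @Override
    protected void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
        Object thisIsThreadSafe; // local variable, lives on the calling thread's stack

        thisIsNOTThreadSafe = request.getParameter("foo"); // BAD!! Shared among all requests!
        thisIsThreadSafe = request.getParameter("foo"); // OK, this is thread safe.
    }
}
```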
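The effect of sharing one instance across threads can be shown without a container. CountingHandler below is a hypothetical stand-in for a servlet and simulate() stands in for concurrent request threads. A plain int field loses updates under concurrent `++`, while an AtomicInteger does not.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class SharedStateDemo {
    // Stand-in for a servlet: a single instance handles requests from many threads.
    static final class CountingHandler {
        int unsafeHits;                                     // plain shared field: concurrent ++ can lose updates
        final AtomicInteger safeHits = new AtomicInteger(); // atomic shared counter: no lost updates

        void handle() {
            unsafeHits++;               // read-modify-write, not atomic
            safeHits.incrementAndGet(); // atomic increment
        }
    }

    // Simulates `threads` request threads, each calling handle() `requestsPerThread` times.
    static CountingHandler simulate(int threads, int requestsPerThread) {
        CountingHandler handler = new CountingHandler();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.execute(() -> {
                for (int i = 0; i < requestsPerThread; i++) {
                    handler.handle();
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(30, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return handler;
    }

    public static void main(String[] args) {
        CountingHandler h = simulate(8, 100_000);
        // safeHits is always 800000; unsafeHits can come out lower because increments raced.
        System.out.println("safe=" + h.safeHits.get() + " unsafe=" + h.unsafeHits);
    }
}
```

Local variables, as in the ExampleServlet above, avoid the problem entirely. Thread-safe types are only needed for state that genuinely has to be shared.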

Web Server Architecture

Here we'll take the Eclipse Jetty project as an example.

It's also a good example of a pretty nicely modularized Maven project.

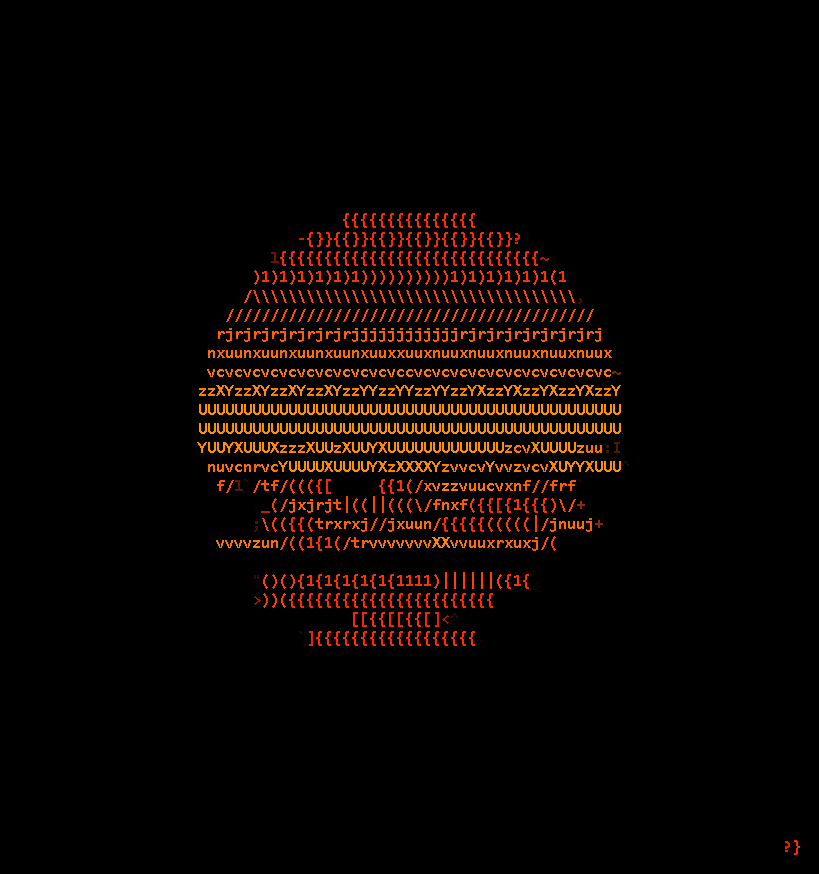

Jetty Component Architecture

Jetty's HTTP clients and servers are built by composing various components.

Applications are built as component trees: components have a lifecycle and can have event listeners.

Jetty Threading Architecture

Jetty produces Tasks, which can wrap an NIO selection event or blocking application code (e.g. a servlet being invoked to handle an HTTP request).

Tasks are run by a thread pool, which has to absorb request bursts and also process I/O failures.

The other thing to consider here is that multiplexed protocol implementations must be able to write in order to make progress on reads. For example, an HTTP2 implementation must be able to send WINDOW_UPDATE frames, otherwise the peer runs out of window and stops sending DATA.

A Task can be consumed in one of three ways:

Produce-Consume

The producer thread loops to produce a Task, which is then run on that same producer thread. This is the thread-per-selector mode under NIO.

- Pro: cache efficient, since the Task runs on the same CPU that produced it

- Con: head-of-line blocking, since a Task being processed delays every subsequent Task

Suited to scenarios where head-of-line blocking is semantically acceptable for the domain.

Produce-Execute-Consume

The producer thread loops to produce Tasks, which are submitted to an Executor: each Task is queued and later executed by a worker thread, much like CompletableFuture.runAsync(task, executor).

- Cache inefficient: the Task is accessed and processed by a non-producing thread, so its data won't be in that thread's caches

- Unbounded Task queue

- Queuing latency

Execute-Produce-Consume

The producer loops to produce a Task. Further production is then handed off to another thread via an Executor, while the current thread (the original producer) executes the Task itself.

The Task executes on the thread that produced it, so this is more cache efficient, and because another thread carries on producing, a slow Task does not hold up production.
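The three modes above can be sketched side by side. This is plain java.util.concurrent, not Jetty's code: Producer, the method names and demo() are hypothetical. The producer is a thread-safe queue because, in Execute-Produce-Consume, production moves from thread to thread.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executor;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class ExecutionStrategySketch {
    // Hypothetical producer: returns the next Task, or null when none is ready.
    interface Producer {
        Runnable produce();
    }

    // Produce-Consume: the producing thread runs every Task itself.
    static void produceConsume(Producer producer) {
        Runnable task;
        while ((task = producer.produce()) != null) {
            task.run();
        }
    }

    // Produce-Execute-Consume: every Task is queued to the executor and run by a worker thread.
    static void produceExecuteConsume(Producer producer, Executor executor) {
        Runnable task;
        while ((task = producer.produce()) != null) {
            executor.execute(task);
        }
    }

    // Execute-Produce-Consume: hand further production to another thread, then run this Task here.
    static void executeProduceConsume(Producer producer, Executor executor) {
        Runnable task = producer.produce();
        if (task == null) {
            return;
        }
        executor.execute(() -> executeProduceConsume(producer, executor));
        task.run(); // consumed by the thread that produced it
    }

    // Runs n counting Tasks through the named strategy and returns how many ran.
    static int demo(String mode, int n) {
        AtomicInteger ran = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(n);
        Queue<Runnable> tasks = new ConcurrentLinkedQueue<>();
        for (int i = 0; i < n; i++) {
            tasks.add(() -> {
                ran.incrementAndGet();
                done.countDown();
            });
        }
        Producer producer = tasks::poll; // thread-safe, since EPC produces from several threads
        ExecutorService pool = Executors.newFixedThreadPool(4);
        try {
            switch (mode) {
                case "PC": produceConsume(producer); break;
                case "PEC": produceExecuteConsume(producer, pool); break;
                case "EPC": executeProduceConsume(producer, pool); break;
                default: throw new IllegalArgumentException("unknown mode " + mode);
            }
            done.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            pool.shutdown();
        }
        return ran.get();
    }

    public static void main(String[] args) {
        for (String mode : new String[] {"PC", "PEC", "EPC"}) {
            System.out.println(mode + " ran " + demo(mode, 100) + " tasks");
        }
    }
}
```

All three modes run the same Tasks. They differ only in which thread runs each Task and whether a queue sits in between.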

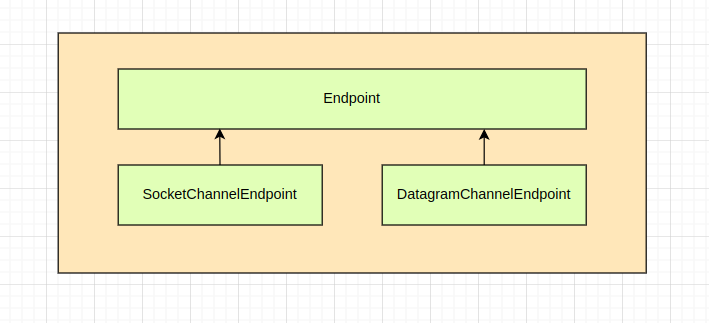

Jetty IO Architecture

Jetty wraps the NIO Selector in its own classes:

- Create a SocketChannel

- Pass the SocketChannel to the SelectorManager

- The SelectorManager passes it to one of its ManagedSelectors

- The ManagedSelector registers the SocketChannel with its NIO Selector
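Underneath those wrappers sits the standard java.nio selector loop. The sketch below shows the accept and register steps with plain JDK classes only; it is not Jetty code. acceptAndRead() is a hypothetical helper that handles ASCII messages only and uses a blocking client for brevity.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;

public class SelectorLoopSketch {
    // Accepts one connection and reads an ASCII message using a single selector loop.
    static String acceptAndRead(String message) {
        SocketChannel accepted = null;
        try (Selector selector = Selector.open();
             ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress("127.0.0.1", 0)); // port 0: any free port
            server.configureBlocking(false);
            server.register(selector, SelectionKey.OP_ACCEPT);

            // Client side (blocking, for brevity): connect and send the message.
            try (SocketChannel client = SocketChannel.open(server.getLocalAddress())) {
                client.write(ByteBuffer.wrap(message.getBytes(StandardCharsets.US_ASCII)));
                StringBuilder received = new StringBuilder();
                for (int round = 0; round < 100 && received.length() < message.length(); round++) {
                    selector.select(1000); // wait up to 1s for ready channels
                    Iterator<SelectionKey> keys = selector.selectedKeys().iterator();
                    while (keys.hasNext()) {
                        SelectionKey key = keys.next();
                        keys.remove();
                        if (key.isAcceptable()) {
                            SocketChannel channel = server.accept(); // may be null in non-blocking mode
                            if (channel != null) {
                                channel.configureBlocking(false);
                                channel.register(selector, SelectionKey.OP_READ); // register with the Selector
                                accepted = channel;
                            }
                        } else if (key.isReadable()) {
                            ByteBuffer buffer = ByteBuffer.allocate(256);
                            if (((SocketChannel) key.channel()).read(buffer) < 0) {
                                key.cancel(); // peer closed the connection
                                continue;
                            }
                            buffer.flip();
                            received.append(StandardCharsets.US_ASCII.decode(buffer));
                        }
                    }
                }
                return received.toString();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            closeQuietly(accepted);
        }
    }

    private static void closeQuietly(SocketChannel channel) {
        if (channel == null) return;
        try {
            channel.close();
        } catch (IOException ignored) {
            // nothing useful to do in a sketch
        }
    }

    public static void main(String[] args) {
        System.out.println(acceptAndRead("hello"));
    }
}
```

In Jetty the loop body would produce Tasks rather than reading inline, and those Tasks would be consumed using one of the strategies described earlier.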

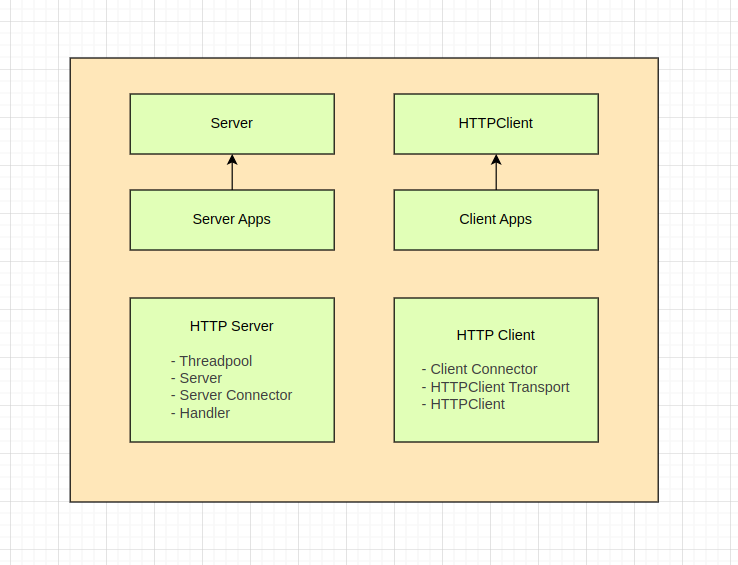

Endpoints and Connections

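SocketChannels get wrapped into Endpoints and Connections.

- Endpoint: abstraction for reading and writing bytes

- Connection: abstraction for reading from an Endpoint and deserializing the data, i.e. reading and parsing incoming bytes according to a particular protocol

However your writing abstraction is implemented, the bytes are eventually written out via the Endpoint.

Endpoints and Connections can be chained to implement more complex functionality, e.g. TLS. A TLS-level Connection reads encrypted bytes from the network Endpoint and exposes the decrypted bytes through its own Endpoint. The protocol Connection (e.g. HTTP) then reads from that Endpoint.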
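The chaining idea can be sketched with tiny hypothetical types. Endpoint, ArrayEndpoint, XorEndpoint and readLines() are illustrative only and are not Jetty's EndPoint/Connection API. The XOR layer is a toy stand-in for TLS decryption, chosen only because it fits in a few lines.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

public class EndpointChainSketch {
    // Hypothetical Endpoint: fills a buffer with available bytes, returns -1 at end of stream.
    interface Endpoint {
        int fill(ByteBuffer into);
    }

    // "Network" endpoint over a fixed byte array, standing in for a SocketChannel.
    static final class ArrayEndpoint implements Endpoint {
        private final ByteBuffer source;
        ArrayEndpoint(byte[] bytes) { this.source = ByteBuffer.wrap(bytes); }

        public int fill(ByteBuffer into) {
            if (!source.hasRemaining()) return -1;
            int n = 0;
            while (into.hasRemaining() && source.hasRemaining()) {
                into.put(source.get());
                n++;
            }
            return n;
        }
    }

    // Decorating endpoint: a toy XOR "decryption" layer standing in for TLS.
    static final class XorEndpoint implements Endpoint {
        private final Endpoint raw;
        private final byte key;
        XorEndpoint(Endpoint raw, byte key) { this.raw = raw; this.key = key; }

        public int fill(ByteBuffer into) {
            int start = into.position();
            int n = raw.fill(into);
            for (int i = start; i < start + Math.max(n, 0); i++) {
                into.put(i, (byte) (into.get(i) ^ key)); // decode in place
            }
            return n;
        }
    }

    // Hypothetical Connection: reads from an Endpoint and parses newline-delimited ASCII lines.
    static List<String> readLines(Endpoint endpoint) {
        List<String> lines = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        ByteBuffer buffer = ByteBuffer.allocate(8); // small on purpose, so lines span several fills
        while (endpoint.fill(buffer) >= 0) {
            buffer.flip();
            while (buffer.hasRemaining()) {
                char c = (char) buffer.get();
                if (c == '\n') {
                    lines.add(current.toString());
                    current.setLength(0);
                } else {
                    current.append(c);
                }
            }
            buffer.clear();
        }
        if (current.length() > 0) lines.add(current.toString());
        return lines;
    }

    static byte[] xor(byte[] bytes, byte key) {
        byte[] out = new byte[bytes.length];
        for (int i = 0; i < bytes.length; i++) out[i] = (byte) (bytes[i] ^ key);
        return out;
    }

    public static void main(String[] args) {
        byte key = 0x5A;
        byte[] wire = xor("GET / HTTP/1.1\nHost: example\n".getBytes(StandardCharsets.US_ASCII), key);
        // Chain: raw endpoint -> "TLS" endpoint -> line-parsing connection.
        System.out.println(readLines(new XorEndpoint(new ArrayEndpoint(wire), key)));
    }
}
```

The parsing code never knows a decoding layer exists. It only sees an Endpoint, which is what makes the chaining composable.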

Content Source

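A non-blocking read model: read() returns a Content.Chunk, which can be a normal chunk or a failure chunk, or null when no content is available yet, in which case the reader calls demand() to be called back once there is.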

A content sink provides an offer() bytes abstraction.
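The read/demand pattern can be sketched with hypothetical types. Chunk, QueueSource, deliver() and Reader are illustrative and are not Jetty's Content classes. The point is that the reader never blocks: when read() returns null, it registers a callback and returns.

```java
import java.util.ArrayDeque;
import java.util.Deque;

public class ContentSourceSketch {
    // Hypothetical chunk: either data (possibly the last one) or a failure.
    record Chunk(String data, boolean last, Throwable failure) {
        static Chunk of(String data, boolean last) { return new Chunk(data, last, null); }
        static Chunk failed(Throwable failure) { return new Chunk(null, true, failure); }
    }

    // Hypothetical non-blocking source: read() never blocks and returns null when nothing is available.
    static final class QueueSource {
        private final Deque<Chunk> chunks = new ArrayDeque<>();
        private Runnable pendingDemand;

        synchronized Chunk read() {
            return chunks.poll();
        }

        // Ask to be called back once a chunk is available (immediately if one already is).
        void demand(Runnable callback) {
            boolean available;
            synchronized (this) {
                available = !chunks.isEmpty();
                if (!available) pendingDemand = callback;
            }
            if (available) callback.run();
        }

        // Producer side, e.g. the network delivering bytes.
        void deliver(Chunk chunk) {
            Runnable callback;
            synchronized (this) {
                chunks.add(chunk);
                callback = pendingDemand;
                pendingDemand = null;
            }
            if (callback != null) callback.run();
        }
    }

    // Reads until the last chunk or a failure, registering demand whenever it runs dry.
    static final class Reader implements Runnable {
        private final QueueSource source;
        final StringBuilder content = new StringBuilder();
        Throwable failure;
        boolean done;

        Reader(QueueSource source) { this.source = source; }

        @Override
        public void run() {
            while (true) {
                Chunk chunk = source.read();
                if (chunk == null) { // nothing yet: don't block, ask to be called back
                    source.demand(this);
                    return;
                }
                if (chunk.failure() != null) {
                    failure = chunk.failure();
                    done = true;
                    return;
                }
                content.append(chunk.data());
                if (chunk.last()) {
                    done = true;
                    return;
                }
            }
        }
    }

    public static void main(String[] args) {
        QueueSource source = new QueueSource();
        Reader reader = new Reader(source);
        reader.run(); // nothing available yet, so it only registers demand
        source.deliver(Chunk.of("hel", false));
        source.deliver(Chunk.of("lo", true));
        System.out.println(reader.content + " done=" + reader.done);
    }
}
```

A failure arrives through the same read() path as data, so the reader handles errors at the point where it consumes content.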

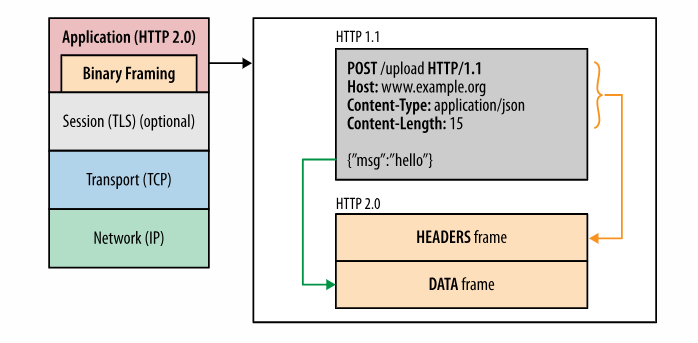

HTTP2

Builds upon the core concepts of HTTP1 (verbs, status codes, header fields etc) to enable:

- Multiplexing of requests and responses

- Compression of HTTP header fields (HPACK): a static table of common header fields, a dynamic table shared at the connection level, and Huffman encoding of string literals

- Server push (PUSH_PROMISE)

- Request priorities

- Framed binary encoding of messages in transit vs. newline delimited plaintext as in HTTP1

- Persistent, single TCP connection reuse

Source: HPBN
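Two building blocks of HPACK (RFC 7541) fit in a short sketch: prefix-integer encoding and indexed header fields that refer to the static table. HpackSketch and its method names are hypothetical. Only the first few static-table entries are listed, and the dynamic table and Huffman coding are left out. The 1337 / 5-bit prefix case is the worked example from the RFC.

```java
import java.util.ArrayList;
import java.util.List;

public class HpackSketch {
    // First entries of the HPACK static table (index 0 is unused).
    static final String[] STATIC_TABLE_START = {
        null, ":authority", ":method GET", ":method POST", ":path /",
        ":path /index.html", ":scheme http", ":scheme https", ":status 200"
    };

    // Integer with an N-bit prefix: small values fit in the prefix, larger ones spill into
    // continuation bytes carrying 7 bits each (high bit set = more bytes follow).
    static int[] encodeInteger(int value, int prefixBits, int flagBits) {
        int max = (1 << prefixBits) - 1;
        List<Integer> out = new ArrayList<>();
        if (value < max) {
            out.add(flagBits | value);
        } else {
            out.add(flagBits | max);
            value -= max;
            while (value >= 128) {
                out.add((value % 128) + 128);
                value /= 128;
            }
            out.add(value);
        }
        return out.stream().mapToInt(Integer::intValue).toArray();
    }

    static int decodeInteger(int[] bytes, int prefixBits) {
        int max = (1 << prefixBits) - 1;
        int value = bytes[0] & max;
        if (value < max) return value;
        int shift = 0;
        for (int i = 1; i < bytes.length; i++) {
            value += (bytes[i] & 0x7F) << shift;
            if ((bytes[i] & 0x80) == 0) break;
            shift += 7;
        }
        return value;
    }

    // Indexed header field: a 1 bit followed by the table index as a 7-bit-prefix integer.
    static int[] indexedField(int index) {
        return encodeInteger(index, 7, 0x80);
    }

    public static void main(String[] args) {
        System.out.println(java.util.Arrays.toString(encodeInteger(1337, 5, 0))); // [31, 154, 10]
        System.out.println(decodeInteger(new int[] {31, 154, 10}, 5));         // 1337
        System.out.println(STATIC_TABLE_START[2] + " -> " + Integer.toHexString(indexedField(2)[0])); // :method GET -> 82
    }
}
```

A whole `:method GET` header therefore costs a single byte (0x82) on the wire, which is where much of HPACK's saving comes from.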


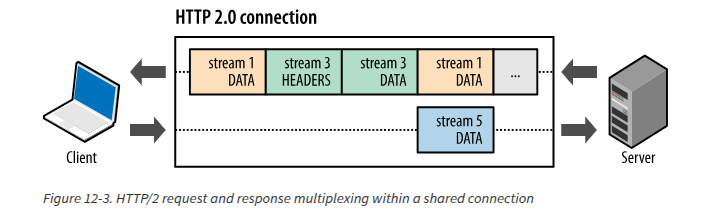

Streams, Messages and Frames

Each message belongs to a particular stream. Messages are encoded into frames, which are sent over a single TCP connection that supports bidirectional communication.

Each stream is identified by its ID and has optional priority information.

Each message is a logical HTTP message, for example a request or a response. Each frame header identifies, at minimum, the stream the frame belongs to.

Binary framing allows frames from multiple messages, on multiple streams, to be interleaved over the same TCP connection.

Source: HPBN
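Because every frame carries its stream ID, the receiver can reassemble interleaved messages. The Frame record below is a simplified, hypothetical model with a stream ID, a text payload and an END_STREAM flag, not the wire format.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class FrameDemuxSketch {
    // Simplified frame: just the stream ID, a text payload and the END_STREAM flag.
    record Frame(int streamId, String payload, boolean endStream) {}

    // Reassembles each stream's message from frames that arrive interleaved on one connection.
    static Map<Integer, String> demux(List<Frame> frames) {
        Map<Integer, StringBuilder> inProgress = new TreeMap<>();
        Map<Integer, String> complete = new TreeMap<>();
        for (Frame frame : frames) {
            inProgress.computeIfAbsent(frame.streamId(), id -> new StringBuilder()).append(frame.payload());
            if (frame.endStream()) {
                complete.put(frame.streamId(), inProgress.remove(frame.streamId()).toString());
            }
        }
        return complete;
    }

    public static void main(String[] args) {
        // Two responses (streams 1 and 3) whose frames are interleaved on the wire.
        List<Frame> wire = List.of(
            new Frame(1, "<html>", false),
            new Frame(3, "body {", false),
            new Frame(1, "</html>", true),
            new Frame(3, "}", true));
        System.out.println(demux(wire)); // {1=<html></html>, 3=body {}}
    }
}
```

Neither response has to wait for the other to finish, which is the multiplexing win over HTTP1's one-response-at-a-time connections.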


Stream Prioritization

Each stream can be assigned a weight (1 to 256) and a dependency on another stream. Together these form a prioritization tree, which guides the order in which frames are interleaved and delivered.

Priorities are a preference, not a requirement with guarantees.
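The tree can be modelled with a node type that holds a weight and its dependents. This follows the original RFC 7540 scheme, which RFC 9113 later deprecated in favour of the simpler RFC 9218 scheme. Stream, siblingShares() and serviceOrder() are hypothetical names. The breadth-first order is a simplification of "parents before dependents". The A=12 / B=4 split matches the classic example of the weights being divided proportionally.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class PriorityTreeSketch {
    // A stream in an RFC 7540-style dependency tree; weights range from 1 to 256.
    static final class Stream {
        final String name;
        final int weight;
        final List<Stream> dependents = new ArrayList<>();

        Stream(String name, int weight) {
            if (weight < 1 || weight > 256) throw new IllegalArgumentException("weight must be 1..256");
            this.name = name;
            this.weight = weight;
        }

        Stream addDependent(Stream child) {
            dependents.add(child);
            return child;
        }
    }

    // Siblings split their parent's resources in proportion to their weights.
    static Map<String, Double> siblingShares(Stream parent) {
        int total = parent.dependents.stream().mapToInt(s -> s.weight).sum();
        Map<String, Double> shares = new LinkedHashMap<>();
        for (Stream s : parent.dependents) {
            shares.put(s.name, (double) s.weight / total);
        }
        return shares;
    }

    // Simplified ordering: a parent is served before its dependents (breadth-first walk).
    static List<String> serviceOrder(Stream root) {
        List<String> order = new ArrayList<>();
        Deque<Stream> queue = new ArrayDeque<>(root.dependents);
        while (!queue.isEmpty()) {
            Stream s = queue.poll();
            order.add(s.name);
            queue.addAll(s.dependents);
        }
        return order;
    }

    public static void main(String[] args) {
        Stream root = new Stream("root", 16);                  // virtual root (stream 0); 16 is the default weight
        Stream d = root.addDependent(new Stream("D", 16));
        Stream c = d.addDependent(new Stream("C", 16));
        c.addDependent(new Stream("A", 12));
        c.addDependent(new Stream("B", 4));
        System.out.println(serviceOrder(root));                // [D, C, A, B]
        System.out.println(siblingShares(c));                  // {A=0.75, B=0.25}
    }
}
```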

Browsers can prioritize requests based on several factors:

- Type of asset e.g. JS, HTML, CSS

- Location on the page

- Previous history or usage

This also impacts metrics like FCP (First Contentful Paint) and CLS (Cumulative Layout Shift).

Stream Flow Control

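In HTTP2, flow control happens per stream and per connection, on top of TCP's own flow control. It uses a window based on bytes rather than messages. The sender's window shrinks by the size of each DATA frame it sends and grows again when the receiver sends a WINDOW_UPDATE frame.

When the connection is established, each endpoint's SETTINGS frame advertises the initial stream window size the peer may use when sending to it (SETTINGS_INITIAL_WINDOW_SIZE, 65,535 bytes by default). The window is therefore set independently in each direction.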
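The sender-side bookkeeping can be sketched as follows. Window and trySendData() are hypothetical names. The constants follow the HTTP2 spec: a default window of 65,535 bytes, a maximum of 2^31 - 1, and an overflow being a FLOW_CONTROL_ERROR. A DATA frame must fit in both the stream window and the connection window. The sketch does not model padding or windows going negative after a SETTINGS change.

```java
public class FlowControlSketch {
    static final int DEFAULT_INITIAL_WINDOW = 65_535; // default initial window size in HTTP2
    static final long MAX_WINDOW = Integer.MAX_VALUE; // windows may not exceed 2^31 - 1

    // One flow-control window as tracked by the sender (one per stream plus one per connection).
    static final class Window {
        private long size;
        Window(int initialSize) { this.size = initialSize; }
        long size() { return size; }

        // A WINDOW_UPDATE from the receiver adds credit; overflowing is a FLOW_CONTROL_ERROR.
        void onWindowUpdate(int increment) {
            if (increment < 1) throw new IllegalArgumentException("increment must be at least 1");
            if (size + increment > MAX_WINDOW) throw new IllegalStateException("FLOW_CONTROL_ERROR");
            size += increment;
        }
    }

    // A DATA frame may only be sent if it fits in BOTH the stream and the connection window.
    static boolean trySendData(int payloadBytes, Window stream, Window connection) {
        if (payloadBytes > stream.size() || payloadBytes > connection.size()) {
            return false; // must wait for a WINDOW_UPDATE
        }
        stream.size -= payloadBytes;
        connection.size -= payloadBytes;
        return true;
    }

    public static void main(String[] args) {
        Window connection = new Window(DEFAULT_INITIAL_WINDOW);
        Window stream = new Window(DEFAULT_INITIAL_WINDOW);
        System.out.println(trySendData(60_000, stream, connection)); // true: fits in 65,535
        System.out.println(trySendData(10_000, stream, connection)); // false: only 5,535 left
        stream.onWindowUpdate(10_000);
        connection.onWindowUpdate(10_000);
        System.out.println(trySendData(10_000, stream, connection)); // true after WINDOW_UPDATE
    }
}
```

The receiver controls the pace simply by choosing when to send WINDOW_UPDATE frames.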

Server Push

The server can send multiple responses for a single request. It signals its intent with PUSH_PROMISE frames, which have to be sent before the DATA frames of the response that references the pushed resources.

Each resource being pushed is a stream - that means it can be individually prioritized, multiplexed and processed by the client.

When a client receives a PUSH_PROMISE it can decline it via RST_STREAM (e.g. because it has already cached the resource).

Frame Types

DATA - Used to transport HTTP message bodies

HEADERS - Used to communicate header fields for a stream

PRIORITY - Used to communicate sender-advised priority of a stream

RST_STREAM - Used to signal termination of a stream

SETTINGS - Used to communicate configuration parameters for the connection

PUSH_PROMISE - Used to signal a promise to serve the referenced resource

PING - Used to measure the roundtrip time and perform "liveness" checks

GOAWAY - Used to inform the peer to stop creating streams for current connection

WINDOW_UPDATE - Used to implement per-stream and per-connection flow control

CONTINUATION - Used to continue a sequence of header block fragments
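Every frame starts with a fixed 9-byte header: a 24-bit payload length, an 8-bit type, 8-bit flags, and a reserved bit followed by a 31-bit stream ID. The sketch below pairs that layout with the type codes listed above. FrameHeaderSketch and its methods are hypothetical. Unlike a real implementation, which must ignore unknown frame types, it rejects them.

```java
import java.nio.ByteBuffer;

public class FrameHeaderSketch {
    // Frame type codes as defined in the HTTP2 specification.
    enum Type {
        DATA(0x0), HEADERS(0x1), PRIORITY(0x2), RST_STREAM(0x3), SETTINGS(0x4),
        PUSH_PROMISE(0x5), PING(0x6), GOAWAY(0x7), WINDOW_UPDATE(0x8), CONTINUATION(0x9);

        final int code;
        Type(int code) { this.code = code; }

        static Type fromCode(int code) {
            for (Type t : values()) {
                if (t.code == code) return t;
            }
            // Real implementations must ignore unknown frame types; this sketch just rejects them.
            throw new IllegalArgumentException("unknown frame type " + code);
        }
    }

    record Header(int length, Type type, int flags, int streamId) {}

    // 9-byte header: 24-bit length, 8-bit type, 8-bit flags, 1 reserved bit + 31-bit stream ID.
    static byte[] encode(Header h) {
        ByteBuffer buf = ByteBuffer.allocate(9);
        buf.put((byte) (h.length() >>> 16)).put((byte) (h.length() >>> 8)).put((byte) h.length());
        buf.put((byte) h.type().code).put((byte) h.flags());
        buf.putInt(h.streamId() & 0x7FFF_FFFF); // reserved bit sent as 0
        return buf.array();
    }

    static Header decode(byte[] bytes) {
        ByteBuffer buf = ByteBuffer.wrap(bytes);
        int length = ((buf.get() & 0xFF) << 16) | ((buf.get() & 0xFF) << 8) | (buf.get() & 0xFF);
        Type type = Type.fromCode(buf.get() & 0xFF);
        int flags = buf.get() & 0xFF;
        int streamId = buf.getInt() & 0x7FFF_FFFF; // ignore the reserved bit
        return new Header(length, type, flags, streamId);
    }

    public static void main(String[] args) {
        // A 16-byte HEADERS frame on stream 1 with the END_HEADERS flag (0x4).
        byte[] wire = encode(new Header(16, Type.HEADERS, 0x4, 1));
        System.out.println(java.util.Arrays.toString(wire)); // [0, 0, 16, 1, 4, 0, 0, 0, 1]
        System.out.println(decode(wire));
    }
}
```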

Stream ID Collisions

- Client initiated streams - odd numbered stream IDs

- Server initiated streams - even numbered stream IDs

- Stream ID 0 - reserved for connection-level frames such as SETTINGS, PING and GOAWAY
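Keeping the two parities separate means each side can allocate IDs without coordinating with the other. StreamIdAllocator is a hypothetical name. Stream IDs only ever increase, and once the 31-bit space runs out a new connection is needed, which the sketch detects through int overflow.

```java
public class StreamIdSketch {
    // Hypothetical allocator: clients use odd IDs starting at 1, servers even IDs starting at 2.
    static final class StreamIdAllocator {
        private int next;
        StreamIdAllocator(boolean clientSide) { next = clientSide ? 1 : 2; }

        int nextId() {
            // IDs only increase; after 2^31 - 1 the int overflows to negative and no IDs remain.
            if (next <= 0) throw new IllegalStateException("stream IDs exhausted, open a new connection");
            int id = next;
            next += 2;
            return id;
        }
    }

    static boolean isClientInitiated(int streamId) { return streamId % 2 == 1; }

    static boolean isConnectionLevel(int streamId) { return streamId == 0; }

    public static void main(String[] args) {
        StreamIdAllocator client = new StreamIdAllocator(true);
        StreamIdAllocator server = new StreamIdAllocator(false);
        System.out.println(client.nextId() + " " + client.nextId() + " " + server.nextId()); // 1 3 2
    }
}
```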

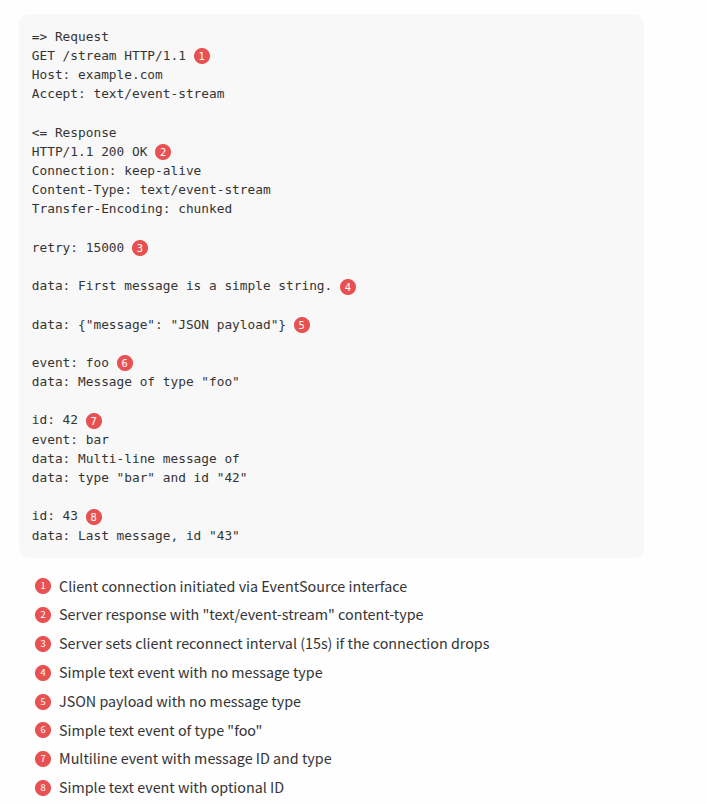

SSE

Streaming text over HTTP using a content type of "text/event-stream", consumed on the client side via the EventSource API. Good for unidirectional server-to-client communication, e.g. streaming data updates, LLM output and MCP servers.

The EventSource interface lets you receive server push notifications as DOM events. It provides auto-reconnect and tracks the last seen event ID, which it sends to the server in a Last-Event-ID header when reconnecting.

SSE is effectively an efficient, cross-browser implementation of XHR streaming. See XHR for more details.

Events sent from the server can have optional id and event fields alongside their data. Each field sits on its own line, and a blank line (two consecutive newlines) marks the end of an event.

Source: HPBN


Please note: I think the above image is a bit out of date; SSE can also run over HTTP2, not only HTTP1.1.
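The wire format is simple enough to format and parse by hand. SseSketch, format() and parse() are hypothetical helpers. The parser only handles "\n" line endings and the id, event and data fields. The real EventSource algorithm also handles "\r\n", the retry field and more edge cases.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class SseSketch {
    // Formats one event in text/event-stream form: one "field: value" per line, blank line at the end.
    static String format(String id, String event, String data) {
        StringBuilder sb = new StringBuilder();
        if (id != null) sb.append("id: ").append(id).append('\n');
        if (event != null) sb.append("event: ").append(event).append('\n');
        for (String line : data.split("\n", -1)) {
            sb.append("data: ").append(line).append('\n'); // multi-line data uses several data fields
        }
        return sb.append('\n').toString();
    }

    // Minimal parser: "\n" line endings only; a blank line dispatches the event built so far.
    static List<Map<String, String>> parse(String stream) {
        List<Map<String, String>> events = new ArrayList<>();
        Map<String, String> current = new LinkedHashMap<>();
        for (String line : stream.split("\n", -1)) {
            if (line.isEmpty()) {
                if (!current.isEmpty()) events.add(current);
                current = new LinkedHashMap<>();
            } else if (!line.startsWith(":")) { // lines starting with ':' are comments
                int colon = line.indexOf(':');
                String field = colon < 0 ? line : line.substring(0, colon);
                String value = colon < 0 ? "" : line.substring(colon + 1);
                if (value.startsWith(" ")) value = value.substring(1); // one leading space is dropped
                if (field.equals("data")) {
                    current.merge("data", value, (a, b) -> a + "\n" + b);
                } else {
                    current.put(field, value);
                }
            }
        }
        return events; // an unterminated trailing event is not dispatched
    }

    public static void main(String[] args) {
        String wire = format("1", "tick", "hello") + format("2", null, "line one\nline two");
        System.out.print(wire);
        System.out.println(parse(wire));
    }
}
```

The id field is what EventSource remembers and sends back as Last-Event-ID after a reconnect.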

HTTP3

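Uses QUIC, which runs over UDP, instead of TCP.

Latency is one of QUIC's stated goals. It combines the transport and TLS handshakes to cut connection setup round trips, and packet loss on one stream no longer stalls the other streams the way a lost TCP segment does.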

Links

https://hpbn.co/http2/ - Ilya Grigorik (Web Performance Engineer at Google, Co-Chair W3C Performance Working Group), High Performance Browser Networking

https://jetty.org/docs/jetty/12/programming-guide/arch/bean.html - Jetty Architecture documentation

https://stackoverflow.com/questions/3106452/how-do-servlets-work-instantiation-sessions-shared-variables-and-multithreadi - great answer from Bauke Scholtz